Abstract

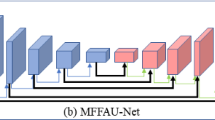

Kidney cancer is one of the most common cancers. Precise delineation and localization of the lesion area play a crucial role in its diagnosis and treatment, and deep learning-based automatic medical image segmentation can support the diagnosis. The 3D nnU-Net, which is built on convolutional layers, is widely used in medical image segmentation. However, the fixed receptive field of convolutional neural networks introduces an inductive bias that limits their ability to capture long-range spatial information in input images. The Swin Transformer addresses this limitation through the global contextual modeling ability of self-attention, but it requires a large amount of training data and is weak at encoding local features. To overcome these limitations, we propose a hybrid network called STransUnet, which combines nnU-Net with the Swin Transformer. STransUnet retains the local feature encoding capability of nnU-Net while using the Swin Transformer to capture a broader range of global contextual information, resulting in stronger modeling ability for image segmentation tasks. In the KiTS23 challenge, our segmentation achieved an average Dice of 0.801 and an average Surface Dice of 0.680 on the test set, ranking 6th and 8th respectively, with a Tumor Dice of 0.687.
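For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of the standard Dice coefficient between a predicted and a reference binary mask. It is illustrative only: the function name `dice_coefficient`, the flat-list mask representation, and the convention that two empty masks score 1.0 are assumptions made here, and the sketch does not reproduce the official KiTS23 evaluation code or the Surface Dice metric.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |P ∩ T| / (|P| + |T|) for two binary masks.

    Masks are flat sequences of 0/1 voxel labels (an assumption of this
    sketch; real pipelines usually operate on 3D arrays).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Assumed convention: two empty masks are treated as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy example: 3 predicted voxels, 3 reference voxels, 2 in common.
pred = [0, 1, 1, 1, 0, 0]
target = [0, 1, 1, 0, 1, 0]
score = dice_coefficient(pred, target)
print(round(score, 3))
```

A score of 1.0 means the two masks coincide and 0.0 means they do not overlap at all; a per-class score such as the Tumor Dice is obtained by applying the same formula to the mask of that class.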

Acknowledgment

This work is supported by the National Key Research and Development Program of China (Grant No. 2021YFC2009200).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Qian, L., Luo, L., Zhong, Y., Zhong, D. (2024). A Hybrid Network Based on nnU-Net and Swin Transformer for Kidney Tumor Segmentation. In: Heller, N., et al. Kidney and Kidney Tumor Segmentation. KiTS 2023. Lecture Notes in Computer Science, vol 14540. Springer, Cham. https://doi.org/10.1007/978-3-031-54806-2_5

DOI: https://doi.org/10.1007/978-3-031-54806-2_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-54805-5

Online ISBN: 978-3-031-54806-2

eBook Packages: Computer Science, Computer Science (R0)