Abstract

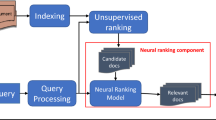

Neural ranking models have become increasingly popular for real-world search and recommendation systems in recent years. Unlike their tree-based counterparts, neural models are much less interpretable. That is, it is very difficult to understand their inner workings and answer questions like how do they make their ranking decisions? or what document features do they find important? This is particularly disadvantageous since interpretability is highly important for real-world systems. In this work, we explore feature selection for neural learning-to-rank (LTR). In particular, we investigate six widely-used methods from the field of interpretable machine learning (ML) and introduce our own modification, to select the input features that are most important to the ranking behavior. To understand whether these methods are useful for practitioners, we further study whether they contribute to efficiency enhancement. Our experimental results reveal a large feature redundancy in several LTR benchmarks: the local selection method TabNet can achieve optimal ranking performance with less than 10 features; the global methods, particularly our G-L2x, require slightly more selected features, but exhibit higher potential in improving efficiency. We hope that our analysis of these feature selection methods will bring the fields of interpretable ML and LTR closer together.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

Notes

- 1.

MQ2008 is omitted from cost analysis since no associated cost information is available.

- 2.

References

Abdul, A.M., von der Weth, C., Kankanhalli, M.S., Lim, B.Y.: COGAM: measuring and moderating cognitive load in machine learning model explanations. In: Bernhaupt, R., et al. (eds.) CHI 2020: CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020, pp. 1–14. ACM (2020). https://doi.org/10.1145/3313831.3376615

Alvarez-Melis, D., Jaakkola, T.S.: Towards robust interpretability with self-explaining neural networks. In: Bengio, S., Wallach, H.M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018(December), pp. 3–8, 2018. Montréal, Canada, pp. 7786–7795 (2018). https://proceedings.neurips.cc/paper/2018/hash/3e9f0fc9b2f89e043bc6233994dfcf76-Abstract.html

Arapakis, I., Bai, X., Cambazoglu, B.B.: Impact of response latency on user behavior in web search. In: Geva, S., Trotman, A., Bruza, P., Clarke, C.L.A., Järvelin, K. (eds.) The 37th International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2014, Gold Coast, QLD, Australia - 06–11 July 2014, pp. 103–112. ACM (2014). https://doi.org/10.1145/2600428.2609627

Arik, S.Ö., Pfister, T.: TabNet: attentive interpretable tabular learning. In: Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, 2–9 February 2021, pp. 6679–6687. AAAI Press (2021). https://doi.org/10.1609/AAAI.V35I8.16826

Bai, X., Cambazoglu, B.B.: Impact of response latency on sponsored search. Inf. Process. Manag. 56(1), 110–129 (2019). https://doi.org/10.1016/J.IPM.2018.10.005

Balin, M.F., Abid, A., Zou, J.Y.: Concrete autoencoders: differentiable feature selection and reconstruction. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9–15 June 2019, Long Beach, California, USA. Proceedings of Machine Learning Research, vol. 97, pp. 444–453. PMLR (2019). http://proceedings.mlr.press/v97/balin19a.html

Barreda-Ángeles, M., Arapakis, I., Bai, X., Cambazoglu, B.B., Pereda-Baños, A.: Unconscious physiological effects of search latency on users and their click behaviour. In: Baeza-Yates, R., Lalmas, M., Moffat, A., Ribeiro-Neto, B.A. (eds.) Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, Santiago, Chile, 9–13 August 2015, pp. 203–212. ACM (2015). https://doi.org/10.1145/2766462.2767719

Bruch, S.: An alternative cross entropy loss for learning-to-rank. In: Leskovec, J., Grobelnik, M., Najork, M., Tang, J., Zia, L. (eds.) WWW 2021: The Web Conference 2021, Virtual Event / Ljubljana, Slovenia, 19–23 April 2021, pp. 118–126. ACM / IW3C2 (2021). https://doi.org/10.1145/3442381.3449794

Bruch, S., Wang, X., Bendersky, M., Najork, M.: An analysis of the softmax cross entropy loss for learning-to-rank with binary relevance. In: Fang, Y., Zhang, Y., Allan, J., Balog, K., Carterette, B., Guo, J. (eds.) Proceedings of the 2019 ACM SIGIR International Conference on Theory of Information Retrieval, ICTIR 2019, Santa Clara, CA, USA, 2–5 October 2019, pp. 75–78. ACM (2019). https://doi.org/10.1145/3341981.3344221

Burges, C.J.C., Ragno, R., Le, Q.V.: Learning to rank with nonsmooth cost functions. In: Schölkopf, B., Platt, J.C., Hofmann, T. (eds.) Advances in Neural Information Processing Systems, vol. 19, Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, British Columbia, Canada, 4–7 December 2006, pp. 193–200. MIT Press (2006). https://proceedings.neurips.cc/paper/2006/hash/af44c4c56f385c43f2529f9b1b018f6a-Abstract.html

Burges, C.J.C., Shaked, T., Renshaw, E., Lazier, A., Deeds, M., Hamilton, N., Hullender, G.N.: Learning to rank using gradient descent. In: Raedt, L.D., Wrobel, S. (eds.) Machine Learning, Proceedings of the Twenty-Second International Conference (ICML 2005), Bonn, Germany, 7–11 August 2005. ACM International Conference Proceeding Series, vol. 119, pp. 89–96. ACM (2005). https://doi.org/10.1145/1102351.1102363

Burges, C.J.: From ranknet to lambdarank to lambdamart: an overview. Learning 11(23–581), 81 (2010)

Cao, Z., Qin, T., Liu, T., Tsai, M., Li, H.: Learning to rank: from pairwise approach to listwise approach. In: Ghahramani, Z. (ed.) Machine Learning, Proceedings of the Twenty-Fourth International Conference (ICML 2007), Corvallis, Oregon, USA, 20–24 June 2007. ACM International Conference Proceeding Series, vol. 227, pp. 129–136. ACM (2007). https://doi.org/10.1145/1273496.1273513

Chapelle, O., Chang, Y.: Yahoo! learning to rank challenge overview. In: Chapelle, O., Chang, Y., Liu, T. (eds.) Proceedings of the Yahoo! Learning to Rank Challenge, held at ICML 2010, Haifa, Israel, 25 June 2010. JMLR Proceedings, vol. 14, pp. 1–24. JMLR.org (2011). http://proceedings.mlr.press/v14/chapelle11a.html

Chapelle, O., Keerthi, S.S.: Efficient algorithms for ranking with SVMs. Inf. Retr. 13(3), 201–215 (2010). https://doi.org/10.1007/S10791-009-9109-9

Chen, J., Song, L., Wainwright, M.J., Jordan, M.I.: Learning to explain: an information-theoretic perspective on model interpretation. In: Dy, J.G., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, 10–15 July 2018. Proceedings of Machine Learning Research, vol. 80, pp. 882–891. PMLR (2018). http://proceedings.mlr.press/v80/chen18j.html

Dato, D., et al.: Fast ranking with additive ensembles of oblivious and non-oblivious regression trees. ACM Trans. Inf. Syst. 35(2), 15:1–15:31 (2016). https://doi.org/10.1145/2987380

Du, M., Liu, N., Hu, X.: Techniques for interpretable machine learning. Commun. ACM 63(1), 68–77 (2020). https://doi.org/10.1145/3359786

Falcon, W., et al.: Pytorch lightning. GitHub 3, 6. https://github.com/PyTorchLightning/pytorch-lightning (2019)

Freund, Y., Iyer, R.D., Schapire, R.E., Singer, Y.: An efficient boosting algorithm for combining preferences. In: Shavlik, J.W. (ed.) Proceedings of the Fifteenth International Conference on Machine Learning (ICML 1998), Madison, Wisconsin, USA, 24–27 July 1998, pp. 170–178. Morgan Kaufmann (1998)

Gallagher, L., Chen, R., Blanco, R., Culpepper, J.S.: Joint optimization of cascade ranking models. In: Culpepper, J.S., Moffat, A., Bennett, P.N., Lerman, K. (eds.) Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, WSDM 2019, Melbourne, VIC, Australia, 11–15 February 2019, pp. 15–23. ACM (2019). https://doi.org/10.1145/3289600.3290986

Geng, X., Liu, T., Qin, T., Li, H.: Feature selection for ranking. In: Kraaij, W., de Vries, A.P., Clarke, C.L.A., Fuhr, N., Kando, N. (eds.) SIGIR 2007: Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Amsterdam, The Netherlands, 23–27 July 2007, pp. 407–414. ACM (2007). https://doi.org/10.1145/1277741.1277811

Gigli, A., Lucchese, C., Nardini, F.M., Perego, R.: Fast feature selection for learning to rank. In: Carterette, B., Fang, H., Lalmas, M., Nie, J. (eds.) Proceedings of the 2016 ACM on International Conference on the Theory of Information Retrieval, ICTIR 2016, Newark, DE, USA, 12–6 September 2016, pp. 167–170. ACM (2016). https://doi.org/10.1145/2970398.2970433

Jang, E., Gu, S., Poole, B.: Categorical reparametrization with gumble-softmax (2017)

Järvelin, K., Kekäläinen, J.: Cumulated gain-based evaluation of IR techniques. ACM Trans. Inf. Syst. 20(4), 422–446 (2002). https://doi.org/10.1145/582415.582418

Joachims, T.: Optimizing search engines using clickthrough data. In: Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 23–26 July 2002, Edmonton, Alberta, Canada, pp. 133–142. ACM (2002). https://doi.org/10.1145/775047.775067

Joachims, T.: Training linear SVMs in linear time. In: Eliassi-Rad, T., Ungar, L.H., Craven, M., Gunopulos, D. (eds.) Proceedings of the Twelfth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006, pp. 217–226. ACM (2006). https://doi.org/10.1145/1150402.1150429

Kaur, H., Nori, H., Jenkins, S., Caruana, R., Wallach, H.M., Vaughan, J.W.: Interpreting interpretability: understanding data scientists’ use of interpretability tools for machine learning. In: Bernhaupt, R., et al. (eds.) CHI 2020: CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020, pp. 1–14. ACM (2020). https://doi.org/10.1145/3313831.3376219

Ke, G., et al.: LightGBM: a highly efficient gradient boosting decision tree. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems, vol. 30: Annual Conference on Neural Information Processing Systems 2017(December), pp. 4–9, 2017. Long Beach, CA, USA, pp. 3146–3154 (2017). https://proceedings.neurips.cc/paper/2017/hash/6449f44a102fde848669bdd9eb6b76fa-Abstract.html

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. In: Bengio, Y., LeCun, Y. (eds.) 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014, Conference Track Proceedings (2014). http://arxiv.org/abs/1312.6114

Lai, H., Pan, Y., Liu, C., Lin, L., Wu, J.: Sparse learning-to-rank via an efficient primal-dual algorithm. IEEE Trans. Computers 62(6), 1221–1233 (2013). https://doi.org/10.1109/TC.2012.62

Lai, H., Pan, Y., Tang, Y., Yu, R.: FSMRank: feature selection algorithm for learning to rank. IEEE Trans. Neural Networks Learn. Syst. 24(6), 940–952 (2013). https://doi.org/10.1109/TNNLS.2013.2247628

Laporte, L., Flamary, R., Canu, S., Déjean, S., Mothe, J.: Nonconvex regularizations for feature selection in ranking with sparse SVM. IEEE Trans. Neural Networks Learn. Syst. 25(6), 1118–1130 (2014). https://doi.org/10.1109/TNNLS.2013.2286696

Lemhadri, I., Ruan, F., Abraham, L., Tibshirani, R.: LassoNet: a neural network with feature sparsity. J. Mach. Learn. Res. 22, 127:1–127:29 (2021). http://jmlr.org/papers/v22/20-848.html

Leonhardt, J., Rudra, K., Anand, A.: Extractive explanations for interpretable text ranking. ACM Trans. Inf. Syst. (2022). https://doi.org/10.1145/3576924

Li, P., Burges, C.J.C., Wu, Q.: McRank: learning to rank using multiple classification and gradient boosting. In: Platt, J.C., Koller, D., Singer, Y., Roweis, S.T. (eds.) Advances in Neural Information Processing Systems, vol. 20, Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems, Vancouver, British Columbia, Canada, 3–6 December 2007, pp. 897–904. Curran Associates, Inc. (2007). https://proceedings.neurips.cc/paper/2007/hash/b86e8d03fe992d1b0e19656875ee557c-Abstract.html

Liu, T.: Learning to rank for information retrieval. Found. Trends Inf. Retr. 3(3), 225–331 (2009). https://doi.org/10.1561/1500000016

Lucchese, C., Nardini, F.M., Orlando, S., Perego, R., Veneri, A.: ILMART: interpretable ranking with constrained lambdamart. In: SIGIR 2022: The 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022, pp. 2255–2259. ACM (2022). https://doi.org/10.1145/3477495.3531840

Lundberg, S.M., Lee, S.: A unified approach to interpreting model predictions. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems, vol. 30: Annual Conference on Neural Information Processing Systems 2017(December), pp. 4–9, 2017. Long Beach, CA, USA, pp. 4765–4774 (2017). https://proceedings.neurips.cc/paper/2017/hash/8a20a8621978632d76c43dfd28b67767-Abstract.html

Martins, A.F.T., Astudillo, R.F.: From softmax to sparsemax: a sparse model of attention and multi-label classification. In: Balcan, M., Weinberger, K.Q. (eds.) Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, 19–24 June 2016. JMLR Workshop and Conference Proceedings, vol. 48, pp. 1614–1623. JMLR.org (2016). http://proceedings.mlr.press/v48/martins16.html

Masoomi, A., Wu, C., Zhao, T., Wang, Z., Castaldi, P.J., Dy, J.G.: Instance-wise feature grouping. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (eds.) Advances in Neural Information Processing Systems, vol. 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020(December), pp. 6–12, 2020. virtual (2020). https://proceedings.neurips.cc/paper/2020/hash/9b10a919ddeb07e103dc05ff523afe38-Abstract.html

Molnar, C.: Interpretable machine learning. Lulu.com (2020)

Pan, F., Converse, T., Ahn, D., Salvetti, F., Donato, G.: Feature selection for ranking using boosted trees. In: Cheung, D.W., Song, I., Chu, W.W., Hu, X., Lin, J. (eds.) Proceedings of the 18th ACM Conference on Information and Knowledge Management, CIKM 2009, Hong Kong, China, 2–6 November 2009, pp. 2025–2028. ACM (2009). https://doi.org/10.1145/1645953.1646292

Pang, L., Xu, J., Ai, Q., Lan, Y., Cheng, X., Wen, J.: SetRank: learning a permutation-invariant ranking model for information retrieval. In: Huang, J.X., et al. (eds.) Proceedings of the 43rd International ACM SIGIR conference on research and development in Information Retrieval, SIGIR 2020, Virtual Event, China, 25–30 July 2020, pp. 499–508. ACM (2020). https://doi.org/10.1145/3397271.3401104

Pobrotyn, P., Bartczak, T., Synowiec, M., Bialobrzeski, R., Bojar, J.: Context-aware learning to rank with self-attention. CoRR abs/2005.10084 (2020). https://arxiv.org/abs/2005.10084

Purpura, A., Buchner, K., Silvello, G., Susto, G.A.: Neural feature selection for learning to rank. In: Hiemstra, D., Moens, M.-F., Mothe, J., Perego, R., Potthast, M., Sebastiani, F. (eds.) ECIR 2021. LNCS, vol. 12657, pp. 342–349. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-72240-1_34

Qin, T., Liu, T.: Introducing LETOR 4.0 datasets. CoRR abs/1306.2597 (2013). http://arxiv.org/abs/1306.2597

Qin, T., Liu, T., Xu, J., Li, H.: LETOR: a benchmark collection for research on learning to rank for information retrieval. Inf. Retr. 13(4), 346–374 (2010). https://doi.org/10.1007/S10791-009-9123-Y

Qin, Z., et al.: Are neural rankers still outperformed by gradient boosted decision trees? In: 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021. OpenReview.net (2021). https://openreview.net/forum?id=Ut1vF_q_vC

Rahangdale, A., Raut, S.A.: Deep neural network regularization for feature selection in learning-to-rank. IEEE Access 7, 53988–54006 (2019). https://doi.org/10.1109/ACCESS.2019.2902640

Ribeiro, M.T., Singh, S., Guestrin, C.: “why should I trust you?”: Explaining the predictions of any classifier. In: Krishnapuram, B., Shah, M., Smola, A.J., Aggarwal, C.C., Shen, D., Rastogi, R. (eds.) Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016, pp. 1135–1144. ACM (2016). https://doi.org/10.1145/2939672.2939778

Rigutini, L., Papini, T., Maggini, M., Scarselli, F.: SortNet: learning to rank by a neural-based sorting algorithm. CoRR abs/2311.01864 (2023). https://doi.org/10.48550/ARXIV.2311.01864

Rong, Y., et al.: Towards human-centered explainable AI: user studies for model explanations. CoRR abs/2210.11584 (2022). https://doi.org/10.48550/arXiv.2210.11584

Rudin, C.: Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1(5), 206–215 (2019). https://doi.org/10.1038/s42256-019-0048-x

Shrikumar, A., Greenside, P., Kundaje, A.: Learning important features through propagating activation differences. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017. Proceedings of Machine Learning Research, vol. 70, pp. 3145–3153. PMLR (2017). http://proceedings.mlr.press/v70/shrikumar17a.html

Simonyan, K., Vedaldi, A., Zisserman, A.: Deep inside convolutional networks: visualising image classification models and saliency maps. In: Bengio, Y., LeCun, Y. (eds.) 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014, Workshop Track Proceedings (2014). http://arxiv.org/abs/1312.6034

Sun, Z., Qin, T., Tao, Q., Wang, J.: Robust sparse rank learning for non-smooth ranking measures. In: Allan, J., Aslam, J.A., Sanderson, M., Zhai, C., Zobel, J. (eds.) Proceedings of the 32nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2009, Boston, MA, USA, 19–23 July 2009, pp. 259–266. ACM (2009). https://doi.org/10.1145/1571941.1571987

Sundararajan, M., Taly, A., Yan, Q.: Axiomatic attribution for deep networks. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017. Proceedings of Machine Learning Research, vol. 70, pp. 3319–3328. PMLR (2017). http://proceedings.mlr.press/v70/sundararajan17a.html

Taylor, M.J., Guiver, J., Robertson, S., Minka, T.: SoftRank: optimizing non-smooth rank metrics. In: Najork, M., Broder, A.Z., Chakrabarti, S. (eds.) Proceedings of the International Conference on Web Search and Web Data Mining, WSDM 2008, Palo Alto, California, USA, 11–12 February 2008, pp. 77–86. ACM (2008). https://doi.org/10.1145/1341531.1341544

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc.: Ser. B (Methodol.) 58(1), 267–288 (1996)

Vaswani, A., et al.: Attention is all you need. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems, vol. 30: Annual Conference on Neural Information Processing Systems 2017(December), pp. 4–9, 2017. Long Beach, CA, USA, pp. 5998–6008 (2017). https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

Wang, L., Lin, J., Metzler, D.: A cascade ranking model for efficient ranked retrieval. In: Ma, W., Nie, J., Baeza-Yates, R., Chua, T., Croft, W.B. (eds.) Proceeding of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2011, Beijing, China, 25–29 July 2011, pp. 105–114. ACM (2011). https://doi.org/10.1145/2009916.2009934

Wu, Q., Burges, C.J.C., Svore, K.M., Gao, J.: Adapting boosting for information retrieval measures. Inf. Retr. 13(3), 254–270 (2010). https://doi.org/10.1007/S10791-009-9112-1

Xia, F., Liu, T., Wang, J., Zhang, W., Li, H.: Listwise approach to learning to rank: theory and algorithm. In: Cohen, W.W., McCallum, A., Roweis, S.T. (eds.) Machine Learning, Proceedings of the Twenty-Fifth International Conference (ICML 2008), Helsinki, Finland, 5–9 June 2008. ACM International Conference Proceeding Series, vol. 307, pp. 1192–1199. ACM (2008). https://doi.org/10.1145/1390156.1390306

Xu, J., Li, H.: AdaRank: a boosting algorithm for information retrieval. In: Kraaij, W., de Vries, A.P., Clarke, C.L.A., Fuhr, N., Kando, N. (eds.) SIGIR 2007: Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Amsterdam, The Netherlands, 23–27 July 2007, pp. 391–398. ACM (2007). https://doi.org/10.1145/1277741.1277809

Xu, Z.E., Huang, G., Weinberger, K.Q., Zheng, A.X.: Gradient boosted feature selection. In: Macskassy, S.A., Perlich, C., Leskovec, J., Wang, W., Ghani, R. (eds.) The 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD 2014, New York, NY, USA - 24–27 August 2014, pp. 522–531. ACM (2014). https://doi.org/10.1145/2623330.2623635

Yoon, J., Jordon, J., van der Schaar, M.: INVASE: instance-wise variable selection using neural networks. In: 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019. OpenReview.net (2019). https://openreview.net/forum?id=BJg_roAcK7

Yue, Y., Finley, T., Radlinski, F., Joachims, T.: A support vector method for optimizing average precision. In: Kraaij, W., de Vries, A.P., Clarke, C.L.A., Fuhr, N., Kando, N. (eds.) SIGIR 2007: Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Amsterdam, The Netherlands, 23–27 July 2007, pp. 271–278. ACM (2007). https://doi.org/10.1145/1277741.1277790

Zhang, Z., Rudra, K., Anand, A.: Explain and predict, and then predict again. In: WSDM 2021, The Fourteenth ACM International Conference on Web Search and Data Mining, Virtual Event, Israel, 8–12 March 2021, pp. 418–426. ACM (2021). https://doi.org/10.1145/3437963.3441758

Zhang, Z., Setty, V., Anand, A.: SparCAssist: a model risk assessment assistant based on sparse generated counterfactuals. In: Amigó, E., Castells, P., Gonzalo, J., Carterette, B., Culpepper, J.S., Kazai, G. (eds.) SIGIR 2022: The 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022, pp. 3219–3223. ACM (2022). https://doi.org/10.1145/3477495.3531677

Zhuang, H., et al.: Interpretable ranking with generalized additive models. In: WSDM 2021, The Fourteenth ACM International Conference on Web Search and Data Mining, Virtual Event, Israel, 8–12 March 2021, pp. 499–507. ACM (2021). https://doi.org/10.1145/3437963.3441796

Acknowledgements

This work is partially supported by German Research Foundation (DFG), under the Project IREM with grant No. AN 996/1-1, and by the Netherlands Organisation for Scientific Research (NWO) under grant No. VI.Veni.222.269.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lyu, L., Roy, N., Oosterhuis, H., Anand, A. (2024). Is Interpretable Machine Learning Effective at Feature Selection for Neural Learning-to-Rank?. In: Goharian, N., et al. Advances in Information Retrieval. ECIR 2024. Lecture Notes in Computer Science, vol 14611. Springer, Cham. https://doi.org/10.1007/978-3-031-56066-8_29

Download citation

DOI: https://doi.org/10.1007/978-3-031-56066-8_29

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-56065-1

Online ISBN: 978-3-031-56066-8

eBook Packages: Computer ScienceComputer Science (R0)