Abstract

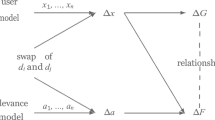

In most recent studies, gender bias in document ranking is evaluated with the NFaiRR metric, which measures bias in a ranked list by aggregating the unbiasedness scores of the individual ranked documents. This document-level perspective has a key limitation: individual documents in a ranked list might be biased while the ranked list as a whole balances the groups’ representations. To address this issue, we propose a novel metric called TExFAIR (term exposure-based fairness), which is based on two new extensions to a generic fairness evaluation framework, attention-weighted ranking fairness (AWRF): (i) an explicit definition of associating documents to groups based on probabilistic term-level associations, and (ii) a rank-biased discounting factor (RBDF) for counting non-representative documents towards the measurement of the fairness of a ranked list. With these extensions, TExFAIR assesses fairness based on the term-based representation of groups in a ranked list. We assess TExFAIR on the task of measuring gender bias in passage ranking, and study the relationship between TExFAIR and NFaiRR. Our experiments show that there is no strong correlation between TExFAIR and NFaiRR, which indicates that TExFAIR measures a different dimension of fairness than NFaiRR. With TExFAIR, we extend the AWRF framework to allow for the evaluation of fairness in settings with term-based representations of groups in documents in a ranked list.
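To make the list-level idea concrete, the following is a minimal sketch of attention-weighted group exposure with term-level document-to-group association. It is not the TExFAIR definition from the chapter: the term lists in `GROUP_TERMS`, the logarithmic `position_weight`, the function names, and the total-variation-based score in `list_fairness` are all assumptions chosen for illustration, and the RBDF component is not modelled (documents without group terms are simply skipped).

```python
import math
from collections import Counter

# Hypothetical group term lists, for illustration only (not the paper's lexicon).
GROUP_TERMS = {
    "female": {"she", "her", "woman", "women"},
    "male": {"he", "his", "man", "men"},
}


def group_association(tokens):
    """Share of a document's group terms that belong to each group.

    Returns None when the document contains no group terms at all.
    """
    counts = Counter(
        group
        for tok in tokens
        for group, terms in GROUP_TERMS.items()
        if tok in terms
    )
    total = sum(counts.values())
    if total == 0:
        return None
    return {group: counts[group] / total for group in GROUP_TERMS}


def position_weight(rank):
    """Assumed attention weight for a 1-indexed rank (logarithmic decay)."""
    return 1.0 / math.log2(rank + 1)


def group_exposure(ranked_docs):
    """Attention-weighted share of exposure each group receives in the list."""
    exposure = {group: 0.0 for group in GROUP_TERMS}
    for rank, doc in enumerate(ranked_docs, start=1):
        assoc = group_association(doc.lower().split())
        if assoc is None:
            continue  # no group terms: skipped in this sketch (no RBDF here)
        weight = position_weight(rank)
        for group, prob in assoc.items():
            exposure[group] += weight * prob
    total = sum(exposure.values())
    if total == 0:
        return None
    return {group: value / total for group, value in exposure.items()}


def list_fairness(ranked_docs, target=None):
    """1 minus the total variation distance between exposure and a target."""
    exposure = group_exposure(ranked_docs)
    if exposure is None:
        return None
    if target is None:
        # Assumed target: equal exposure for every group.
        target = {group: 1.0 / len(GROUP_TERMS) for group in GROUP_TERMS}
    distance = 0.5 * sum(abs(exposure[g] - target[g]) for g in GROUP_TERMS)
    return 1.0 - distance


if __name__ == "__main__":
    ranking = ["she said", "he said"]
    print(group_exposure(ranking))
    print(list_fairness(ranking))
```

In the two-document ranking above, each document is associated entirely with one group, yet the list-level exposure is split between both groups, with the higher-ranked document contributing more because of the assumed position weighting. This is the kind of case where a per-document aggregation and a list-level measure can disagree.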

Notes

- 1. Referring to the amount of attention an item (document) receives from users in the ranking.

- 2.

- 3.

- 4. As they have different position bias.

Acknowledgements

This work was supported by the DoSSIER project under the European Union’s Horizon 2020 research and innovation program, Marie Skłodowska-Curie grant agreement No. 860721; the Hybrid Intelligence Center, a 10-year program funded by the Dutch Ministry of Education, Culture and Science through the Netherlands Organisation for Scientific Research, https://hybrid-intelligence-centre.nl; project LESSEN with project number NWA.1389.20.183 of the research program NWA ORC 2020/21, which is (partly) financed by the Dutch Research Council (NWO); and the FINDHR (Fairness and Intersectional Non-Discrimination in Human Recommendation) project, which received funding from the European Union’s Horizon Europe research and innovation program under grant agreement No. 101070212.

All content represents the opinion of the authors, which is not necessarily shared or endorsed by their respective employers and/or sponsors.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Abolghasemi, A., Azzopardi, L., Askari, A., de Rijke, M., Verberne, S. (2024). Measuring Bias in a Ranked List Using Term-Based Representations. In: Goharian, N., et al. Advances in Information Retrieval. ECIR 2024. Lecture Notes in Computer Science, vol 14612. Springer, Cham. https://doi.org/10.1007/978-3-031-56069-9_1

DOI: https://doi.org/10.1007/978-3-031-56069-9_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-56068-2

Online ISBN: 978-3-031-56069-9

eBook Packages: Computer Science (R0)