Abstract

Based on the theories of Riemann surfaces, topology, and analytic functions, this paper proposes a novel method for dimensionality reduction. The approach uses FCA to merge highly correlated features locally, yielding approximately independent new features, and establishes a conformal homeomorphic function to achieve global dimensionality reduction for text data whose manifold is embedded in a Hausdorff space. During dimensionality reduction, the geometric and topological structure of the original data is preserved by the conformal homeomorphic function. The method is simple, effective, and of low complexity; it avoids the neighbor problem of nonlinear dimensionality reduction and is conducive to handling outlier data. Moreover, it extends to new text vectors and to new features arising from sub-vectors of new text vectors, and it supports incremental operation without involving existing documents. The mapping function has desirable properties that yield stable, reliable, and interpretable dimensionality reduction results. Experimental results on a construction laws and regulations dataset and the toutiao text dataset show that the technique is effective when combined with typical classifiers: Random Forest, Support Vector Machine, and Feedforward Neural Network.

References

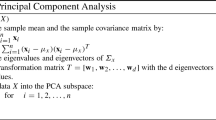

Beattie, J.R., Esmonde-White, F.S.W.L.: Exploration of principal component analysis: deriving principal component analysis visually using spectra. Appl. Spectroscopy 75(4), 361–375 (2021)

Gardner-Lubbe, S.: Linear discriminant analysis for multiple functional data analysis. J. Appl. Stat. 48(11), 1917–1944 (2021)

Dehak, N.: Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 19(4), 788–798 (2010)

Tharwat, A.: Independent component analysis: an introduction. Appl. Comput. Inform. 17(2), 222–249 (2021)

Genggeng, L., Lin, X., Chihua, C.: Unsupervised text feature learning via deep variational auto-encoder. Inf. Technol. Control 49(3), 421–437 (2020)

Moreira, L.A.S., Justel, C.M., de Oliveira, J.C., et al.: Development of a method for data dimensionality reduction in loop closure detection: an incremental approach. Robotica 39(4), 557–571 (2021)

Bickel, P.J., Kur, G., Nadler, B.: Projection pursuit in high dimensions. Pro. Natl. Acad. Sci. USA 115(37), 9151–9156 (2018)

Ingwer, B., Patrick, J.F.G.: Modern Multidimensionality Scaling: Theory and Application. Springer, New York (1997)

Tenenbaum, J.B., de Vin, S., John, C.L.: A global geometric framework for nonlinear dimensionality reduction. Science 290(22), 2319–2323 (2000)

Sam, T.R., Lawrence, K.S.: Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500), 2323–2326 (2000)

Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 15(6), 1373–1396 (2023)

He, X., Niyogi, P.: Locality preserving projections. Adv. Neural. Inf. Process. Syst. 16, 153–160 (2003)

Wu, T., Xiao, Y., Guo, M., Nie, F.: A general framework for dimensionality reduction of k-means clustering. J. Classif. 37(3), 616–631 (2019). https://doi.org/10.1007/s00357-019-09342-4

Junjun, P., Ng, M.K.: Coseparable nonnegative matrix factorization. SIAM J. Matrix Anal. Appl. 44(3), 1393–1420 (2023)

Zheng, X.H., Ma, Z.M., Che, H.J., et al.: HSIC regularized manifold learning. J. Intell. Fuzzy Syst. 36(6), 5547–5558 (2019)

Shuzhi, S.G., Hongsheng, H., Chengyao, S.: Geometrically local embedding in manifolds for dimension reduction. Pattern Recogn. 45(4), 1455–1470 (2012)

Yang, J.: KPCA plus LDA: a complete kernel Fisher discriminate framework for feature extraction and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 27(2), 230–244 (2005)

Cambria, E., Mazzocco, T., Hussain, A.: Application of multi-dimensional scaling and artificial neural networks for biologically inspired opinion mining. Biol Inspir Cognit. Arch. 4, 41–53 (2013)

Andrew, L., Harli, L., Jose, A., et al.: O(k)-Equivariant Dimensionality Reduction on Stiefel Manifolds. arXiv:2309.10775, https://arxiv.org/abs/2309.10775

Canyao, L., Jujian, L., Huimin, Z., et al.: Dimensionality reduction with extreme learning machine based on manifold preserving. In: 10th International Conference on Brain Inspired Cognitive Systems, pp. 128–138. Elsevier Rio Grande do Norte, Brazil (2021)

ShiZhong, L., XiaoJun, J., SuLei, Z.: The application study of entropy analysis method in feature extraction. J. North China Inst. Technol. 20(3), 278–281 (1999)

Rui, H., Mingyi, H., Saojun, Y.: A margin based feature extraction algorithm for the small sample size problem. Chin. J. Comput. 30(7), 1173–1178 (2007)

Daubechies, I.: The Wavelet Transform, Time-Frequency Localization and Signal Analysis. Princeton University Press, Princeton (2009)

Shuyin, X., Cheng, W., Guoyin, W., et al.: A Unified Granular-ball Learning Model of Pawlak Rough Set and Neighborhood Rough Set. arXiv:2201.03349, https://arxiv.org/abs/2201.03349

Xingrui, Y., Shouwei, Z., Ruxue, Z., et al.: BiLSTM-attention text classification model of improved BERT word vector. Transducer Microsyst. Technol. 42(10), 160–164 (2023)

Jaffe, A., Kluger, Y., Lindenbaum, O.: The spectral underpinning of word2vec. Front. Appl. Math. Stat. 6(3), 1–24 (2020)

Ketineni, S., Sheela, J.: Metaheuristic aided improved LSTM for multi-document summarization: a hybrid optimization model. J. Web Eng. 22(4), 701–730 (2023)

Gustave, C.: Cours d’topology. Higher Education Press, Beijing (2009)

Shichang, S.: Popular in the Modern Mathematical Theory. Shaanxi Science and Technology Press, Shaanxi (2000)

Daren, W.: Lectures on Differential Geometry. People’s Education Press, Beijing (1981)

Munkres, J.R.: Topology. 2nd ed., China Machine Press, Beijing (2006)

Saff, E.B., Snider, A.D.: Fundamentals of Complex Analysis with Applications to Engineering and Science, 3rd ed. China Machine Press, Beijing (2004)

Bianping, S., Dongli, C.: Complex Functions and Integral Transformations. Higher Education Press, Beijing (2022)

Simon, D.: Riemann Surface. Oxford University Press, Britain (2011)

Github. https://github.com/aceimnorstuvwxz/toutiao-text-classfication-dataset. Accessed 08 Jan 2024

Acknowledgments

This research was supported by the Special Projects in Key Areas for General Universities in Guangdong Province (2021ZDZX1077); the Natural Science Foundation of Guangdong Province of China (2020A1515010784); the Guangdong University of Science & Technology Quality Project Editor (GKZLGC2022255); the Guangdong University of Science & Technology Innovation and Improvement Project (GKY2022CQTD2); the Innovation and Improvement School Project from Guangdong University of Science & Technology (GKY-2019CQYJ-3); and the Social Sciences Project of Guangdong University of Science & Technology (GKY-2023KYZDW-6).

The support is gratefully acknowledged.

Copyright information

© 2024 IFIP International Federation for Information Processing

About this paper

Cite this paper

Su, B. et al. (2024). Hybrid Integrated Dimensionality Reduction Method Based on Conformal Homeomorphism Mapping. In: Shi, Z., Torresen, J., Yang, S. (eds) Intelligent Information Processing XII. IIP 2024. IFIP Advances in Information and Communication Technology, vol 703. Springer, Cham. https://doi.org/10.1007/978-3-031-57808-3_11

DOI: https://doi.org/10.1007/978-3-031-57808-3_11

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-57807-6

Online ISBN: 978-3-031-57808-3

eBook Packages: Computer Science (R0)