Abstract

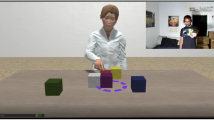

As multimodal interactions between humans and computers become more sophisticated, involving not only speech but also gestures, haptics, eye movement, and other input types, each modality introduces subtleties that can be misinterpreted without a deeper understanding of the agent’s mental state. In this paper, we argue that Simulation Theory of Mind (SToM) [23], interpreted within a model of embodied HCI [41, 42], can help model the capacity to attribute beliefs and intentions to oneself and others. We adopt a version of Dynamic Epistemic Logic that admits degrees of belief, reflecting the changing evidence available to an agent [5, 6]. This model can address the complexities of mutual perception and belief, and how a dynamic common ground is constructed and revised [15]. To demonstrate this, we apply the SToM model to the problem of Common Ground Tracking (CGT) in multi-party dialogues, focusing here on a joint problem-solving task called the Weights Task, where participants cooperate to find the weights of a set of blocks.
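To make the idea of evidence-graded belief and a shifting common ground concrete, the following is a minimal toy sketch in Python. It is not the formalism of the paper: it replaces Dynamic Epistemic Logic with simple evidence counts, and it treats common ground as the set of propositions every participant believes, ignoring higher-order mutual belief. The names `Agent`, `announce`, `common_ground`, the threshold `BELIEF_THRESHOLD`, and the proposition string `"red = 10g"` are all illustrative assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off: an agent "believes" a proposition when more than
# half of the evidence it has recorded supports that proposition.
BELIEF_THRESHOLD = 0.5


@dataclass
class Agent:
    name: str
    # proposition -> [supporting evidence count, opposing evidence count]
    evidence: dict = field(default_factory=dict)

    def observe(self, prop: str, supports: bool = True) -> None:
        """Record one piece of evidence for or against a proposition."""
        counts = self.evidence.setdefault(prop, [0, 0])
        counts[0 if supports else 1] += 1

    def degree(self, prop: str) -> float:
        """Fraction of recorded evidence supporting prop (0.0 if none)."""
        pro, con = self.evidence.get(prop, (0, 0))
        total = pro + con
        return pro / total if total else 0.0

    def believes(self, prop: str) -> bool:
        return self.degree(prop) > BELIEF_THRESHOLD


def announce(agents: list, prop: str, supports: bool = True) -> None:
    """Toy public update: every participant receives the same evidence."""
    for agent in agents:
        agent.observe(prop, supports)


def common_ground(agents: list) -> set:
    """Propositions that every agent currently believes (first-order only)."""
    believed = [{p for p in a.evidence if a.believes(p)} for a in agents]
    return set.intersection(*believed) if believed else set()


# Three participants in a Weights Task style scenario (values are made up).
p1, p2, p3 = Agent("P1"), Agent("P2"), Agent("P3")
group = [p1, p2, p3]

p1.observe("red = 10g")            # only P1 reads the scale
before = common_ground(group)      # nothing is shared yet

announce(group, "red = 10g")       # P1 states the reading aloud
after = common_ground(group)       # the proposition enters the common ground

p2.observe("red = 10g", supports=False)
p2.observe("red = 10g", supports=False)
doubted = common_ground(group)     # P2's counter-evidence removes it again
```

In this sketch a private observation changes only one agent's state, a public announcement changes everyone's, and later counter-evidence can lower one participant's degree of belief enough to retract a proposition from the shared set.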

Notes

1. The blocks are uniquely colored: red (r), yellow (y), blue (b), green (g), and purple (p). The scale is denoted s.

2. ELAN is an annotation tool for audio and video recordings. It lets users add a wide range of textual annotations to a recording, including sentences, individual words or glosses, comments, translations, and descriptions of features observed in the media.

References

Asher, N.: Common ground, corrections and coordination. J. Semant. 15, 239–299 (1998)

Baltag, A., Moss, L.S., Solecki, S.: The logic of public announcements, common knowledge, and private suspicions. In: Arló-Costa, H., Hendricks, V.F., van Benthem, J. (eds.) Readings in Formal Epistemology. SGTP, vol. 1, pp. 773–812. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-20451-2_38

Barsalou, L.W.: Perceptions of perceptual symbols. Behav. Brain Sci. 22(4), 637–660 (1999)

Belle, V., Bolander, T., Herzig, A., Nebel, B.: Epistemic planning: perspectives on the special issue. Artif. Intell. 316, 103842 (2023)

van Benthem, J., Fernández-Duque, D., Pacuit, E.: Evidence and plausibility in neighborhood structures. Ann. Pure Appl. Logic 165(1), 106–133 (2014)

van Benthem, J., Pacuit, E.: Dynamic logics of evidence-based beliefs. Stud. Logica. 99, 61–92 (2011)

Bolander, T.: Seeing is believing: formalising false-belief tasks in dynamic epistemic logic. In: Jaakko Hintikka on Knowledge and Game-theoretical Semantics, pp. 207–236 (2018)

Bolander, T., Andersen, M.B.: Epistemic planning for single-and multi-agent systems. J. Appl. Non-Classical Logics 21(1), 9–34 (2011)

Bolander, T., Jensen, M.H., Schwarzentruber, F.: Complexity results in epistemic planning. In: IJCAI, pp. 2791–2797 (2015)

Brutti, R., Donatelli, L., Lai, K., Pustejovsky, J.: Abstract meaning representation for gesture. In: Proceedings of the Thirteenth Language Resources and Evaluation Conference, pp. 1576–1583. European Language Resources Association, Marseille, France, June 2022

Clark, H.H., Brennan, S.E.: Grounding in communication. Perspect. Socially Shared Cogn. 13(1991), 127–149 (1991)

Dautenhahn, K.: Socially intelligent robots: dimensions of human-robot interaction. Philos. Trans. R. Soc. B: Biol. Sci. 362(1480), 679–704 (2007)

De Groote, P.: Type raising, continuations, and classical logic. In: Proceedings of the Thirteenth Amsterdam Colloquium, pp. 97–101 (2001)

Dey, I., Puntambekar, S.: Examining nonverbal interactions to better understand collaborative learning. In: Proceedings of the 16th International Conference on Computer-Supported Collaborative Learning-CSCL 2023, pp. 273–276. International Society of the Learning Sciences (2023)

Dissing, L., Bolander, T.: Implementing theory of mind on a robot using dynamic epistemic logic. In: IJCAI, pp. 1615–1621 (2020)

van Eijck, J.: Perception and change in update logic. In: van Eijck, J., Verbrugge, R. (eds.) Games, Actions and Social Software. LNCS, vol. 7010, pp. 119–140. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-29326-9_7

Feldman, J.: Embodied language, best-fit analysis, and formal compositionality. Phys. Life Rev. 7(4), 385–410 (2010)

Feldman, R.: Respecting the evidence. Philos. Perspect. 19, 95–119 (2005)

Geib, C., George, D., Khalid, B., Magnotti, R., Stone, M.: An integrated architecture for common ground in collaboration (2022)

Gianotti, M., Patti, A., Vona, F., Pentimalli, F., Barbieri, J., Garzotto, F.: Multimodal interaction for persons with autism: the 5A case study. In: Antona, M., Stephanidis, C. (eds.) Universal Access in Human-Computer Interaction, HCII 2023. LNCS, vol. 14020, pp. 581–600. Springer, Cham (2023). https://doi.org/10.1007/978-3-031-35681-0_38

Ginzburg, J.: Interrogatives: Questions, Facts and Dialogue. The Handbook of Contemporary Semantic Theory, pp. 359–423. Blackwell, Oxford (1996)

Ginzburg, J.: The Interactive Stance: Meaning for Conversation. OUP, Oxford (2012)

Goldman, A.I.: In defense of the simulation theory. Mind Lang. 7(1–2), 104–119 (1992)

Goldman, A.I.: Simulating Minds: The Philosophy, Psychology, and Neuroscience of Mindreading. Oxford University Press, Oxford (2006)

Gopnik, A.: How we know our minds: the illusion of first-person knowledge of intentionality. Behav. Brain Sci. 16(1), 1–14 (1993)

Gordon, R.M.: Folk psychology as simulation. Mind Lang. 1(2), 158–171 (1986)

Heal, J.: Simulation, Theory, and Content. Theories of Theories of Mind, pp. 75–89 (1996)

Henderson, M., Thomson, B., Williams, J.D.: The second dialog state tracking challenge. In: Proceedings of the 15th Annual Meeting of the Special Interest Group on Discourse and Dialogue (SIGDIAL), pp. 263–272 (2014)

Khebour, I., et al.: The weights task dataset: a multimodal dataset of collaboration in a situated task. J. Open Humanities Data 10 (2024)

Kolve, E., et al.: AI2-THOR: an interactive 3D environment for visual AI. arXiv preprint arXiv:1712.05474 (2017)

Krishnaswamy, N., et al.: Diana’s World: a situated multimodal interactive agent. In: AAAI Conference on Artificial Intelligence (AAAI): Demos Program. AAAI (2020)

Krishnaswamy, N., Pustejovsky, J.: VoxSim: a visual platform for modeling motion language. In: Proceedings of COLING 2016, The 26th International Conference on Computational Linguistics: Technical Papers. ACL (2016)

Krishnaswamy, N., Pustejovsky, J.: Multimodal continuation-style architectures for human-robot interaction. arXiv preprint arXiv:1909.08161 (2019)

Krishnaswamy, N., Pickard, W., Cates, B., Blanchard, N., Pustejovsky, J.: VoxWorld platform for multimodal embodied agents. In: LREC Proceedings, vol. 13 (2022)

Miller, P.W.: Body language in the classroom. Tech. Connecting Educ. Careers 80(8), 28–30 (2005)

Narayanan, S.: Mind changes: a simulation semantics account of counterfactuals. Cognitive Science (2010)

Pacuit, E.: Neighborhood Semantics for Modal Logic. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67149-9

Plaza, J.: Logics of public communications. In: Proceedings 4th International Symposium on Methodologies for Intelligent Systems, pp. 201–216 (1989)

Premack, D., Woodruff, G.: Does the chimpanzee have a theory of mind? Behav. Brain Sci. 1(4), 515–526 (1978)

Pustejovsky, J., Krishnaswamy, N.: VoxML: a visualization modeling language. arXiv preprint arXiv:1610.01508 (2016)

Pustejovsky, J., Krishnaswamy, N.: Embodied human computer interaction. KI-Künstliche Intelligenz 35(3–4), 307–327 (2021)

Pustejovsky, J., Krishnaswamy, N.: The role of embodiment and simulation in evaluating HCI: theory and framework. In: Duffy, V.G. (ed.) HCII 2021. LNCS, vol. 12777, pp. 288–303. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-77817-0_21

Radu, I., Tu, E., Schneider, B.: Relationships between body postures and collaborative learning states in an augmented reality study. In: Bittencourt, I., Cukurova, M., Muldner, K., Luckin, R., Millán, E. (eds.) Artificial Intelligence in Education: 21st International Conference, AIED 2020, Ifrane, Morocco, 6–10 July 2020, Proceedings, Part II 21, pp. 257–262. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-52240-7_47

Savva, M., et al.: Habitat: a platform for embodied AI research. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 9339–9347 (2019)

Schneider, B., Pea, R.: Does seeing one another’s gaze affect group dialogue? A computational approach. J. Learn. Analytics 2(2), 107–133 (2015)

Sousa, A., Young, K., D’aquin, M., Zarrouk, M., Holloway, J.: Introducing CALMED: multimodal annotated dataset for emotion detection in children with autism. In: Antona, M., Stephanidis, C. (eds.) International Conference on Human-Computer Interaction, pp. 657–677. Springer, Cham (2023). https://doi.org/10.1007/978-3-031-35681-0_43

Stalnaker, R.: Common ground. Linguist. Philos. 25(5–6), 701–721 (2002)

Sun, C., Shute, V.J., Stewart, A., Yonehiro, J., Duran, N., D’Mello, S.: Towards a generalized competency model of collaborative problem solving. Comput. Educ. 143, 103672 (2020)

Suzuki, R., Karim, A., Xia, T., Hedayati, H., Marquardt, N.: Augmented reality and robotics: a survey and taxonomy for AR-enhanced human-robot interaction and robotic interfaces. In: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, pp. 1–33 (2022)

Tam, C., Brutti, R., Lai, K., Pustejovsky, J.: Annotating situated actions in dialogue. In: Proceedings of the 4th International Workshop on Designing Meaning Representation (2023)

Tolzin, A., Körner, A., Dickhaut, E., Janson, A., Rummer, R., Leimeister, J.M.: Designing pedagogical conversational agents for achieving common ground. In: Gerber, A., Baskerville, R. (eds.) International Conference on Design Science Research in Information Systems and Technology, pp. 345–359. Springer, Cham (2023). https://doi.org/10.1007/978-3-031-32808-4_22

Tu, J., Rim, K., Pustejovsky, J.: Competence-based question generation. In: Proceedings of the 29th International Conference on Computational Linguistics, pp. 1521–1533 (2022)

Van Fraassen, B.C.: Belief and the will. J. Philos. 81(5), 235–256 (1984)

VanderHoeven, H., et al.: Multimodal design for interactive collaborative problem-solving support. In: HCII 2024. Springer, Cham (2024)

Wellman, H.M., Carey, S., Gleitman, L., Newport, E.L., Spelke, E.S.: The Child’s Theory of Mind. The MIT Press, Cambridge (1990)

Wimmer, H., Perner, J.: Beliefs about beliefs: representation and constraining function of wrong beliefs in young children’s understanding of deception. Cognition 13(1), 103–128 (1983)

Wittenburg, P., Brugman, H., Russel, A., Klassmann, A., Sloetjes, H.: ELAN: a professional framework for multimodality research. In: 5th LREC 2006, pp. 1556–1559 (2006)

Won, A.S., Bailenson, J.N., Janssen, J.H.: Automatic detection of nonverbal behavior predicts learning in dyadic interactions. IEEE Trans. Affect. Comput. 5(2), 112–125 (2014)

Xia, F., Zamir, A.R., He, Z., Sax, A., Malik, J., Savarese, S.: Gibson ENV: real-world perception for embodied agents. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 9068–9079 (2018)

Acknowledgements

This work was supported in part by NSF grant DRL 2019805 to Dr. Pustejovsky at Brandeis University and Dr. Krishnaswamy at Colorado State University. It was also supported in part by NSF grant CNS 2033932 to Dr. Pustejovsky. We would like to thank the reviewers for their comments and suggestions. The views expressed herein are ours alone.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhu, Y. et al. (2024). Modeling Theory of Mind in Multimodal HCI. In: Kurosu, M., Hashizume, A. (eds) Human-Computer Interaction. HCII 2024. Lecture Notes in Computer Science, vol 14684. Springer, Cham. https://doi.org/10.1007/978-3-031-60405-8_14

DOI: https://doi.org/10.1007/978-3-031-60405-8_14

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-60404-1

Online ISBN: 978-3-031-60405-8

eBook Packages: Computer Science, Computer Science (R0)