Abstract

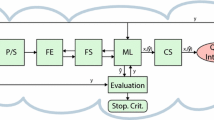

This paper examines zero-shot object detection, a fundamental challenge in computer vision. Traditional approaches are limited in recognizing novel objects, which motivates the exploration of alternative strategies. The paper introduces a Human-in-the-Loop (HITL) strategy that combines machine learning with human intelligence to improve the recognition and localization of unseen objects in visual data. The strategy comprises three components: a deep learning base built on models such as convolutional neural networks (CNNs), human-in-the-loop iterations with strategic input from annotators, and adaptive model refinement based on human annotations. Insights from diverse case studies, including Human-in-the-Loop in population health and the application of digital twins, are integrated to provide a nuanced understanding of the strategy’s effectiveness. The discussion examines the strengths and limitations of the strategy, addressing scalability and applicability across domains. The exploration of adaptive model refinement, exemplified by classical works and recent developments, underscores its role in enhancing adaptability to diverse objects. By combining machine learning and human expertise, the HITL strategy aims to advance object recognition, and the discussion and case studies underscore its potential impact on computer vision capabilities and on future developments in the field.
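The three components named in the abstract (a model, annotator input, and refinement from annotations) can be illustrated with a minimal sketch. This is not the authors' implementation: `ToyDetector`, `hitl_round`, the `annotate` callback, and the 0.5 confidence threshold are all hypothetical names and values chosen for illustration, and a lookup table stands in for a CNN-based detector.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Detection:
    image_id: str
    label: str
    score: float  # confidence in [0, 1]


@dataclass
class ToyDetector:
    """Hypothetical stand-in for a CNN-based detector (assumption, not from the paper)."""
    known: Dict[str, str] = field(default_factory=dict)

    def predict(self, image_id: str) -> Detection:
        # Seen images get a confident label; unseen ones get a zero-confidence guess.
        if image_id in self.known:
            return Detection(image_id, self.known[image_id], 1.0)
        return Detection(image_id, "unknown", 0.0)

    def refine(self, annotations: Dict[str, str]) -> None:
        # "Adaptive refinement" here is just memorizing the human labels.
        self.known.update(annotations)


def hitl_round(
    model: ToyDetector,
    image_ids: List[str],
    annotate: Callable[[str], str],
    threshold: float = 0.5,
) -> int:
    """Run one human-in-the-loop iteration and return the number of images annotated."""
    # 1. Model pass: collect the images the model is unsure about.
    uncertain = [i for i in image_ids if model.predict(i).score < threshold]
    # 2. Human pass: ask the annotator only about the uncertain images.
    annotations = {i: annotate(i) for i in uncertain}
    # 3. Refinement pass: fold the human labels back into the model.
    model.refine(annotations)
    return len(annotations)
```

In this sketch, a second call to `hitl_round` on the same images requests no further annotations, because every previously uncertain image now has a confident label. A real system would retrain or fine-tune the detector rather than memorize labels.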

References

Chen, Z.: Human-in-the-loop Machine Learning System via Model Interpretability. Duke University, Durham, NC, USA (2023)

Moqadam, S.B., Delle, K., Schorling, U., Asheghabadi, A.S., Norouzi, F., Xu, J.: Reproducing tactile and proprioception based on the human-in-the-closed-loop conceptual approach. IEEE Access 11, 41894–41905 (2023)

Mosqueira-Rey, E., Hernández-Pereira, E., Alonso-Ríos, D., Bobes-Bascarán, J., Fernández-Leal, Á.: Human-in-the-loop machine learning: a state of the art. Artif. Intell. Rev. 56(4), 3005–3054 (2023)

Herrmann, T., Pfeiffer, S.: Keeping the organization in the loop: a socio-technical extension of human-centered artificial intelligence. AI Soc. 38(4), 1523–1542 (2023)

Chen, L., Wang, J., Guo, B., Chen, L.: Human-in-the-loop machine learning with applications for population health. CCF Trans. Pervasive Comput. Interact. 5(1), 1–12 (2023)

Bononi, L., et al.: Digital twin collaborative platforms: applications to humans-in-the-loop crafting of urban areas. IEEE Consumer Electron. Mag. 12(6), 38–46 (2023)

Lee, H., Park, S.: Sensing-aware deep reinforcement learning with HCI-based human-in-the-loop feedback for autonomous nonlinear drone mobility control. IEEE Access 12, 1727–1736 (2024)

Pookpanich, P., Siriborvornratanakul, T.: Offensive language and hate speech detection using deep learning in football news live streaming chat on YouTube in Thailand. Soc. Netw. Anal. Min. 14(1), 18 (2023)

Holzinger, A., et al.: Human-in-the-loop integration with domain-knowledge graphs for explainable federated deep learning. In: CD-MAKE, pp. 45–64 (2023)

Zhao, Z., Xu, P., Scheidegger, C., Ren, L.: Human-in-the-loop extraction of interpretable concepts in deep learning models. IEEE Trans. Vis. Comput. Graph. 28(1), 780–790 (2022)

Sharif, M., Erdogmus, D., Amato, C., Padir, T.: End-to-end grasping policies for human-in-the-loop robots via deep reinforcement learning. In: ICRA 2021, pp. 2768–2774 (2021)

Kerdvibulvech, C.: Human hand motion recognition using an extended particle filter. In: Perales, F.J., Santos-Victor, J. (eds.) AMDO 2014. LNCS, vol. 8563, pp. 71–80. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-08849-5_8

Gao, X., Si, J., Wen, Y., Li, M., Huang, H.: Reinforcement learning control of robotic knee with human-in-the-loop by flexible policy iteration. IEEE Trans. Neural Networks Learn. Syst. 33(10), 5873–5887 (2022)

D’Amato, A.M., Ridley, A.J., Bernstein, D.S.: Retrospective-cost-based adaptive model refinement for the ionosphere and thermosphere. Stat. Anal. Data Min. 4(4), 446–458 (2011)

Ghassemi, P., Lulekar, S.S., Chowdhury, S.: Adaptive model refinement with batch Bayesian sampling for optimization of bio-inspired flow tailoring. In: AIAA Aviation and Aeronautics Forum and Exposition (AIAA AVIATION Forum 2019), 17–21 June 2019, Dallas, Texas (2019)

Zeng, F., Zhang, W., Li, J., Zhang, T., Yan, C.: Adaptive model refinement approach for Bayesian uncertainty quantification in turbulence model. AIAA J. 60(6), 3502–3516 (2022)

Songja, R., Promboot, I., Haetanurak, B., et al.: Deepfake AI images: should deepfakes be banned in Thailand? AI Ethics (2023)

Acknowledgments

The research presented herein was partially supported by a research grant from the Research Center, NIDA (National Institute of Development Administration).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kerdvibulvech, C., Li, Q. (2024). Empowering Zero-Shot Object Detection: A Human-in-the-Loop Strategy for Unveiling Unseen Realms in Visual Data. In: Duffy, V.G. (eds) Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. HCII 2024. Lecture Notes in Computer Science, vol 14711. Springer, Cham. https://doi.org/10.1007/978-3-031-61066-0_14

DOI: https://doi.org/10.1007/978-3-031-61066-0_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-61065-3

Online ISBN: 978-3-031-61066-0

eBook Packages: Computer Science, Computer Science (R0)