Abstract

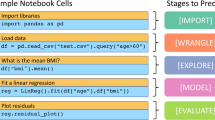

Understanding large-scale scientific software is a significant challenge because of its diverse codebases, extensive code length, and varied target computing architectures. The emergence of generative AI, specifically large language models (LLMs), opens new pathways for understanding such complex scientific codes. This paper presents S3LLM, an LLM-based framework that lets users examine source code, code metadata, and summarized information, together with textual technical reports, in an interactive, conversational manner through a user-friendly interface. S3LLM uses open-source LLaMA-2 models to enhance code analysis by automatically translating natural language queries into domain-specific language (DSL) queries. S3LLM also handles diverse metadata types, including DOT, SQL, and customized formats. Furthermore, S3LLM incorporates retrieval-augmented generation (RAG) and LangChain to query extensive documents directly. S3LLM demonstrates the potential of locally deployed open-source LLMs for rapidly understanding large-scale scientific computing software without requiring extensive coding expertise, making the process more efficient and effective. S3LLM is available at https://github.com/ResponsibleAILab/s3llm.
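The abstract states that S3LLM uses RAG to query long technical documents. The Python sketch below illustrates only the retrieval half of that idea. It splits text into overlapping chunks, ranks the chunks against a question by bag-of-words cosine similarity, and packs the best matches into a prompt. It is not S3LLM's implementation. The paper relies on LangChain and LLaMA-2, whereas this sketch substitutes plain term-frequency vectors for learned embeddings and omits the LLM call entirely. The helper names (`chunk`, `retrieve`, `build_prompt`) and the example passages are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Crude lowercase word tokenizer; a real system would use the model's tokenizer.
    return re.findall(r"[a-z0-9_]+", text.lower())


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    # Split a long document into overlapping windows of `size` words.
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors (0.0 if either is empty).
    dot = sum(count * b[term] for term, count in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank chunks by similarity to the query and keep the top k (ties keep input order).
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    # Assemble a grounded prompt; the LLM generation step itself is omitted here.
    context = "\n---\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up passages standing in for a technical report.
    report = [
        "The land model computes canopy photosynthesis and stomatal conductance.",
        "SPEL ports E3SM land model functions to GPUs using OpenACC directives.",
        "Snow hydrology routines track water and ice in each snow layer.",
    ]
    passages = [c for doc in report for c in chunk(doc)]
    question = "How are land model functions ported to GPUs?"
    print(build_prompt(question, retrieve(question, passages, k=1)))
```

In practice the term-frequency scoring would usually give way to an embedding model and a vector store. The prompt-assembly step would stay essentially the same.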

This manuscript has been authored by UT-Battelle, LLC under contract DE-AC05-00OR22725 with the US Department of Energy (DOE). The US government retains and the publisher, by accepting the article for publication, acknowledges that the US government retains a nonexclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this manuscript, or allow others to do so, for US government purposes. DOE will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).


Notes

- 1.

- 2. A software toolkit for porting E3SM land models onto GPUs using OpenACC.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shaik, K. et al. (2024). S3LLM: Large-Scale Scientific Software Understanding with LLMs Using Source, Metadata, and Document. In: Franco, L., de Mulatier, C., Paszynski, M., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M.A. (eds) Computational Science – ICCS 2024. ICCS 2024. Lecture Notes in Computer Science, vol 14834. Springer, Cham. https://doi.org/10.1007/978-3-031-63759-9_27

DOI: https://doi.org/10.1007/978-3-031-63759-9_27

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-63758-2

Online ISBN: 978-3-031-63759-9

eBook Packages: Computer Science, Computer Science (R0)