Abstract

Shapley values are among the most popular tools for explaining predictions of black-box machine learning models. However, their high computational cost motivates the use of sampling approximations, inducing a considerable degree of uncertainty. To stabilize these model explanations, we propose ControlSHAP, an approach based on the Monte Carlo technique of control variates. Our methodology is applicable to any machine learning model and requires virtually no extra computation or modeling effort. On several high-dimensional datasets, we find it can produce dramatic reductions in the variability of Shapley estimates.

Notes

1. https://github.com/jeremy-goldwasser/ControlSHAP contains our code and experimental results.

References

Aas, K., Jullum, M., Løland, A.: Explaining individual predictions when features are dependent: more accurate approximations to Shapley values. Artif. Intell. 298, 103502 (2021)

Belle, V., Papantonis, I.: Principles and practice of explainable machine learning. Front. Big Data 4, 688969 (2021)

Berger, A., et al.: A comprehensive TCGA pan-cancer molecular study of gynecologic and breast cancers. Cancer Cell 33 (2018)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Campbell, T.W., Roder, H., Georgantas, R.W., III., Roder, J.: Exact Shapley values for local and model-true explanations of decision tree ensembles. Mach. Learn. Appl. 9, 100345 (2022)

Charnes, A., Golany, B., Keane, M., Rousseau, J.: Extremal principle solutions of games in characteristic function form: core, Chebychev and Shapley value generalizations. In: Sengupta, J.K., Kadekodi, G.K. (eds.) Econometrics of Planning and Efficiency, pp. 123–133. Springer, Dordrecht (1988). https://doi.org/10.1007/978-94-009-3677-5_7

Chen, H., Janizek, J.D., Lundberg, S.M., Lee, S.: True to the model or true to the data? CoRR abs/2006.16234 (2020)

Chen, J., Song, L., Wainwright, M.J., Jordan, M.I.: L-Shapley and C-Shapley: efficient model interpretation for structured data. CoRR abs/1808.02610 (2018)

Covert, I., Lee, S.: Improving KernelSHAP: practical Shapley value estimation via linear regression. CoRR abs/2012.01536 (2020)

Covert, I., Lundberg, S.M., Lee, S.: Understanding global feature contributions through additive importance measures. CoRR abs/2004.00668 (2020)

Dubey, R., Chandani, A.: Application of machine learning in banking and finance: a bibliometric analysis. Int. J. Data Anal. Tech. Strateg. 14(3) (2022)

Fang, K.T., Kotz, S., Ng, K.W.: Symmetric Multivariate and Related Distributions. Chapman and Hall, Boca Raton (1990)

Ferdous, M., Debnath, J., Chakraborty, N.R.: Machine learning algorithms in healthcare: a literature survey. In: 11th International Conference on Computing, Communication and Networking Technologies, ICCCNT 2020, Kharagpur, India, 1–3 July 2020, pp. 1–6. IEEE (2020)

Ghorbani, A., Zou, J.Y.: Neuron Shapley: discovering the responsible neurons. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (eds.) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, 6–12 December 2020, virtual (2020)

Goli, A., Mohammadi, H.: Developing a sustainable operational management system using hybrid Shapley value and Multimoora method: case study petrochemical supply chain. Environ. Dev. Sustain. 24(9), 10540–10569 (2022). https://doi.org/10.1007/s10668-021-01844-

Hofmann, H.: Statlog (German credit data) (1994)

Hooker, G., Mentch, L., Zhou, S.: Unrestricted permutation forces extrapolation: variable importance requires at least one more model, or there is no free variable importance. Stat. Comput. 31, 1–16 (2021)

Jethani, N., Sudarshan, M., Covert, I.C., Lee, S., Ranganath, R.: FastSHAP: real-time Shapley value estimation. In: The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, 25–29 April 2022. OpenReview.net (2022)

Kohavi, R.: Census income (1996)

Laugel, T., Lesot, M., Marsala, C., Detyniecki, M.: Issues with post-hoc counterfactual explanations: a discussion. CoRR abs/1906.04774 (2019)

Leisch, F., Weingessel, A., Hornik, K.: On the generation of correlated artificial binary data. In: SFB Adaptive Information Systems and Modelling in Economics and Management Science, vol. 20 (1970)

Lundberg, S.M., et al.: From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2(1), 56–67 (2020)

Lundberg, S.M., Lee, S.: A unified approach to interpreting model predictions. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, 4–9 December 2017, Long Beach, CA, USA, pp. 4765–4774 (2017)

Mandalapu, V., Elluri, L., Vyas, P., Roy, N.: Crime prediction using machine learning and deep learning: a systematic review and future directions. IEEE Access 11, 60153–60170 (2023)

Mitchell, R., Cooper, J., Frank, E., Holmes, G.: Sampling permutations for Shapley value estimation. J. Mach. Learn. Res. 23, 43:1–43:46 (2022)

Moro, S., Cortez, P., Rita, P.: A data-driven approach to predict the success of bank telemarketing. Decis. Support Syst. 62, 22–31 (2014)

Narayanam, R., Narahari, Y.: Determining the top-k nodes in social networks using the Shapley value. In: Padgham, L., Parkes, D.C., Müller, J.P., Parsons, S. (eds.) 7th International Joint Conference on Autonomous Agents and Multiagent Systems (AAMAS 2008), Estoril, Portugal, 12–16 May 2008, vol. 3, pp. 1509–1512. IFAAMAS (2008)

Oates, C.J., Girolami, M., Chopin, N.: Control functionals for Monte Carlo integration. J. R. Stat. Soc. Ser. B Stat. Methodol. 79(3), 695–718 (2017)

Ribeiro, M.T., Singh, S., Guestrin, C.: “Why should I trust you?”: explaining the predictions of any classifier. In: Krishnapuram, B., Shah, M., Smola, A.J., Aggarwal, C.C., Shen, D., Rastogi, R. (eds.) Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016, pp. 1135–1144. ACM (2016)

Rudin, C.: Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1(5), 206–215 (2019)

Shapley, L.: A value for n-person games (1952)

Song, E., Nelson, B.L., Staum, J.: Shapley effects for global sensitivity analysis: theory and computation. SIAM/ASA J. Uncertain. Quantification 4(1), 1060–1083 (2016)

Strumbelj, E., Kononenko, I.: An efficient explanation of individual classifications using game theory. J. Mach. Learn. Res. 11, 1–18 (2010)

Strumbelj, E., Kononenko, I.: Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 41(3), 647–665 (2014)

Sundararajan, M., Najmi, A.: The many Shapley values for model explanation. In: Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13–18 July 2020, Virtual Event. Proceedings of Machine Learning Research, vol. 119, pp. 9269–9278. PMLR (2020)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc. Ser. B (Methodol.) 58(1), 267–288 (1996)

Tomita, T.M., et al.: Sparse projection oblique randomer forests. J. Mach. Learn. Res. 21(1), 4193–4231 (2020)

Verma, S., Dickerson, J.P., Hines, K.: Counterfactual explanations for machine learning: a review. CoRR abs/2010.10596 (2020)

Zhou, Y., Zhou, Z., Hooker, G.: Approximation trees: statistical stability in model distillation. CoRR abs/1808.07573 (2018)

Zhou, Z., Hooker, G., Wang, F.: S-LIME: stabilized-LIME for model explanation. In: Zhu, F., Ooi, B.C., Miao, C. (eds.) KDD 2021: The 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual Event, Singapore, 14–18 August 2021, pp. 2429–2438. ACM (2021)

Ethics declarations

Disclosure of Interests

We have no competing interests to disclose.

Appendix

A Exact Shapley Values

A.1 Shapley Value, Assuming Feature Independence

Proof

Assume features are sampled independently in the value function. Consider a second-order Taylor approximation to f at x:
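In standard form, writing \(g\) for the approximation and \(x'\) for the point of evaluation (both names are assumptions here, chosen to match the notation of Appendix A.2), the expansion reads:

```latex
\[
g(x') = f(x) + \nabla f(x)^T (x' - x) + \frac{1}{2} (x' - x)^T \, \nabla^2 f(x) \, (x' - x)
\]
```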

Let \(J = \nabla f(x)\), \(H = \nabla ^2 f(x)\), \(\varSigma =\text {Cov}(X)\), and \(\sigma ^2_{k\ell }=\text {Cov}(X_k, X_\ell )\). The Shapley value function is

where the quadratic term is equal to

Recall \(\displaystyle \phi _j(x) := \frac{1}{d} \sum _{S \subseteq [d]\backslash \{j\}} {d-1\atopwithdelims ()|S|}^{-1} \big (v_x(S \cup \{j\}) - v_x(S)\big )\). The difference in value functions is

Define the Shapley weight \(w_S = \frac{1}{d}{d-1\atopwithdelims ()|S|}^{-1}\).

Noting subsets of equal size have the same Shapley weight, we can easily show \(\displaystyle \sum _{S \subseteq [d]\backslash \{j\}}w_S = 1\).

With a bit more arithmetic, we can show \(\displaystyle \sum _{S \subseteq [d]\backslash \{j, k\}}w_S = \frac{1}{2}\).
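Both identities follow from counting subsets by size. There are \({d-1\atopwithdelims ()s}\) subsets of \([d]\backslash \{j\}\) of size \(s\), so each of the \(d\) sizes contributes \(1/d\). Excluding a second feature \(k\) leaves \({d-2\atopwithdelims ()s}\) subsets of size \(s\), each size contributing \((d-1-s)/(d(d-1))\), which sums to \(1/2\). The short Python check below confirms both sums exactly for small \(d\); the function names are hypothetical and the enumeration is a brute-force sketch, not part of the paper's code.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def shapley_weight(d, size):
    """w_S = (1/d) * C(d-1, |S|)^{-1}, as an exact fraction."""
    return Fraction(1, d * comb(d - 1, size))


def weight_sum(d, excluded):
    """Sum of w_S over all subsets S of [d] that avoid the `excluded` features."""
    rest = [i for i in range(d) if i not in excluded]
    total = Fraction(0)
    for size in range(len(rest) + 1):
        for _ in combinations(rest, size):
            total += shapley_weight(d, size)
    return total


if __name__ == "__main__":
    for d in range(2, 9):
        # Excluding only j gives 1; excluding j and k gives 1/2.
        print(d, weight_sum(d, {0}), weight_sum(d, {0, 1}))
```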

Plugging the results of (11) and (12) into (10) yields the final expression for the Shapley value:

\(\square \)
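The argument can be checked numerically by comparing a brute-force Shapley computation against a closed form. The sketch below makes several assumptions that are not taken from the paper: features outside \(S\) are drawn from their joint marginal distribution regardless of \(x_S\) (one reading of the independence assumption), so that \(E[(X_k - x_k)(X_\ell - x_\ell )] = \sigma ^2_{k\ell } + (\mu _k - x_k)(\mu _\ell - x_\ell )\) with \(\mu = E[X]\); \(H\) is symmetric; and the expression in `shapley_closed_form` is derived under that reading rather than copied from the source. All function names are hypothetical.

```python
from itertools import combinations
from math import comb

import numpy as np


def value(S, x, mu, Sigma, f0, J, H):
    """v_x(S) for the quadratic surrogate; features outside S are averaged out."""
    out = np.array([k for k in range(len(x)) if k not in S], dtype=int)
    m = (mu - x)[out]                                   # E[X_k - x_k], k outside S
    second = Sigma[np.ix_(out, out)] + np.outer(m, m)   # E[(X_k - x_k)(X_l - x_l)]
    return f0 + J[out] @ m + 0.5 * np.sum(H[np.ix_(out, out)] * second)


def shapley_brute_force(x, mu, Sigma, f0, J, H):
    """Exact Shapley values by enumerating every subset (feasible for small d only)."""
    d = len(x)
    phi = np.zeros(d)
    for j in range(d):
        others = [k for k in range(d) if k != j]
        for size in range(d):
            w = 1.0 / (d * comb(d - 1, size))
            for S in combinations(others, size):
                S = set(S)
                gain = value(S | {j}, x, mu, Sigma, f0, J, H) - value(S, x, mu, Sigma, f0, J, H)
                phi[j] += w * gain
    return phi


def shapley_closed_form(x, mu, Sigma, J, H):
    """Assumed closed form: J_j d_j - 0.5 * sum_k H_jk (d_j d_k + Sigma_jk), d = x - mu."""
    delta = x - mu
    return J * delta - 0.5 * np.sum(H * (np.outer(delta, delta) + Sigma), axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 4
    A, B = rng.normal(size=(d, d)), rng.normal(size=(d, d))
    H, Sigma = A + A.T, B @ B.T
    x, mu, J = rng.normal(size=d), rng.normal(size=d), rng.normal(size=d)
    print(shapley_brute_force(x, mu, Sigma, 0.0, J, H))
    print(shapley_closed_form(x, mu, Sigma, J, H))
```

The linear term receives total weight 1 and each cross term total weight 1/2, which is where the two weight sums enter.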

A.2 Shapley Value, Correlated Features

Consider the linear model \(g(x') = \beta ^T x' + b\), where \(x' \sim \mathcal {N}(\mu , \varSigma )\). (For our problem, \(\beta = \nabla f(x)\) and \(b = f(x)\).)

Let \(P_S\) be the projection matrix selecting set S; define \(R_S = P_{\bar{S}}^T P_{\bar{S}} \varSigma P_S^T (P_S \varSigma P_S^T)^{-1} P_S\) and \(Q_S = P_S^T P_S\). We consider the set of permutations of [d], each of which indexes a subset S as the features that appear before j. Averaging over all such permutations, the Shapley values of g(x) [1, 7, 23] are

Lastly, we observe that \(C_j = -D_j\). For each subset S, the R terms cancel out; \(Q_{S^C} + Q_S = I_d\), so \(Q_{\{S\cup j\}^C} - Q_{S^C} = -(Q_{S\cup j} - Q_S)\). This yields our expression for the dependent Shapley value:
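The matrices \(Q_S\) and \(R_S\) can be built directly from their definitions. The sketch below assumes the value function is the Gaussian conditional expectation, \(v_x(S) = \beta ^T E[X \mid X_S = x_S] + b\) with \(E[X \mid X_S = x_S] = Q_S x + Q_{\bar{S}} \mu + R_S (x - \mu )\), and computes Shapley values by enumerating subsets rather than through the closed form. The function names are hypothetical, and this is an illustration, not the authors' implementation.

```python
from itertools import combinations
from math import comb

import numpy as np


def selector(S, d):
    """P_S: the |S| x d matrix that picks out the coordinates in S."""
    return np.eye(d)[np.array(sorted(S), dtype=int)]


def Q(S, d):
    """Q_S = P_S^T P_S: diagonal 0/1 matrix marking the features in S."""
    P = selector(S, d)
    return P.T @ P


def R(S, Sigma):
    """R_S = P_Sbar^T P_Sbar Sigma P_S^T (P_S Sigma P_S^T)^{-1} P_S."""
    d = Sigma.shape[0]
    if len(S) == 0:
        return np.zeros((d, d))          # nothing to condition on
    P_S = selector(S, d)
    P_c = selector(set(range(d)) - set(S), d)
    return P_c.T @ P_c @ Sigma @ P_S.T @ np.linalg.inv(P_S @ Sigma @ P_S.T) @ P_S


def conditional_mean(S, x, mu, Sigma):
    """E[X | X_S = x_S] for X ~ N(mu, Sigma)."""
    d = len(x)
    comp = set(range(d)) - set(S)
    return Q(S, d) @ x + Q(comp, d) @ mu + R(S, Sigma) @ (x - mu)


def shapley_linear_gaussian(x, mu, Sigma, beta, b=0.0):
    """Brute-force Shapley values of g(x') = beta^T x' + b (small d only)."""
    d = len(x)
    v = lambda S: beta @ conditional_mean(S, x, mu, Sigma) + b
    phi = np.zeros(d)
    for j in range(d):
        others = [k for k in range(d) if k != j]
        for size in range(d):
            w = 1.0 / (d * comb(d - 1, size))
            for S in combinations(others, size):
                phi[j] += w * (v(set(S) | {j}) - v(set(S)))
    return phi
```

Two sanity checks follow from the definitions: the values sum to \(\beta ^T (x - \mu )\), and with a diagonal \(\varSigma \) every \(R_S\) vanishes, so \(\phi _j(x)\) reduces to \(\beta _j (x_j - \mu _j)\).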

B Comprehensive Results

We display results across all 5 datasets and 3 machine learning predictors. All datasets were binary classification problems, so we fit the same three model types to each. For logistic regression and random forest, we used the scikit-learn (sklearn) implementations with default hyperparameters. For the neural network, we fit a two-layer MLP in PyTorch with 50 neurons in the hidden layer and hyperbolic tangent activation functions.

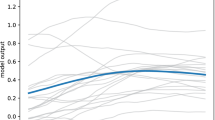

Figure 6 displays the variance reductions of our ControlSHAP methods in the four settings: Independent vs Dependent Features, and Shapley Sampling vs KernelSHAP. The error bars span the 25th to 75th percentiles of the variance reductions for 40 held-out samples.

Figure 7 compares the average number of changes in rankings between the original and ControlSHAP Shapley estimates. Specifically, we look at the Shapley estimates obtained via KernelSHAP, assuming correlated features.

C Anticipated Correlation

Figure 8 compares the observed and anticipated variance reductions across two combinations of dataset and predictor. Recall that the variance reduction of the control variate estimate for \(\phi _j(x)\) is \(\rho ^2(\hat{\phi }_j(x)^\text {model}, \hat{\phi }_j(x)^\text {approx})\), where \(\rho \) is Pearson’s correlation coefficient. We compute the sample correlation coefficient of the Shapley values on sample x as follows:

We average this across the 50 iterations to obtain a single estimate \(\hat{\rho }\) of the correlation. The plots display the median and error bars for \(\hat{\rho }^2(\hat{\phi }_j(x)^\text {model}, \hat{\phi }_j(x)^\text {approx})\) across 40 samples. Once again, the error bars span the 25th to 75th percentiles.
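The \(\rho ^2\) relationship is a general property of control variates and can be illustrated with a small simulation. The sketch below is a generic textbook example, not the ControlSHAP code. The `target` draws stand in for the model's Shapley samples, the `control` draws stand in for the approximation's samples whose exact mean is known, and the bivariate normal setup and all function names are assumptions made for illustration.

```python
import numpy as np


def control_variate_estimate(target, control, control_mean):
    """Sample mean of `target`, adjusted by a correlated `control` with known mean."""
    cov = np.cov(target, control)        # 2 x 2 sample covariance matrix
    alpha = cov[0, 1] / cov[1, 1]        # estimated optimal coefficient
    return target.mean() - alpha * (control.mean() - control_mean)


def simulate_variance_reduction(rho, n=200, reps=2000, seed=0):
    """Fraction of the plain estimator's variance removed by the adjustment."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    plain, adjusted = [], []
    for _ in range(reps):
        draws = rng.multivariate_normal([0.0, 0.0], cov, size=n)
        target, control = draws[:, 0], draws[:, 1]
        plain.append(target.mean())
        adjusted.append(control_variate_estimate(target, control, control_mean=0.0))
    return 1.0 - np.var(adjusted) / np.var(plain)


if __name__ == "__main__":
    for rho in (0.5, 0.9):
        # The observed reduction should be close to rho squared.
        print(rho, rho ** 2, simulate_variance_reduction(rho))
```

Because the coefficient is estimated from the same draws, the observed reduction falls slightly short of \(\rho ^2\) at small sample sizes.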

D Comparing KernelSHAP Variance Estimators

Section 4.2 of the paper details methods for computing the variance and covariance between KernelSHAP estimates. Bootstrapping and the least squares covariance produce extremely similar estimates. In turn, these produce ControlSHAP estimates that reduce variance by roughly the same amount, as shown in Fig. 9. This indicates that both methods are appropriate choices.

In contrast, we were not able to get the grouped method to work reliably. Its variance estimates were somewhat erratic, as they are drawn from a heavy-tailed \(\chi ^2\) distribution (Fig. 10). As a result, its ControlSHAP estimates were occasionally more variable than the original KernelSHAP values themselves.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Goldwasser, J., Hooker, G. (2024). Stabilizing Estimates of Shapley Values with Control Variates. In: Longo, L., Lapuschkin, S., Seifert, C. (eds) Explainable Artificial Intelligence. xAI 2024. Communications in Computer and Information Science, vol 2154. Springer, Cham. https://doi.org/10.1007/978-3-031-63797-1_21

DOI: https://doi.org/10.1007/978-3-031-63797-1_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-63796-4

Online ISBN: 978-3-031-63797-1

eBook Packages: Computer Science, Computer Science (R0)