Abstract

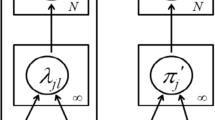

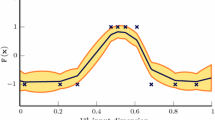

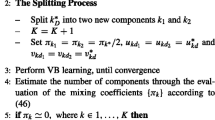

This proof-of-concept work generalizes the concept of invariance, as used in contrastive learning, to fully probabilistic models (e.g., mixture models) that explicitly describe data distributions in an interpretable fashion and whose main applications are density estimation (e.g., outlier detection), sampling, and tractable inference. Invariance allows probabilistic models to operate at a lower effective model complexity and therefore to deal with more complex (image) data. In this article, we propose iGMM, a Gaussian Mixture Model (GMM) that explicitly incorporates invariance into its loss, which is a generalization of the conventional GMM log-likelihood. By constructing hierarchies of conventional GMM and iGMM instances, we obtain invariance properties reminiscent of simple and complex cells in the mammalian visual cortex. We show, by experiments on the MNIST and FashionMNIST datasets, that GMM-iGMM hierarchies can faithfully sample from learned data distributions even if the iGMM is invariant to some aspects of the data, and we demonstrate that outlier detection performance is strongly enhanced in GMM-iGMM hierarchies.
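The abstract describes the iGMM loss as a generalization of the conventional GMM log-likelihood and names outlier detection as a main application. As a point of reference, the sketch below computes that conventional objective for a GMM and uses each sample's log-density as an outlier score. The diagonal covariances, the function names (`gmm_log_likelihood`, `mean_log_likelihood`, `is_outlier`) and the fixed threshold are illustrative assumptions; this is not the iGMM loss itself, whose invariance mechanism is not specified here.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def gmm_log_likelihood(x: Vector, weights: Sequence[float],
                       means: Sequence[Vector], variances: Sequence[Vector]) -> float:
    """Log-density log p(x) of one sample under a diagonal-covariance GMM.

    log p(x) = log sum_k pi_k * N(x; mu_k, diag(var_k)), evaluated with the
    log-sum-exp trick so that far-away samples do not underflow to log(0).
    """
    log_terms = []
    for pi_k, mu_k, var_k in zip(weights, means, variances):
        # Log of a Gaussian with diagonal covariance: sum of 1-D log-densities.
        log_gauss = sum(
            -0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
            for xi, m, v in zip(x, mu_k, var_k)
        )
        log_terms.append(math.log(pi_k) + log_gauss)
    top = max(log_terms)
    return top + math.log(sum(math.exp(t - top) for t in log_terms))


def mean_log_likelihood(data: Sequence[Vector], weights, means, variances) -> float:
    """Conventional GMM objective: average log-likelihood over a dataset."""
    return sum(gmm_log_likelihood(x, weights, means, variances) for x in data) / len(data)


def is_outlier(x: Vector, weights, means, variances, threshold: float) -> bool:
    """Flag x as an outlier when its log-density falls below a chosen threshold."""
    return gmm_log_likelihood(x, weights, means, variances) < threshold


if __name__ == "__main__":
    # Hypothetical two-component model in 2-D with unit variances.
    w = [0.5, 0.5]
    mu = [[0.0, 0.0], [5.0, 5.0]]
    var = [[1.0, 1.0], [1.0, 1.0]]
    print(gmm_log_likelihood([0.0, 0.0], w, mu, var))   # close to log(0.5) - log(2*pi)
    print(is_outlier([20.0, -20.0], w, mu, var, -10.0))  # far from both components
```

In practice the threshold would be chosen from the log-densities of held-out inlier data (for instance a low percentile) rather than fixed by hand.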

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Gepperth, A. (2024). Probabilistic Models with Invariance. In: Villmann, T., Kaden, M., Geweniger, T., Schleif, FM. (eds) Advances in Self-Organizing Maps, Learning Vector Quantization, Interpretable Machine Learning, and Beyond. WSOM+ 2024. Lecture Notes in Networks and Systems, vol 1087. Springer, Cham. https://doi.org/10.1007/978-3-031-67159-3_21

DOI: https://doi.org/10.1007/978-3-031-67159-3_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-67158-6

Online ISBN: 978-3-031-67159-3

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)