Abstract

Graph Neural Networks (GNNs) have emerged as powerful models for graph learning. Distributing training across multiple computing nodes is the most promising way to cope with ever-growing real-world graphs. However, existing adversarial attack methods on GNNs neglect the characteristics and applications of the distributed setting, which leads to suboptimal attack performance and low efficiency when targeting distributed GNN training.

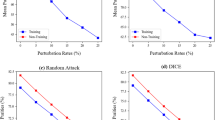

In this study, we introduce Disttack, the first adversarial attack framework for distributed GNN training, which exploits the frequent gradient updates that characterize distributed systems. Specifically, Disttack corrupts distributed GNN training by injecting adversarial perturbations into a single computing node. The subgraphs on that node are precisely perturbed to induce abnormal gradient ascent during backpropagation, which disrupts gradient synchronization between computing nodes and significantly degrades the performance of the trained GNN. We evaluate Disttack on four large real-world graphs by attacking five widely adopted GNNs. Experimental results show that, compared with the state-of-the-art attack method, Disttack amplifies model accuracy degradation by 2.75× and achieves a 17.33× speedup on average while remaining unnoticeable.
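To see why corrupting a single computing node can affect the whole model, consider synchronous data-parallel training: every worker computes a gradient on its local partition, the gradients are averaged (an all-reduce), and every replica applies the same update. The sketch below is a toy under loudly stated assumptions. It uses a one-parameter linear model rather than a GNN, and a hypothetical `poison` function that flips and amplifies the targets on one worker. That is simple label poisoning, not Disttack's subgraph perturbation method. It only illustrates how one worker's abnormal gradient propagates through synchronization to every replica.

```python
from typing import List, Tuple

# One worker's local partition: (feature x, target y) pairs.
Shard = List[Tuple[float, float]]


def local_gradient(w: float, shard: Shard) -> float:
    """Gradient d/dw of the mean squared error (w*x - y)^2 over one shard."""
    return sum(2.0 * x * (w * x - y) for x, y in shard) / len(shard)


def all_reduce_mean(grads: List[float]) -> float:
    """Stand-in for synchronous all-reduce: every worker receives the mean gradient."""
    return sum(grads) / len(grads)


def train(shards: List[Shard], lr: float = 0.05, steps: int = 500) -> float:
    w = 0.0  # replicated parameter, identical on every worker
    for _ in range(steps):
        grads = [local_gradient(w, s) for s in shards]  # computed independently per worker
        w -= lr * all_reduce_mean(grads)  # all replicas apply the same synchronized update
    return w


def make_shard() -> Shard:
    # Clean data generated by the ground-truth weight w* = 2.
    return [(x, 2.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]


def poison(shard: Shard, scale: float = 5.0) -> Shard:
    # Hypothetical attack: flip and amplify targets so this worker's
    # gradient pushes w away from the clean optimum.
    return [(x, -scale * y) for x, y in shard]


clean = [make_shard() for _ in range(4)]
attacked = [poison(make_shard())] + [make_shard() for _ in range(3)]

w_clean = train(clean)
w_attacked = train(attacked)
print(f"clean: {w_clean:.3f}, one poisoned worker: {w_attacked:.3f}")
# prints: clean: 2.000, one poisoned worker: -1.000
```

With four equally sized shards, the averaged gradient in the attacked run is proportional to `w + 1`, so all replicas converge to -1 instead of 2, even though three of the four workers hold clean data.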


Notes

1. Open-sourced at https://github.com/zhangyxrepo/Disttack.


Acknowledgement

This work was supported by National Key Research and Development Program (Grant No. 2023YFB4502305), the National Natural Science Foundation of China (Grant No. 62202451), CAS Project for Young Scientists in Basic Research (Grant No. YSBR-029), and CAS Project for Youth Innovation Promotion Association.


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, Y. et al. (2024). Disttack: Graph Adversarial Attacks Toward Distributed GNN Training. In: Carretero, J., Shende, S., Garcia-Blas, J., Brandic, I., Olcoz, K., Schreiber, M. (eds) Euro-Par 2024: Parallel Processing. Euro-Par 2024. Lecture Notes in Computer Science, vol 14802. Springer, Cham. https://doi.org/10.1007/978-3-031-69766-1_21


DOI: https://doi.org/10.1007/978-3-031-69766-1_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-69765-4

Online ISBN: 978-3-031-69766-1

eBook Packages: Computer Science, Computer Science (R0)