Abstract

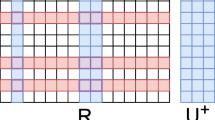

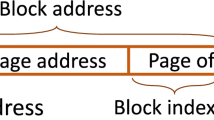

Attention-based models, such as Transformer and BERT, have achieved remarkable success across a wide range of tasks. However, their deployment is hindered by high memory requirements, long inference latency, and significant power consumption. One promising way to accelerate attention is resistive random access memory (RRAM), which provides processing-in-memory (PIM) capability. However, existing RRAM-based accelerators suffer from costly write operations. Accordingly, we propose Watt, a write-optimized RRAM-based accelerator for attention-based models. Watt reduces the amount of intermediate data written to the RRAM crossbars and mitigates the resulting workload imbalance among crossbars. Specifically, exploiting the importance of individual tokens and the similarity between tokens in a sequence, we design an importance detector and a similarity detector that substantially compress the intermediate data \(K^T\) and \(V\) written to the crossbars. Moreover, because many vectors in \(K^T\) and \(V\) are pruned, the number of vectors written to the crossbars varies from one inference to the next, which leads to workload imbalance among crossbars. To address this issue, we propose a workload-aware dynamic scheduler comprising a top-k engine and a remapping engine. The scheduler first uses the top-k engine to rank the total write count of each crossbar and the write count of each inference, and then uses the remapping engine to assign inference tasks to crossbars. Experimental results show that Watt achieves average speedups of \(6.5\times\), \(4.0\times\), and \(2.1\times\) over the state-of-the-art accelerators Sanger, TransPIM, and ReTransformer, respectively. It also achieves average energy savings of \(18.2\times\), \(3.2\times\), and \(2.8\times\) relative to the same three accelerators.
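The abstract names the importance detector, the similarity detector, and the top-k and remapping engines, but does not give their internals. The Python sketch below is one plausible reading under loudly stated assumptions, not Watt's actual design. The per-token importance scores are taken as given inputs (for instance, accumulated attention probabilities). Similarity is measured as cosine similarity between key vectors. A token is simply skipped when it is unimportant or near-duplicate; how a skipped token's contribution is later recovered is out of scope. The scheduler greedily pairs the inferences with the most pending writes with the crossbars that have the fewest accumulated writes. The names `select_tokens`, `schedule`, `imp_threshold`, and `sim_threshold` are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def select_tokens(keys: List[Vector], importance: List[float],
                  imp_threshold: float, sim_threshold: float) -> List[int]:
    """Hypothetical importance + similarity filter (not Watt's actual logic).

    A token is skipped if its importance score is below imp_threshold
    (stand-in for the importance detector) or if its key has cosine
    similarity >= sim_threshold with an already-kept key (stand-in for
    the similarity detector). Only kept tokens' K^T columns and V rows
    would be written to the crossbars.
    """
    kept: List[int] = []
    for i, k in enumerate(keys):
        if importance[i] < imp_threshold:
            continue  # low-importance token: no crossbar write
        if any(cosine(k, keys[j]) >= sim_threshold for j in kept):
            continue  # near-duplicate of a kept token: no crossbar write
        kept.append(i)
    return kept


def schedule(crossbar_writes: List[int],
             inference_writes: List[int]) -> List[Tuple[int, int]]:
    """Hypothetical greedy remapping: heaviest inference -> least-written crossbar.

    Returns (inference_id, crossbar_id) pairs and adds each assigned
    inference's writes to crossbar_writes in place. At most one inference
    is assigned per crossbar per call; unassigned inferences are left
    for a later call.
    """
    k = min(len(crossbar_writes), len(inference_writes))
    # Ranking step (stand-in for the top-k engine): the k inferences with
    # the most pending writes and the k crossbars with the fewest past writes.
    heavy = sorted(range(len(inference_writes)),
                   key=lambda i: inference_writes[i], reverse=True)[:k]
    light = sorted(range(len(crossbar_writes)),
                   key=lambda c: crossbar_writes[c])[:k]
    # Assignment step (stand-in for the remapping engine).
    pairs = list(zip(heavy, light))
    for inf, xb in pairs:
        crossbar_writes[xb] += inference_writes[inf]
    return pairs


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.5, 0.5]]
    importance = [0.9, 0.8, 0.05, 0.6]
    print(select_tokens(keys, importance, 0.1, 0.95))  # [0, 3]

    xb_writes = [100, 20, 50]
    print(schedule(xb_writes, [30, 80, 10, 60]))  # [(1, 1), (3, 2), (0, 0)]
    print(xb_writes)  # [130, 100, 110]
```

In the demo, token 1 is dropped as a near-duplicate of token 0 and token 2 as unimportant. The greedy pairing narrows the spread of accumulated crossbar writes from 80 (100 vs. 20) to 30 (130 vs. 100). That spread is the kind of imbalance the scheduler targets, though the paper's exact ranking and assignment policy is not specified in the abstract.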

Zhuoran Song is the corresponding author.

References

https://www.synopsys.com/community/university-program/teaching-resources.html

Arnab, A., et al.: ViViT: a video vision transformer. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6836–6846 (2021)

Balasubramonian, R., et al.: CACTI 7: new tools for interconnect exploration in innovative off-chip memories. ACM Trans. Architect. Code Optim. (TACO) 14(2), 1–25 (2017)

Chen, P.Y., et al.: NeuroSim: a circuit-level macro model for benchmarking neuro-inspired architectures in online learning. IEEE TCAD 37(12), 3067–3080 (2018)

Devlin, J., et al.: BERT: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Dong, X., et al.: NVSim: a circuit-level performance, energy, and area model for emerging nonvolatile memory. IEEE TCAD 31(7), 994–1007 (2012)

Ham, T.J., et al.: \(A^3\): accelerating attention mechanisms in neural networks with approximation. In: 2020 HPCA, pp. 328–341. IEEE (2020)

Han, K., et al.: A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 45(1), 87–110 (2022)

Liu, F., et al.: Spark: scalable and precision-aware acceleration of neural networks via efficient encoding. In: 2024 IEEE International Symposium on High-Performance Computer Architecture (HPCA), pp. 1029–1042 (2024)

Lu, L., et al.: Sanger: a co-design framework for enabling sparse attention using reconfigurable architecture. In: MICRO-54: 54th Annual IEEE/ACM International Symposium on Microarchitecture, pp. 977–991 (2021)

Niu, D., et al.: Design of cross-point metal-oxide ReRAM emphasizing reliability and cost. In: 2013 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), pp. 17–23. IEEE (2013)

Otter, D.W., et al.: A survey of the usages of deep learning for natural language processing. IEEE Trans. Neural Netw. Learn. Syst. 32(2), 604–624 (2020)

Radford, A., et al.: Language models are unsupervised multitask learners. OpenAI blog 1(8), 9 (2019)

Rajpurkar, P., Zhang, J., Lopyrev, K., Liang, P.: SQuAD: 100,000+ questions for machine comprehension of text. arXiv preprint arXiv:1606.05250 (2016)

Shafiee, A., et al.: ISAAC: a convolutional neural network accelerator with in-situ analog arithmetic in crossbars. ACM SIGARCH Comput. Architect. News 44(3), 14–26 (2016)

Song, L.H., et al.: PipeLayer: a pipelined ReRAM-based accelerator for deep learning. In: 2017 HPCA, pp. 541–552. IEEE (2017)

Wang, H., et al.: SpAtten: efficient sparse attention architecture with cascade token and head pruning. In: 2021 HPCA, pp. 97–110. IEEE (2021)

Wen, W., et al.: Renew: enhancing lifetime for ReRAM crossbar based neural network accelerators. In: 2019 IEEE 37th International Conference on Computer Design (ICCD), pp. 487–496. IEEE (2019)

Yang, X., et al.: ReTransformer: ReRAM-based processing-in-memory architecture for transformer acceleration. In: Proceedings of the 39th International Conference on Computer-Aided Design, pp. 1–9 (2020)

You, H., et al.: ViTCoD: vision transformer acceleration via dedicated algorithm and accelerator co-design. In: 2023 HPCA, pp. 273–286. IEEE (2023)

Zhang, X., et al.: HyAcc: a hybrid CAM-MAC RRAM-based accelerator for recommendation model. In: 2023 ICCD, pp. 375–382. IEEE (2023)

Zhou, M., et al.: TransPIM: a memory-based acceleration via software-hardware co-design for transformer. In: 2022 HPCA, pp. 1071–1085. IEEE (2022)

Zokaee, F., et al.: Mitigating voltage drop in resistive memories by dynamic reset voltage regulation and partition reset. In: 2020 HPCA, pp. 275–286. IEEE (2020)

Acknowledgements

This work is partly supported by the National Natural Science Foundation of China (Grant No. 62202288, 61972242).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, X. et al. (2024). Watt: A Write-Optimized RRAM-Based Accelerator for Attention. In: Carretero, J., Shende, S., Garcia-Blas, J., Brandic, I., Olcoz, K., Schreiber, M. (eds) Euro-Par 2024: Parallel Processing. Euro-Par 2024. Lecture Notes in Computer Science, vol 14802. Springer, Cham. https://doi.org/10.1007/978-3-031-69766-1_8

DOI: https://doi.org/10.1007/978-3-031-69766-1_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-69765-4

Online ISBN: 978-3-031-69766-1

eBook Packages: Computer Science, Computer Science (R0)