Abstract

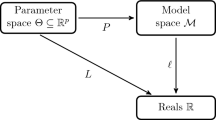

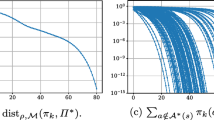

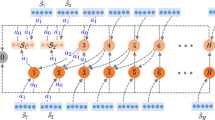

Policy gradient methods are among the most important techniques in reinforcement learning. Despite the inherently non-concave nature of policy optimization, these methods behave well both in practice and in theory. Hence, it is important to study the non-concave optimization landscape. This paper aims to provide a comprehensive landscape analysis of the objective function optimized by stochastic policy gradient methods. Using tools borrowed from statistics and topology, we prove a uniform convergence result for the empirical objective function (and its gradient, Hessian, and stationary points) to the corresponding population counterparts. Specifically, we derive \(\tilde{O}\big(\sqrt{|\mathcal{S}||\mathcal{A}|}/((1-\gamma)\sqrt{n})\big)\) rates of convergence, where \(n\) is the sample size, \(\mathcal{S}\) the state space, \(\mathcal{A}\) the action space, and \(\gamma\) the discount factor. Furthermore, we prove a one-to-one correspondence between the non-degenerate stationary points of the population objective and those of the empirical objective. In particular, our findings are agnostic to the choice of algorithm and hold for a wide range of gradient-based methods. Consequently, we are able to recover and improve numerous existing results through the vanilla policy gradient. To the best of our knowledge, this is the first work to theoretically characterize the optimization landscapes of stochastic policy gradient methods.
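To give a feel for how the stated rate scales, the following is a minimal sketch that evaluates \(\sqrt{|\mathcal{S}||\mathcal{A}|}/((1-\gamma)\sqrt{n})\) numerically. It is not taken from the paper: the function names `rate_scale` and `min_samples` are hypothetical, constants and logarithmic factors hidden by \(\tilde{O}\) are ignored, and it assumes that the factor \((1-\gamma)\sqrt{n}\) sits entirely in the denominator.

```python
import math


def rate_scale(num_states: int, num_actions: int, gamma: float, n: int) -> float:
    """Leading-order scale sqrt(|S||A|) / ((1 - gamma) * sqrt(n)).

    Assumption: constants and log factors hidden by the O-tilde are dropped,
    so only ratios between two calls are meaningful, not absolute values.
    """
    if num_states <= 0 or num_actions <= 0 or n <= 0:
        raise ValueError("num_states, num_actions and n must be positive")
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must lie in [0, 1)")
    return math.sqrt(num_states * num_actions) / ((1.0 - gamma) * math.sqrt(n))


def min_samples(num_states: int, num_actions: int, gamma: float, eps: float) -> int:
    """Smallest n for which rate_scale(...) <= eps, obtained by inverting the formula."""
    if eps <= 0.0:
        raise ValueError("eps must be positive")
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must lie in [0, 1)")
    return math.ceil(num_states * num_actions / ((1.0 - gamma) ** 2 * eps ** 2))


if __name__ == "__main__":
    base = rate_scale(10, 4, 0.9, 10_000)
    quadrupled = rate_scale(10, 4, 0.9, 40_000)
    # Quadrupling the sample size halves the scale (1/sqrt(n) dependence).
    print(base, quadrupled, base / quadrupled)
    print(min_samples(10, 4, 0.9, 0.1))
```

Under these assumptions, the sample size needed for a fixed accuracy grows linearly in \(|\mathcal{S}||\mathcal{A}|\) and quadratically in the effective horizon \(1/(1-\gamma)\).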

Notes

1. For rewards in \([R_{\min }, R_{\max }]\), simply rescale these bounds.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, X. (2024). Landscape Analysis of Stochastic Policy Gradient Methods. In: Bifet, A., Davis, J., Krilavičius, T., Kull, M., Ntoutsi, E., Žliobaitė, I. (eds) Machine Learning and Knowledge Discovery in Databases. Research Track. ECML PKDD 2024. Lecture Notes in Computer Science, vol 14942. Springer, Cham. https://doi.org/10.1007/978-3-031-70344-7_1

DOI: https://doi.org/10.1007/978-3-031-70344-7_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-70343-0

Online ISBN: 978-3-031-70344-7

eBook Packages: Computer Science (R0)