Abstract

Reliably capturing predictive uncertainty is indispensable for deploying machine learning (ML) models in safety-critical domains. The most commonly used approaches to uncertainty quantification are, however, either computationally costly at inference time or incapable of distinguishing between different types of uncertainty (i.e., aleatoric and epistemic). In this paper, we tackle this issue using the Dempster-Shafer theory of evidence, which has only recently gained attention as a tool to estimate uncertainty in ML. By training a neural network to return a generalized probability measure and combining it with conformal prediction, we obtain set predictions with a guaranteed, user-specified confidence. We test our method on various datasets and empirically show that it reflects uncertainty more reliably than a calibrated classifier with softmax output: at the same bounded error level, our approach yields smaller and hence more informative prediction sets, in particular for samples with high epistemic uncertainty. To deal with the exponential scaling inherent to classifiers within Dempster-Shafer theory, we introduce a second approach with reduced complexity, which also returns smaller sets than the comparative method, even on large classification tasks with more than 40 distinct labels. Our results indicate that the proposed methods are promising approaches to obtaining reliable and informative predictions in the presence of both aleatoric and epistemic uncertainty with only a single forward pass through the network.
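
To make the notion of set predictions with a user-specified confidence more concrete, the following is a minimal sketch of standard split conformal prediction applied to per-class scores. It is not the method proposed in the paper: the nonconformity score used here (one minus the score of the true label) is a common textbook choice, and the function names `conformal_threshold` and `prediction_sets` are hypothetical. The sketch assumes a held-out calibration set that is exchangeable with the test data.

```python
import math
import numpy as np

def conformal_threshold(cal_probs, cal_labels, alpha):
    """Split-conformal score threshold for a target error level alpha.

    cal_probs:  array (n, n_classes) of per-class scores on calibration data.
    cal_labels: array (n,) of true class indices.
    Assumption: nonconformity score = 1 - score of the true label.
    """
    n = len(cal_labels)
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample corrected rank of the calibration quantile.
    k = math.ceil((n + 1) * (1.0 - alpha))
    if k > n:
        # Too few calibration points for this alpha: keep every label.
        return float("inf")
    return float(np.sort(scores)[k - 1])

def prediction_sets(test_probs, threshold):
    """Boolean mask (n_test, n_classes): True where a label is in the set."""
    return (1.0 - test_probs) <= threshold

# Toy usage with made-up numbers (illustrative only).
cal_probs = np.array([
    [0.90, 0.05, 0.05],
    [0.10, 0.80, 0.10],
    [0.20, 0.20, 0.60],
    [0.30, 0.30, 0.40],
])
cal_labels = np.array([0, 1, 2, 0])
threshold = conformal_threshold(cal_probs, cal_labels, alpha=0.5)
sets = prediction_sets(np.array([[0.7, 0.2, 0.1]]), threshold)
print(threshold, sets)
```

Under the exchangeability assumption, sets built this way contain the true label with probability at least 1 - alpha on average over calibration and test draws. The size of the sets then depends on how informative the underlying scores are, which is the quantity the abstract compares across methods.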

References

Aggarwal, C.C., Kong, X., Gu, Q., Han, J., Philip, S.Y.: Active learning: a survey. In: Data Classification: Algorithms and Applications, pp. 571–605. Chapman and Hall/CRC Press (2014). https://doi.org/10.1201/b17320

Balasubramanian, V., Ho, S.S., Vovk, V.: Conformal Prediction for Reliable Machine Learning: Theory, Adaptations and Applications. Newnes (2014). https://doi.org/10.1016/C2012-0-00234-7

Bates, S., Angelopoulos, A., Lei, L., Malik, J., Jordan, M.: Distribution-free, risk-controlling prediction sets. J. ACM (JACM) 68(6), 1–34 (2021). https://doi.org/10.1145/3478535

Bengs, V., Hüllermeier, E., Waegeman, W.: Pitfalls of epistemic uncertainty quantification through loss minimisation. In: Neural Information Processing Systems (2022)

Bengs, V., Hüllermeier, E., Waegeman, W.: On second-order scoring rules for epistemic uncertainty quantification. In: Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J. (eds.) Proceedings of the 40th International Conference on Machine Learning, vol. 202, pp. 2078–2091. PMLR (2023)

Breiman, L.: Bagging predictors. Mach. Learn. 24, 123–140 (1996). https://doi.org/10.1007/BF00058655

Cella, L., Martin, R.: Validity, consonant plausibility measures, and conformal prediction. Int. J. Approximate Reasoning 141, 110–130 (2022). https://doi.org/10.1016/j.ijar.2021.07.013

Chow, C.: On optimum recognition error and reject tradeoff. IEEE Trans. Inf. Theory 16(1), 41–46 (1970). https://doi.org/10.1109/TIT.1970.1054406

Cohen, G., Afshar, S., Tapson, J., van Schaik, A.: EMNIST: An Extension of MNIST to Handwritten Letters. CoRR abs/1702.05373 (2017)

Cuzzolin, F.: Belief Functions: Theory and Applications, vol. 8764 (2014). https://doi.org/10.1007/978-3-319-11191-9

Dempster, A.P.: Upper and lower probabilities induced by a multivalued mapping. Ann. Math. Stat. 38(2), 325–339 (1967). https://doi.org/10.1214/aoms/1177698950

Fixsen, D., Mahler, R.P.: The modified dempster-shafer approach to classification. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 27(1), 96–104 (1997). https://doi.org/10.1109/3468.553228

Gal, Y., Ghahramani, Z.: Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In: Proceedings of the 33rd International Conference on International Conference on Machine Learning, vol. 48, pp. 1050–1059. JMLR.org (2016)

Grycko, E.: Classification with set-valued decision functions. In: Opitz, O., Lausen, B., Klar, R. (eds.) Information and Classification, pp. 218–224. Springer, Heidelberg (1993). https://doi.org/10.1007/978-3-642-50974-2_22

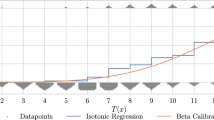

Guo, C., Pleiss, G., Sun, Y., Weinberger, K.Q.: On calibration of modern neural networks. In: International Conference on Machine Learning, pp. 1321–1330. PMLR (2017)

Haenni, R.: Shedding New Light on Zadeh’s Criticism of Dempster’s Rule of Combination, vol. 2, p. 6-pp. (2005). https://doi.org/10.1109/ICIF.2005.1591951

Herbei, R., Wegkamp, M.H.: Classification with reject option. Can. J. Stat./La Revue Canadienne de Statistique 709–721 (2006). https://doi.org/10.1002/cjs.5550340410

Hüllermeier, E., Waegeman, W.: Aleatoric and epistemic uncertainty in machine learning: an introduction to concepts and methods. Mach. Learn. 110, 457–506 (2021)

Ji, B., Jung, H., Yoon, J., Kim, K., Shin, Y.: Bin-wise temperature scaling (BTS): improvement in confidence calibration performance through simple scaling techniques. In: 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), pp. 4190–4196 (2019). https://doi.org/10.1109/ICCVW.2019.00515

Joy, T., Pinto, F., Lim, S.N., Torr, P.H., Dokania, P.K.: Sample-dependent adaptive temperature scaling for improved calibration. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, no. 12, pp. 14919–14926 (2023). https://doi.org/10.1609/aaai.v37i12.26742

Krizhevsky, A., Nair, V., Hinton, G.: CIFAR-10 (Canadian Institute for Advanced Research). http://www.cs.toronto.edu/~kriz/cifar.html

Lakshminarayanan, B., Pritzel, A., Blundell, C.: Simple and scalable predictive uncertainty estimation using deep ensembles. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 6405–6416. Curran Associates Inc., Red Hook (2017)

Lei, J., Wasserman, L.: Distribution-free prediction bands for non-parametric regression. J. Roy. Stat. Soc. 76(1), 71–96 (2014)

Li, C., et al.: Hyper evidential deep learning to quantify composite classification uncertainty. In: The Twelfth International Conference on Learning Representations (2024)

Lienen, J., Hüllermeier, E.: Credal self-supervised learning. In: Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W. (eds.) 35th Conference on Neural Information Processing Systems (NeurIPS 2021), pp. 14370–14382. Curran Associates, Inc. (2021)

MacKay, D.J.C.: A practical Bayesian framework for backpropagation networks. Neural Comput. 4(3), 448–472 (1992). https://doi.org/10.1162/neco.1992.4.3.448

Manchingal, S.K., Cuzzolin, F.: Epistemic Deep Learning. arXiv preprint arXiv:2206.07609 (2022)

Manchingal, S.K., Mubashar, M., Wang, K., Shariatmadar, K., Cuzzolin, F.: Random-Set Convolutional Neural Network (RS-CNN) for Epistemic Deep Learning. arXiv preprint arXiv:2307.05772 (2023)

Mortier, T., Wydmuch, M., Dembczyński, K., Hüllermeier, E., Waegeman, W.: Efficient set-valued prediction in multi-class classification. Data Min. Knowl. Discov. 35(4), 1435–1469 (2021). https://doi.org/10.1007/s10618-021-00751-x

Nguyen, H.T.: On random sets and belief functions. J. Math. Anal. Appl. 65(3), 531–542 (1978)

Papadopoulos, H., Proedrou, K., Vovk, V., Gammerman, A.: Inductive confidence machines for regression. In: Elomaa, T., Mannila, H., Toivonen, H. (eds.) ECML 2002. LNCS (LNAI), vol. 2430, pp. 345–356. Springer, Heidelberg (2002). https://doi.org/10.1007/3-540-36755-1_29

Pearl, J.: On probability intervals. Int. J. Approximate Reasoning 2(3), 211–216 (1988). https://doi.org/10.1016/0888-613X(88)90117-X

Pearl, J.: Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann, Burlington (1988)

Sale, Y., Caprio, M., Hüllermeier, E.: Is the volume of a credal set a good measure for epistemic uncertainty? In: Uncertainty in Artificial Intelligence, pp. 1795–1804. PMLR (2023)

Sensoy, M., Kaplan, L., Kandemir, M.: Evidential deep learning to quantify classification uncertainty. In: Advances in Neural Information Processing Systems, vol. 31 (2018)

Shafer, G.: A Mathematical Theory of Evidence. Princeton University Press, Princeton (1976). https://doi.org/10.1515/9780691214696

Shafer, G.: Belief functions and possibility measures. In: Anal of Fuzzy Inf, pp. 51–84. CRC Press Inc (1987)

Shafer, G.: Perspectives on the theory and practice of belief functions. Int. J. Approximate Reasoning 4(5), 323–362 (1990). https://doi.org/10.1016/0888-613X(90)90012-Q

Shafer, G.: Allocations of probability. In: Yager, R.R., Liu, L. (eds.) Classic Works of the Dempster-Shafer Theory of Belief Functions, pp. 183–196. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-44792-4_7

Shafer, G., Vovk, V.: A tutorial on conformal prediction. J. Mach. Learn. Res. 9, 371–421 (2008)

Smets, P.: The nature of the unnormalized beliefs encountered in the transferable belief model. In: Dubois, D., Wellman, M.P., D’Ambrosio, B., Smets, P. (eds.) Uncertainty in Artificial Intelligence, pp. 292–297. Morgan Kaufmann (1992)

Stallkamp, J., Schlipsing, M., Salmen, J., Igel, C.: The German traffic sign recognition benchmark: a multi-class classification competition. In: IEEE International Joint Conference on Neural Networks, pp. 1453–1460 (2011). https://doi.org/10.1109/IJCNN.2011.6033395

Stutz, D., Roy, A.G., Matejovicova, T., Strachan, P., Cemgil, A.T., Doucet, A.: Conformal Prediction under Ambiguous Ground Truth. arXiv e-prints (2023)

Vovk, V., Gammerman, A., Shafer, G.: Algorithmic Learning in a Random World, vol. 29. Springer, New York (2005). https://doi.org/10.1007/b106715

Wang, Z., Gao, R., Yin, M., Zhou, M., Blei, D.: Probabilistic conformal prediction using conditional random samples. In: Proceedings of The 26th International Conference on Artificial Intelligence and Statistics, vol. 206, pp. 8814–8836. PMLR (2023)

Wilson, N.: How much do you Believe? Int. J. Approx. Reason. 6(3), 345–365 (1992). https://doi.org/10.1016/0888-613X(92)90029-Y

Yager, R.R.: On the Dempster-Shafer framework and new combination rules. Inf. Sci. 41(2), 93–137 (1987). https://doi.org/10.1016/0020-0255(87)90007-7

Yu, Y., Bates, S., Ma, Y., Jordan, M.: Robust calibration with multi-domain temperature scaling. In: Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A. (eds.) Advances in Neural Information Processing Systems, vol. 35, pp. 27510–27523. Curran Associates, Inc. (2022)

Zadeh, L.: On the validity of dempster’s rule of combination of evidence. Technical report, EECS Department, University of California, Berkeley (1979)

Acknowledgments

This work was supported by the Dutch National Growth Fund (NGF), as part of the Quantum Delta NL programme, as well as the Dutch Research Council (NWO/OCW), as part of the Quantum Software Consortium programme (project number 024.003.03), and co-funded by the European Union (ERC CoG, BeMAIQuantum, 101124342). Views and opinions expressed are, however, those of the author(s) only and do not necessarily reflect those of the European Union or the European Research Council. Neither the European Union nor the granting authority can be held responsible for them.

Ethics declarations

Reproducibility Statement

Code is made available from the authors upon request.

Disclaimer

The results, opinions and conclusions expressed in this publication are not necessarily those of Volkswagen Aktiengesellschaft.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kempkes, M.C., Dunjko, V., van Nieuwenburg, E., Spiegelberg, J. (2024). Reliable Classifications with Guaranteed Confidence Using the Dempster-Shafer Theory of Evidence. In: Bifet, A., Davis, J., Krilavičius, T., Kull, M., Ntoutsi, E., Žliobaitė, I. (eds) Machine Learning and Knowledge Discovery in Databases. Research Track. ECML PKDD 2024. Lecture Notes in Computer Science, vol 14942. Springer, Cham. https://doi.org/10.1007/978-3-031-70344-7_6

DOI: https://doi.org/10.1007/978-3-031-70344-7_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-70343-0

Online ISBN: 978-3-031-70344-7

eBook Packages: Computer Science, Computer Science (R0)