Abstract

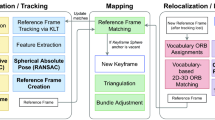

Extended Reality (XR) technologies, including Virtual Reality (VR) and Augmented Reality (AR), offer immersive experiences that merge digital content with the real world. Achieving precise spatial tracking over large areas is a critical challenge in XR development. This paper addresses drift: small tracking errors accumulate over time and produce a growing discrepancy between the real and virtual worlds. Tackling this issue is crucial for co-located XR experiences in which virtual and physical elements interact seamlessly. Building on locally accurate spatial anchors, we propose a solution that extends this accuracy to larger areas by using an external, drift-corrected tracking method as ground truth. During the preparation stage, anchors are placed simultaneously in the headset’s tracking system and in the external tracking method, yielding 3D-3D correspondences. During the operational stage, both anchor clouds, and thus both tracking methods, are aligned using a suitable point cloud registration method. By leveraging the headset’s built-in tracking capabilities during the operational stage, our method allows standalone operation, which enhances user comfort and mobility. Additionally, the method can be used with any XR headset that supports spatial anchors and with any drift-free external tracking method. Empirical evaluation demonstrates the system’s effectiveness in aligning virtual content with the real world and in expanding the accurate tracking area. The alignment is further evaluated by comparing the camera poses reported by both tracking methods. This approach may benefit a wide range of industries and applications, including manufacturing and construction, education, and entertainment.
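The alignment step can be illustrated with a minimal sketch. The abstract does not name the registration method, so the SVD-based least-squares rigid fit below is an assumption, chosen because it is a common way to align two point sets with known 3D-3D correspondences. The function name `rigid_align`, the anchor coordinates, and the simulated offset between the two tracking frames are all hypothetical and are not taken from the paper.

```python
from typing import Tuple

import numpy as np


def rigid_align(src: np.ndarray, dst: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Return (R, t) minimising sum_i ||R @ src[i] + t - dst[i]||^2.

    src and dst are (N, 3) arrays whose rows are corresponding anchors.
    """
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # 3x3 cross-covariance of the centred anchor clouds.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t


# Hypothetical anchor positions (metres) in the headset's frame.
headset_anchors = np.array([
    [0.0, 0.0, 0.0],
    [4.0, 0.0, 0.0],
    [4.0, 0.0, 6.0],
    [0.0, 0.0, 6.0],
    [2.0, 2.5, 3.0],
])

# Simulated ground truth: the external frame is rotated 30 degrees about the
# vertical (y) axis and shifted relative to the headset frame.
angle = np.deg2rad(30.0)
R_true = np.array([
    [np.cos(angle), 0.0, np.sin(angle)],
    [0.0, 1.0, 0.0],
    [-np.sin(angle), 0.0, np.cos(angle)],
])
t_true = np.array([1.5, 0.0, -2.0])
external_anchors = headset_anchors @ R_true.T + t_true

R, t = rigid_align(headset_anchors, external_anchors)

# Per-anchor residual after mapping headset anchors into the external frame.
residuals = np.linalg.norm(headset_anchors @ R.T + t - external_anchors, axis=1)
print("max residual (m):", residuals.max())
```

With real anchors the correspondences are noisy, so the residuals would not vanish as they do in this noise-free simulation. An outlier-tolerant wrapper around the same fit may then be preferable.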

Acknowledgements

This work was funded by the European Union’s Horizon Europe Programme under grant agreement 101070072, Flanders Make’s XRTwin SBO project (R-12528), MAX-R (Mixed Augmented and eXtended Reality media pipeline), the Special Research Fund (BOF) of Hasselt University (R-14360) and a specialized FWO fellowship grant (1SHDZ24N).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Vanherck, J., Zoomers, B., Jorissen, L., Vandebroeck, I., Joris, E., Michiels, N. (2024). Large-Area Spatially Aligned Anchors. In: De Paolis, L.T., Arpaia, P., Sacco, M. (eds) Extended Reality. XR Salento 2024. Lecture Notes in Computer Science, vol 15027. Springer, Cham. https://doi.org/10.1007/978-3-031-71707-9_3

DOI: https://doi.org/10.1007/978-3-031-71707-9_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-71706-2

Online ISBN: 978-3-031-71707-9

eBook Packages: Computer Science, Computer Science (R0)