Abstract

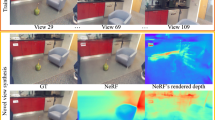

Neural Radiance Fields (NeRF) have achieved impressive results in 3D reconstruction and novel view generation. A significant challenge for NeRF is editing reconstructed 3D scenes, for example removing objects, which demands consistency across multiple views and high-quality synthesis of the affected perspectives. Previous studies have integrated depth priors, typically sourced from LiDAR or from sparse depth estimates produced by COLMAP, to enhance NeRF’s performance in object removal. However, these methods are either expensive or time-consuming. This paper proposes a new pipeline that combines SpinNeRF with monocular depth estimation models such as ZoeDepth to enhance NeRF’s performance in complex object removal with improved efficiency. A thorough evaluation of COLMAP’s dense depth reconstruction on the KITTI dataset is conducted to demonstrate that COLMAP can be viewed as a cost-effective and scalable alternative to traditional methods such as LiDAR for acquiring depth ground truth. This serves as the basis for evaluating monocular depth estimation models and determining the best one for generating depth priors for SpinNeRF. The new pipeline is tested in various scenarios involving 3D reconstruction and object removal. The results indicate that it significantly reduces the time required to acquire depth priors for object removal and enhances the fidelity of the synthesized views, suggesting substantial potential for building high-fidelity digital twin systems with increased efficiency in the future.
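The abstract describes comparing one source of depth (COLMAP, or a monocular model such as ZoeDepth) against a reference depth (such as LiDAR on KITTI). As a minimal sketch of what such a comparison can look like, the snippet below computes three error measures widely used in the depth estimation literature: absolute relative error, RMSE, and the δ < 1.25 accuracy. The abstract does not state which metrics the paper uses, so the choice of metrics, the function name `depth_metrics`, and the 80 m depth cap are all assumptions made for illustration.

```python
import math

def depth_metrics(pred, ref, min_depth=1e-3, max_depth=80.0):
    """Compare predicted depths against reference depths (both in metres).

    Hypothetical helper, not taken from the paper. Pixels whose reference
    depth lies outside [min_depth, max_depth] are skipped, which is one
    common way to ignore missing values in a sparse reference.
    """
    pairs = [(p, r) for p, r in zip(pred, ref)
             if min_depth <= r <= max_depth and p > 0]
    if not pairs:
        raise ValueError("no valid pixels to evaluate")
    n = len(pairs)
    # Mean absolute error relative to the reference depth.
    abs_rel = sum(abs(p - r) / r for p, r in pairs) / n
    # Root mean squared error in metres.
    rmse = math.sqrt(sum((p - r) ** 2 for p, r in pairs) / n)
    # Fraction of pixels whose ratio to the reference is below 1.25.
    delta1 = sum(1 for p, r in pairs if max(p / r, r / p) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}

# Toy data: the third reference value is 0.0 (no measurement) and is skipped.
pred = [10.0, 22.0, 5.0, 40.0]
ref = [10.0, 20.0, 0.0, 30.0]
metrics = depth_metrics(pred, ref)
```

In practice the inputs would be flattened depth maps rather than short lists, and a monocular prediction may need to be aligned in scale with the reference before the comparison is meaningful.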




Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Guo, Z., Wang, P. (2025). Depth Priors in Removal Neural Radiance Fields. In: Huda, M.N., Wang, M., Kalganova, T. (eds) Towards Autonomous Robotic Systems. TAROS 2024. Lecture Notes in Computer Science, vol 15051. Springer, Cham. https://doi.org/10.1007/978-3-031-72059-8_31


DOI: https://doi.org/10.1007/978-3-031-72059-8_31


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72058-1

Online ISBN: 978-3-031-72059-8

eBook Packages: Computer Science, Computer Science (R0)