Abstract

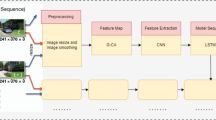

Recent work in Visual Odometry and SLAM has shown the effectiveness of using deep network backbones. Despite excellent accuracy, such approaches are often expensive to run or do not generalize well zero-shot. To address this problem, we introduce Deep Patch Visual-SLAM, a new system for monocular visual SLAM based on the DPVO visual odometry system. We introduce two loop closure mechanisms which significantly improve the accuracy with minimal runtime and memory overhead. On real-world datasets, DPV-SLAM runs at 1x-3x real-time framerates. We achieve comparable accuracy to DROID-SLAM on EuRoC and TartanAir while running twice as fast using a third of the VRAM. We also outperform DROID-SLAM by large margins on KITTI. As DPV-SLAM is an extension to DPVO, its code can be found in the same repository: https://github.com/princeton-vl/DPVO

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lipson, L., Teed, Z., Deng, J. (2025). Deep Patch Visual SLAM. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15060. Springer, Cham. https://doi.org/10.1007/978-3-031-72627-9_24

DOI: https://doi.org/10.1007/978-3-031-72627-9_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72626-2

Online ISBN: 978-3-031-72627-9

eBook Packages: Computer Science (R0)