Abstract

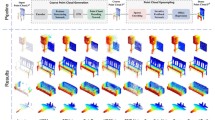

Point cloud registration is a fundamental problem for large-scale 3D scene scanning and reconstruction. With the help of deep learning, registration methods have evolved significantly, reaching a nearly mature stage. Since its introduction, the Neural Radiance Field (NeRF) has become the most popular 3D scene representation owing to its powerful view synthesis capabilities. Registration of NeRF representations is likewise required for large-scale scene reconstruction, yet this topic remains largely unexplored. This is due to the inherent difficulty of modeling the geometric relationship between two scenes with implicit representations. Existing methods usually convert the implicit representation into an explicit one for further registration. Most recently, Gaussian Splatting (GS) has been introduced, employing explicit 3D Gaussians. This method significantly enhances rendering speed while maintaining high rendering quality. In this work, we explore the 3D registration task between two scenes with explicit GS representations. To this end, we propose GaussReg, a novel coarse-to-fine framework that is both fast and accurate. The coarse stage follows existing point cloud registration methods and estimates a rough alignment for point clouds from GS. We further present a new image-guided fine registration approach, which renders images from GS to provide more detailed geometric information for precise alignment. To support comprehensive evaluation, we carefully build a scene-level dataset called ScanNet-GSReg with 1379 scenes obtained from the ScanNet dataset and collect an in-the-wild dataset called GSReg. Experimental results demonstrate that our method achieves state-of-the-art performance on multiple datasets. Our GaussReg is \(44\times\) faster than HLoc (with SuperPoint as the feature extractor and SuperGlue as the matcher) with comparable accuracy.
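As a generic illustration of what "estimating a rough alignment" between two point sets involves, the sketch below recovers a similarity transform (scale, rotation, translation) from already-matched 3D points using the closed-form Umeyama solution. It is not the GaussReg network and is not taken from the paper: the function name `estimate_similarity`, the synthetic data, and the assumption that the two scenes may differ in scale are all introduced here for illustration only.

```python
import numpy as np


def estimate_similarity(src, dst):
    """Least-squares s, R, t such that dst ≈ s * R @ src + t (Umeyama closed form).

    Hypothetical helper for illustration; src and dst are (N, 3) arrays of
    corresponding points, assumed to be matched already.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)

    # Center both point sets on their centroids.
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d

    # Cross-covariance between target and source, then its SVD.
    cov = xd.T @ xs / n
    U, S, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.ones(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0
    R = U @ np.diag(d) @ Vt

    # Scale from the singular values and the source variance.
    var_s = (xs ** 2).sum() / n
    s = (S * d).sum() / var_s

    # Translation maps the scaled, rotated source centroid onto the target centroid.
    t = mu_d - s * R @ mu_s
    return s, R, t


# Synthetic check: apply a known transform, then recover it.
rng = np.random.default_rng(0)
src_pts = rng.normal(size=(50, 3))

angle = np.deg2rad(30.0)
R_true = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle),  np.cos(angle), 0.0],
    [0.0,            0.0,           1.0],
])
s_true = 1.7
t_true = np.array([0.5, -1.0, 2.0])

dst_pts = s_true * src_pts @ R_true.T + t_true
s_est, R_est, t_est = estimate_similarity(src_pts, dst_pts)
```

With noise-free correspondences the recovered parameters match the ground truth up to floating-point error. In practice, a learned registration method supplies the correspondences, and a robust wrapper such as RANSAC is commonly placed around a solver like this one to reject outliers.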

Acknowledgments

The work was supported in part by NSFC-62172348, the Basic Research Project No. HZQB-KCZYZ-2021067 of Hetao Shenzhen-HK S&T Cooperation Zone, Guangdong Provincial Outstanding Youth Fund (No. 2023B1515020055), the National Key R&D Program of China with grant No. 2018YFB1800800, by Shenzhen Outstanding Talents Training Fund 202002, by Guangdong Research Projects No. 2017ZT07X152 and No. 2019CX01X104, by Key Area R&D Program of Guangdong Province (Grant No. 2018B030338001), by the Guangdong Provincial Key Laboratory of Future Networks of Intelligence (Grant No. 2022B1212010001), and by Shenzhen Key Laboratory of Big Data and Artificial Intelligence (Grant No. ZDSYS201707251409055). It was also partly supported by NSFC-61931024, and Shenzhen Science and Technology Program No. JCYJ20220530143604010.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Chang, J., Xu, Y., Li, Y., Chen, Y., Feng, W., Han, X. (2025). GaussReg: Fast 3D Registration with Gaussian Splatting. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15073. Springer, Cham. https://doi.org/10.1007/978-3-031-72633-0_23

DOI: https://doi.org/10.1007/978-3-031-72633-0_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72632-3

Online ISBN: 978-3-031-72633-0

eBook Packages: Computer Science, Computer Science (R0)