Abstract

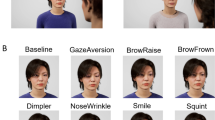

Do our facial expressions change when we speak over video calls? Given two unpaired sets of videos of people, we seek to automatically find spatio-temporal patterns that are distinctive of each set. Existing methods use discriminative approaches and perform post-hoc explainability analysis. Such methods are insufficient: they cannot provide insights beyond obvious dataset biases, and their explanations are useful only if humans themselves are good at the task. Instead, we tackle the problem through the lens of generative domain translation: our method generates a detailed report of learned, input-dependent spatio-temporal features and the extent to which they vary between the domains. We demonstrate that our method can discover behavioral differences between conversing face-to-face (F2F) and on video calls (VCs). We also show that our method can discover differences in presidential communication styles. Additionally, our method predicts, in an unsupervised way, temporal change-points in videos that decouple expressions, which increases the interpretability and usefulness of our model. Finally, because our method is generative, it can transform a video call to appear as if it were recorded in an F2F setting. Experiments and visualizations show that our approach discovers a range of behaviors, taking a step towards a deeper understanding of human behavior. Video results, code, and data can be found at facet.cs.columbia.edu.

Acknowledgements

This research is based on work partially supported by the DARPA CCU program under contract HR001122C0034 and the National Science Foundation AI Institute for Artificial and Natural Intelligence (ARNI). PT is supported by the Apple PhD fellowship.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Sarin, S., Mall, U., Tendulkar, P., Vondrick, C. (2025). How Video Meetings Change Your Expression. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15075. Springer, Cham. https://doi.org/10.1007/978-3-031-72643-9_10

DOI: https://doi.org/10.1007/978-3-031-72643-9_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72642-2

Online ISBN: 978-3-031-72643-9

eBook Packages: Computer Science, Computer Science (R0)