Abstract

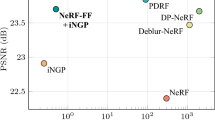

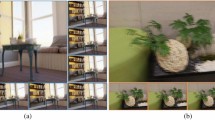

Dynamic Neural Radiance Fields (NeRF) from monocular videos have recently been explored for space-time novel view synthesis and have achieved excellent results. However, defocus blur caused by depth variation often occurs in video capture and compromises the quality of dynamic reconstruction, because the lack of sharp details interferes with modeling temporal consistency between input views. To tackle this issue, we propose \(D^{2}RF\), the first dynamic NeRF method designed to restore sharp novel views from defocused monocular videos. We introduce layered Depth-of-Field (DoF) volume rendering to model the defocus blur and reconstruct a sharp NeRF supervised by defocused views. The blur model is inspired by the connection between DoF rendering and volume rendering: the opacity in volume rendering aligns with the layer visibility in DoF rendering. To execute the blurring, we convert the layered blur kernel into a ray-based kernel and employ an optimized sparse kernel to gather the input rays efficiently, then render the optimized rays with our layered DoF volume rendering. We synthesize a dataset of defocused dynamic scenes for our task, and extensive experiments on this dataset show that our method outperforms existing approaches in synthesizing all-in-focus novel views from defocused input while maintaining spatial-temporal consistency in the scene.
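The link between opacity and layer visibility can be illustrated with the standard NeRF quadrature, in which each sample along a ray acts like a thin layer that is visible only through the transmittance left by the samples in front of it. The following is a minimal sketch under stated assumptions, not the \(D^{2}RF\) implementation: the function names `composite_ray` and `blur_pixel` are hypothetical, and `blur_pixel` simply blends already-composited ray colours with a small set of kernel weights, which is a simplification of the layered DoF volume rendering described above.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Front-to-back compositing along one ray (standard NeRF quadrature).

    sigmas: volume densities at the samples
    colors: RGB triples at the samples
    deltas: distances between adjacent samples
    Returns the composited RGB colour and the per-sample weights.
    """
    transmittance = 1.0  # visibility of the current sample from the camera
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = transmittance * alpha          # visible contribution
        weights.append(weight)
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha            # occlude what lies behind
    return pixel, weights


def blur_pixel(ray_pixels, kernel_weights):
    """Hypothetical sparse gather: blend a few sharp ray colours.

    Assumption: the kernel is a short list of non-negative weights, one per
    gathered ray, normalized here so that the weights sum to one.
    """
    total = sum(kernel_weights)
    return [
        sum(w * px[ch] for w, px in zip(kernel_weights, ray_pixels)) / total
        for ch in range(3)
    ]
```

In this sketch a sample with zero density contributes nothing, while an opaque sample absorbs all remaining transmittance, which mirrors how a fully visible front layer hides the layers behind it in layered DoF rendering.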

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Luo, X., Sun, H., Peng, J., Cao, Z. (2025). Dynamic Neural Radiance Field from Defocused Monocular Video. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15063. Springer, Cham. https://doi.org/10.1007/978-3-031-72652-1_9

DOI: https://doi.org/10.1007/978-3-031-72652-1_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72651-4

Online ISBN: 978-3-031-72652-1

eBook Packages: Computer Science, Computer Science (R0)