Abstract

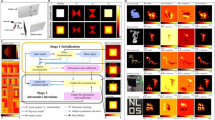

This paper presents a novel optimization-based method for non-line-of-sight (NLOS) imaging that aims to reconstruct hidden scenes under general setups with significantly reduced reconstruction time. In NLOS imaging, the visible surfaces of the target objects are notably sparse. To avoid unnecessary computations arising from empty regions, we design our method to render the transients through partial propagations from a continuously sampled set of points in the hidden space. Our method accurately and efficiently models view-dependent reflectance using surface normals, which enables us to obtain surface geometry as well as albedo. Within this pipeline, we propose a novel domain reduction strategy to eliminate superfluous computations in empty regions. During optimization, our domain reduction procedure periodically prunes the empty regions from the sampling domain in a coarse-to-fine manner, leading to a substantial improvement in efficiency. We demonstrate the effectiveness of our method in various NLOS scenarios with sparse scanning patterns. Experiments conducted on both synthetic and real-world data support the efficacy of our method in general NLOS scenarios and its improved efficiency compared to previous optimization-based solutions. Our code is available at https://github.com/hyunbo9/domain-reduction-strategy.
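The coarse-to-fine pruning idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation (their code is at the repository linked above); it only shows the general pattern of alternating pruning and subdivision on a voxelized sampling domain. All names (`prune`, `subdivide`, `sample_points`, `toy_density`, `coarse_to_fine`) are hypothetical, and `toy_density` is an assumed stand-in for whatever density or albedo estimate the optimization would produce. Here it simply marks a plane at z = 0.3 as occupied.

```python
import random


def prune(active, density, threshold):
    """Keep only the voxels whose estimated density reaches the threshold."""
    return {v for v in active if density(v) >= threshold}


def subdivide(active):
    """Split every surviving voxel into 8 children (half the edge length)."""
    return {
        (2 * i + di, 2 * j + dj, 2 * k + dk)
        for (i, j, k) in active
        for di in (0, 1) for dj in (0, 1) for dk in (0, 1)
    }


def sample_points(active, size, n, rng):
    """Draw n continuous points uniformly from the union of active voxels."""
    voxels = sorted(active)
    points = []
    for _ in range(n):
        i, j, k = rng.choice(voxels)
        points.append(((i + rng.random()) * size,
                       (j + rng.random()) * size,
                       (k + rng.random()) * size))
    return points


def toy_density(voxel, size, plane_z=0.3):
    """ASSUMPTION: stand-in for an optimized density; 1 on the plane z = plane_z."""
    k = voxel[2]
    return 1.0 if k * size <= plane_z < (k + 1) * size else 0.0


def coarse_to_fine(levels=3, base=2, threshold=0.5):
    """Alternate pruning and subdivision on the unit cube [0, 1)^3."""
    size = 1.0 / base
    active = {(i, j, k) for i in range(base)
              for j in range(base) for k in range(base)}
    for level in range(levels):
        # A real pipeline would run optimization iterations here, rendering
        # transients only from points drawn by sample_points(active, ...).
        active = prune(active, lambda v: toy_density(v, size), threshold)
        if level < levels - 1:
            active = subdivide(active)
            size /= 2
    return active, size


active, size = coarse_to_fine()
full_grid = round(1.0 / size) ** 3
points = sample_points(active, size, 5, random.Random(0))
print(f"{len(active)} of {full_grid} voxels remain at edge length {size}")
# prints "64 of 512 voxels remain at edge length 0.125"
```

In this toy setting only one eighth of the finest grid remains in the sampling domain, so every sampled point lies in the thin slab around the occupied plane. The saving in a real reconstruction would depend on the scene and on how the pruning threshold and schedule are chosen.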

H. Shim and I. Cho—Equal contribution.

Acknowledgements

This work was supported by the Samsung Research Funding Center (SRFC-IT2001-04), the Artificial Intelligence Innovation Hub under Grant RS-2021-II212068, and an Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (Artificial Intelligence Graduate School Program, Yonsei University, under Grant 2020-0-01361).

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shim, H., Cho, I., Kwon, D., Kim, S.J. (2025). Domain Reduction Strategy for Non-Line-of-Sight Imaging. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15089. Springer, Cham. https://doi.org/10.1007/978-3-031-72751-1_5

DOI: https://doi.org/10.1007/978-3-031-72751-1_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72750-4

Online ISBN: 978-3-031-72751-1

eBook Packages: Computer Science, Computer Science (R0)