Abstract

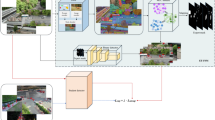

Because data are difficult to collect, the mean-teacher learning paradigm has emerged as a critical approach to cross-domain object detection, especially in adverse weather conditions. Despite significant progress, existing methods still suffer from low-quality pseudo-labels on degraded images. This paper proposes a generation-composition training framework that improves pseudo-label generation quality with a tiny-object-friendly loss, the IAoU loss, and refines the pseudo-label filtering scheme with a joint-filtering and student-aware strategy. Specifically, in the generation phase, we observe that bounding box regression is essential for feature alignment, and we develop the IAoU loss to make bounding box regression more precise, which in turn facilitates subsequent feature alignment. We also find that selecting bounding boxes based solely on classification confidence performs poorly on noisy cross-domain images. Moreover, relying exclusively on the teacher model's predictions can cause the student model to collapse. Accordingly, in the composition phase, we equip the mean-teacher model with a joint-filtering and student-aware strategy that combines classification and regression thresholds from both the student and the teacher models. Extensive experiments on synthetic and real-world adverse weather datasets show that the proposed method surpasses state-of-the-art methods in all scenarios, notably achieving a 12.4% mAP improvement on the Cityscapes-to-RTTS adaptation task. Our code will be available at https://github.com/iu110/GCHQ/.
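The abstract names three ingredients: a mean-teacher (EMA) teacher, a bounding-box regression loss, and pseudo-label filtering that uses classification and regression cues from both teacher and student. The plain-Python sketch below illustrates these ideas under stated assumptions. `ema_update` follows the standard mean-teacher rule of Tarvainen and Valpola. `iou_loss` is an ordinary 1 - IoU loss used only as a stand-in, because the IAoU formulation is not given here. `joint_filter`, `Detection`, and the thresholds `cls_thr`, `reg_thr`, and `student_cls_thr` are hypothetical names and values chosen for illustration; they are one possible reading of "joint-filtering and student-aware", not the authors' implementation (the repository linked above holds the actual code).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred: Box, target: Box) -> float:
    """Plain 1 - IoU regression loss: a stand-in, NOT the paper's IAoU loss."""
    return 1.0 - box_iou(pred, target)


def ema_update(teacher: Dict[str, float], student: Dict[str, float],
               alpha: float = 0.999) -> Dict[str, float]:
    """Mean-teacher rule: teacher <- alpha * teacher + (1 - alpha) * student."""
    return {k: alpha * teacher[k] + (1.0 - alpha) * student[k] for k in teacher}


@dataclass
class Detection:
    box: Box
    label: int
    score: float  # classification confidence in [0, 1]


def joint_filter(teacher_dets: List[Detection], student_dets: List[Detection],
                 cls_thr: float = 0.7, reg_thr: float = 0.6,
                 student_cls_thr: float = 0.5) -> List[Detection]:
    """Hypothetical joint filter; thresholds are illustrative assumptions.

    A teacher box becomes a pseudo-label only if it is confident enough AND
    a same-class, sufficiently confident student box agrees with its location.
    """
    kept = []
    for t in teacher_dets:
        if t.score < cls_thr:  # teacher classification threshold
            continue
        for s in student_dets:
            if (s.label == t.label and s.score >= student_cls_thr
                    and box_iou(t.box, s.box) >= reg_thr):  # regression agreement
                kept.append(t)
                break
    return kept


if __name__ == "__main__":
    teacher = [Detection((0, 0, 10, 10), 1, 0.90),
               Detection((20, 20, 30, 30), 2, 0.95),
               Detection((50, 50, 60, 60), 1, 0.40)]
    student = [Detection((1, 1, 10, 10), 1, 0.60),
               Detection((40, 40, 45, 45), 2, 0.80)]
    print([d.box for d in joint_filter(teacher, student)])  # [(0, 0, 10, 10)]
    # A 2-pixel shift hurts a tiny box far more than a large one under plain IoU.
    print(round(box_iou((0, 0, 4, 4), (2, 0, 6, 4)), 3))      # 0.333
    print(round(box_iou((0, 0, 40, 40), (2, 0, 42, 40)), 3))  # 0.905
```

In the usage example only the first teacher box survives: it is confident, and a same-class student box overlaps it with IoU 0.81. The second has no agreeing student box, and the third falls below `cls_thr`. The last two lines show why plain IoU is harsh on tiny objects, which is the kind of weakness a tiny-object-friendly loss is meant to address: the same 2-pixel shift leaves a 40×40 box above 0.9 IoU but drops a 4×4 box to 1/3.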

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (Project number 62101336); and in part by the Guangdong Basic and Applied Basic Research Foundation (Project number 2022A1515011301).

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhao, R., Yan, H., Wang, S. (2025). Revisiting Domain-Adaptive Object Detection in Adverse Weather by the Generation and Composition of High-Quality Pseudo-labels. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15094. Springer, Cham. https://doi.org/10.1007/978-3-031-72764-1_16

DOI: https://doi.org/10.1007/978-3-031-72764-1_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72763-4

Online ISBN: 978-3-031-72764-1

eBook Packages: Computer Science, Computer Science (R0)