Abstract

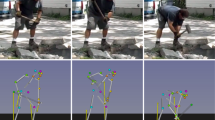

Current approaches for 3D human motion synthesis generate high-quality animations of digital humans performing a wide variety of actions and gestures. However, a notable technological gap exists in addressing the complex dynamics of multi-human interactions within this paradigm. In this work, we present ReMoS, a denoising diffusion-based model that synthesizes full-body reactive motion of a person in a two-person interaction scenario. Given the motion of one person, we employ a combined spatio-temporal cross-attention mechanism to synthesize the reactive body and hand motion of the second person, thereby completing the interaction between the two. We demonstrate ReMoS across challenging two-person scenarios such as pair-dancing, Ninjutsu, kickboxing, and acrobatics, where one person's movements have complex and diverse influences on the other. We also contribute the ReMoCap dataset for two-person interactions, containing full-body and finger motions. We evaluate ReMoS through multiple quantitative metrics, qualitative visualizations, and a user study, and also demonstrate its usability in interactive motion editing applications. More details are available on the project page: https://vcai.mpi-inf.mpg.de/projects/remos.
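To make the conditioning idea above concrete, here is a minimal, illustrative sketch of how a reactor's noisy motion could attend to an actor's motion along time and along joints, together with a standard forward-noising step of a denoising diffusion model. It is an interpretation under loudly stated assumptions, not the ReMoS implementation: the `[frames][joints][features]` nested-list layout, the identity (unlearned) query/key/value projections, the sequential temporal-then-spatial ordering with residual connections, and the linear beta schedule are all hypothetical choices made for illustration.

```python
import math
import random
from typing import List

# Hypothetical layout (assumption): motion[t][j] is a D-dim feature vector
# for joint j at frame t.
Motion = List[List[List[float]]]
Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: each query row attends over all key rows."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum, used here as a residual connection."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def spatio_temporal_cross_attention(reactor: Motion, actor: Motion) -> Motion:
    """Reactor features attend to actor features along time, then along joints.

    Assumption: learned Q/K/V projections are omitted (identity maps), and the
    two attention passes are applied one after the other with residuals.
    """
    T, J = len(reactor), len(reactor[0])
    # Temporal pass: for each joint, the reactor's trajectory attends to the
    # actor's trajectory of the same joint across all frames.
    temporal = [[None] * J for _ in range(T)]
    for j in range(J):
        r_traj = [reactor[t][j] for t in range(T)]
        a_traj = [actor[t][j] for t in range(T)]
        fused = add(r_traj, cross_attention(r_traj, a_traj, a_traj))
        for t in range(T):
            temporal[t][j] = fused[t]
    # Spatial pass: at each frame, every reactor joint attends to all actor joints.
    return [add(temporal[t], cross_attention(temporal[t], actor[t], actor[t]))
            for t in range(T)]


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t of a linear DDPM beta schedule (assumed)."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0: Motion, alpha_bar: float, rng: random.Random) -> Motion:
    """Forward noising: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[[a * x + b * rng.gauss(0.0, 1.0) for x in joint] for joint in frame]
            for frame in x0]


# Usage with random toy data: 4 frames, 3 joints, 2 features per joint.
rng = random.Random(0)
T, J, D = 4, 3, 2
actor = [[[rng.uniform(-1, 1) for _ in range(D)] for _ in range(J)] for _ in range(T)]
reactor_clean = [[[rng.uniform(-1, 1) for _ in range(D)] for _ in range(J)] for _ in range(T)]
alpha_bars = linear_alpha_bars(1000)
x_t = q_sample(reactor_clean, alpha_bars[500], rng)
features = spatio_temporal_cross_attention(x_t, actor)
```

In a trained model, `features` would feed a network that predicts the clean reactive motion (or the added noise) at step `t`; that denoiser and its training loop are not shown here. Splitting attention into a per-joint temporal pass and a per-frame spatial pass keeps each attention matrix small (T x T or J x J) instead of attending over all T·J tokens at once, which is one plausible reading of a "combined spatio-temporal" design.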

Notes

1. Unpublished at the time of our paper submission.

Acknowledgements

This research was supported by the EU Horizon 2020 grant Carousel+ (101017779) and the ERC Consolidator Grant 4DRepLy (770784). We thank Marc Jahan, Christopher Ruf, Michael Hiery, Thomas Leimkühler and Sascha Huwer for helping with the Ninjutsu data collection, and Noshaba Cheema for helping with the Lindy Hop data collection. We also thank Janis Sprenger and Duarte David for helping with the motion visualizations.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ghosh, A., Dabral, R., Golyanik, V., Theobalt, C., Slusallek, P. (2025). REMOS: 3D Motion-Conditioned Reaction Synthesis for Two-Person Interactions. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15094. Springer, Cham. https://doi.org/10.1007/978-3-031-72764-1_24

DOI: https://doi.org/10.1007/978-3-031-72764-1_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72763-4

Online ISBN: 978-3-031-72764-1

eBook Packages: Computer Science; Computer Science (R0)