Abstract

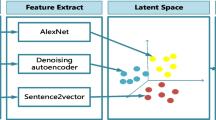

Understanding and modeling the popularity of User Generated Content (UGC) short videos on social media platforms presents a critical challenge with broad implications for content creators and recommendation systems. This study examines the problem of predicting engagement for newly published videos with limited user interactions. Surprisingly, our findings reveal that Mean Opinion Scores from previous video quality assessment datasets do not strongly correlate with video engagement levels. To address this, we introduce a substantial dataset comprising 90,000 real-world UGC short videos from Snapchat. Rather than relying on view count, average watch time, or rate of likes, we propose two metrics to describe the engagement levels of short videos: normalized average watch percentage (NAWP) and engagement continuation rate (ECR). Comprehensive multi-modal features, including visual content, background music, and text data, are investigated to enhance engagement prediction. With the proposed dataset and two key metrics, our method demonstrates its ability to predict the engagement of short videos purely from video content.
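The abstract names NAWP and ECR but does not define them, so the following is only a rough sketch of what watch-percentage and continuation-style metrics could look like. Everything in it is an assumption made for illustration: the `ViewSession` record, the function names, the capping of each session at 100%, and the 5-second cutoff are hypothetical and are not taken from the paper. The normalization step implied by the "N" in NAWP is deliberately left out, because the abstract does not say how it is done.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ViewSession:
    """One viewing of a video (hypothetical record layout)."""
    watch_seconds: float


def average_watch_percentage(sessions, duration_seconds):
    """Mean fraction of the video watched, each session capped at 1.0.

    Assumption: replays beyond the full length count as 100%.
    No normalization is applied here.
    """
    if not sessions or duration_seconds <= 0:
        raise ValueError("need at least one session and a positive duration")
    return mean(min(s.watch_seconds / duration_seconds, 1.0) for s in sessions)


def continuation_rate(sessions, threshold_seconds=5.0):
    """Share of sessions that watched longer than threshold_seconds.

    Assumption: the threshold is an illustrative cutoff, not a value
    stated in the abstract.
    """
    if not sessions:
        raise ValueError("need at least one session")
    kept_watching = sum(s.watch_seconds > threshold_seconds for s in sessions)
    return kept_watching / len(sessions)


if __name__ == "__main__":
    demo = [ViewSession(2.0), ViewSession(10.0), ViewSession(20.0)]
    print(average_watch_percentage(demo, duration_seconds=10.0))
    print(continuation_rate(demo))
```

Both quantities are ratios rather than raw counts, which matches the abstract's stated preference for metrics other than view count, average watch time, or rate of likes.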

D. Li—First author. Main work was completed during an internship at Snap.




Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Li, D. et al. (2025). Delving Deep into Engagement Prediction of Short Videos. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15112. Springer, Cham. https://doi.org/10.1007/978-3-031-72949-2_17


DOI: https://doi.org/10.1007/978-3-031-72949-2_17


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72948-5

Online ISBN: 978-3-031-72949-2

eBook Packages: Computer Science, Computer Science (R0)