Abstract

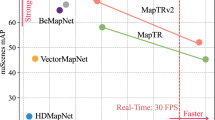

Online vectorized High-Definition (HD) map construction is critical for downstream prediction and planning. Recent efforts have built strong baselines for this task; however, the geometric shapes and relations of instances in road systems, such as parallelism, perpendicularity, and rectangular shapes, remain under-explored. In this work, we propose GeMap (Geometry Map), which learns the Euclidean shapes and relations of map instances end-to-end, beyond fundamental perception. Specifically, we design a geometric loss based on angle and magnitude clues that is robust to rigid transformations of driving scenarios. To address the limitations of the vanilla attention mechanism in learning geometry, we propose decoupling self-attention so that Euclidean shapes and relations are handled independently. GeMap achieves new state-of-the-art performance on the nuScenes and Argoverse 2 datasets. Remarkably, it reaches 71.8% mAP on the large-scale Argoverse 2 dataset, outperforming MapTRv2 by 4.4% and surpassing the 70% mAP threshold for the first time. Code is available at https://github.com/cnzzx/GeMap.
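To illustrate why angle and magnitude clues can be robust to rigid transformations, the following is a minimal sketch and not the loss implemented in GeMap. It assumes a map instance is a 2D polyline, describes it by the lengths of its displacement vectors and the signed turning angles between consecutive ones, and compares two polylines with an L1 distance on those quantities. The function names (`shape_descriptor`, `geometric_l1`, `rigid_transform`) are hypothetical and chosen only for this example.

```python
import numpy as np

def shape_descriptor(points):
    """Return edge lengths and signed turning angles of a 2D polyline."""
    pts = np.asarray(points, dtype=float)
    edges = np.diff(pts, axis=0)                     # displacement vectors, shape (N-1, 2)
    lengths = np.linalg.norm(edges, axis=1)          # magnitude clue
    a, b = edges[:-1], edges[1:]
    cross = a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0]
    dot = np.sum(a * b, axis=1)
    angles = np.arctan2(cross, dot)                  # angle clue, in (-pi, pi]
    return lengths, angles

def geometric_l1(pred, target):
    """Illustrative L1 distance between the descriptors of two polylines."""
    pred_len, pred_ang = shape_descriptor(pred)
    tgt_len, tgt_ang = shape_descriptor(target)
    # Wrap angle differences into (-pi, pi] before taking absolute values.
    ang_diff = np.angle(np.exp(1j * (pred_ang - tgt_ang)))
    return np.abs(pred_len - tgt_len).mean() + np.abs(ang_diff).mean()

def rigid_transform(points, theta, shift):
    """Rotate a polyline by theta (radians) and translate it by shift."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    return np.asarray(points, dtype=float) @ rot.T + np.asarray(shift, dtype=float)

# A rectangle-like instance, the same instance after a rigid motion,
# and a version with one corner displaced.
rectangle = [[0, 0], [4, 0], [4, 2], [0, 2]]
moved = rigid_transform(rectangle, theta=0.7, shift=[10.0, -3.0])
distorted = [[0, 0], [4, 0], [5, 2], [0, 2]]

loss_moved = geometric_l1(rectangle, moved)          # numerically zero
loss_distorted = geometric_l1(rectangle, distorted)  # strictly positive
```

Because rotations and translations preserve lengths and angles, the descriptor of `moved` matches that of `rectangle`, whereas a loss on raw coordinates would penalize the same rigid motion heavily. Only a change of shape, as in `distorted`, produces a non-zero value here.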



Acknowledgments

This work is partially supported by the National Natural Science Foundation of China (No. 62272045, No. 8326014), The Shun Hing Institute of Advanced Engineering (No. 8115074), and CUHK Direct Grants (No. 4055190).




© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, Z., Zhang, Y., Ding, X., Jin, F., Yue, X. (2025). Online Vectorized HD Map Construction Using Geometry. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15107. Springer, Cham. https://doi.org/10.1007/978-3-031-72967-6_5


DOI: https://doi.org/10.1007/978-3-031-72967-6_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-72966-9

Online ISBN: 978-3-031-72967-6

eBook Packages: Computer Science, Computer Science (R0)