Abstract

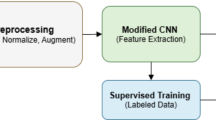

CLIP is a powerful and widely used tool for understanding images in the context of natural language descriptions. However, owing to its generic nature, it does not offer application-specific, fine-grained, and structured understanding. In this work, we adapt CLIP for fine-grained and structured visual understanding of museum exhibits, where the structure takes the form of tabular data. To facilitate such understanding, we (a) collect, curate, and benchmark a dataset of 200K+ image-table pairs, and (b) develop a method that predicts tabular outputs for input images. Our dataset is the first of its kind in the public domain, and the proposed method is novel in leveraging CLIP’s powerful representations for fine-grained and tabular understanding. The proposed method (MUZE) learns to map CLIP’s image embeddings to the tabular structure by means of a transformer-based parsing network (parseNet). More specifically, parseNet predicts missing attribute values while integrating context from the known attribute-value pairs of an input image. We show that this leads to a significant improvement in accuracy. Through extensive experiments, we demonstrate the effectiveness of the proposed method for fine-grained and structured understanding of museum exhibits, achieving encouraging results on a newly established benchmark. Our dataset and source code can be found at: https://github.com/insait-institute/MUZE
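To make the task setting concrete, the following is a minimal sketch of how an image-table pair with missing attributes might be represented and scored. It is not the MUZE implementation: the `Exhibit` class, the helper functions, the attribute names, and the example values are all hypothetical, and the "predictions" are hard-coded stand-ins for the output of a model such as parseNet.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

MASK = None  # hypothetical marker for an attribute value that must be predicted


@dataclass
class Exhibit:
    """One image-table pair; the field names are illustrative assumptions."""
    image_path: str
    table: Dict[str, Optional[str]]


def mask_attributes(table: Dict[str, str], to_hide: Set[str]) -> Dict[str, Optional[str]]:
    """Hide the chosen attributes so that they become prediction targets."""
    return {k: (MASK if k in to_hide else v) for k, v in table.items()}


def split_context_and_queries(exhibit: Exhibit) -> Tuple[Dict[str, str], List[str]]:
    """Separate known attribute-value pairs (context) from missing attributes (queries)."""
    context = {k: v for k, v in exhibit.table.items() if v is not MASK}
    queries = [k for k, v in exhibit.table.items() if v is MASK]
    return context, queries


def attribute_accuracy(predicted: Dict[str, str], reference: Dict[str, str]) -> float:
    """Fraction of predicted attributes whose value exactly matches the reference."""
    if not predicted:
        return 0.0
    hits = sum(1 for k, v in predicted.items() if reference.get(k) == v)
    return hits / len(predicted)


# Hypothetical exhibit record; the values are made up for illustration.
full_table = {
    "title": "Amphora",
    "material": "ceramic",
    "culture": "Greek",
    "period": "Classical",
}
exhibit = Exhibit("amphora.jpg", mask_attributes(full_table, {"culture", "period"}))
context, queries = split_context_and_queries(exhibit)

# Stand-in for model output: one correct and one incorrect prediction.
predicted = {"culture": "Greek", "period": "Hellenistic"}
accuracy = attribute_accuracy(predicted, full_table)
```

In this sketch, `context` carries the known attribute-value pairs that a parsing network could condition on together with the image embedding, while `queries` lists the attributes whose values are to be predicted.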

Notes

1. Some other variants, such as [1], also exist; however, separate vision-only representation models are often preferred, primarily due to ease of development.

2. We refer to both the dataset and the method by the same name. In case of ambiguity, we use MUZE (dataset) or MUZE (method).

References

Alayrac, J.B., et al.: Flamingo: a visual language model for few-shot learning. Adv. Neural. Inf. Process. Syst. 35, 23716–23736 (2022)

Bai, Z., Nakashima, Y., Garcia, N.: Explain me the painting: multi-topic knowledgeable art description generation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 5422–5432 (2021)

Bangalath, H., Maaz, M., Khattak, M.U., Khan, S.H., Shahbaz Khan, F.: Bridging the gap between object and image-level representations for open-vocabulary detection. Adv. Neural. Inf. Process. Syst. 35, 33781–33794 (2022)

Bao, H., Dong, L., Piao, S., Wei, F.: BEiT: BERT pre-training of image transformers. arXiv preprint arXiv:2106.08254 (2021)

Barz, B., Denzler, J.: Deep learning on small datasets without pre-training using cosine loss. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 1371–1380 (2020)

Becattini, F., et al.: VISCOUNTH: a large-scale multilingual visual question answering dataset for cultural heritage. ACM Trans. Multimedia Comput. Commun. Appl. (2023)

Campos, R., Mangaravite, V., Pasquali, A., Jorge, A., Nunes, C., Jatowt, A.: YAKE! keyword extraction from single documents using multiple local features. Inf. Sci. 509, 257–289 (2020)

Chen, W., Zha, H., Chen, Z., Xiong, W., Wang, H., Wang, W.: HybridQA: a dataset of multi-hop question answering over tabular and textual data. arXiv preprint arXiv:2004.07347 (2020)

Chen, X., et al.: PaLI: a jointly-scaled multilingual language-image model. In: ICLR (2023)

Chen, Y.-C., et al.: UNITER: universal image-text representation learning. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXX, pp. 104–120. Springer International Publishing, Cham (2020). https://doi.org/10.1007/978-3-030-58577-8_7

Cherti, M., et al.: Reproducible scaling laws for contrastive language-image learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2818–2829 (2023)

Conde, M.V., Turgutlu, K.: CLIP-Art: contrastive pre-training for fine-grained art classification. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3956–3960 (2021)

Cui, P., Zhang, D., Deng, Z., Dong, Y., Zhu, J.: Learning sample difficulty from pre-trained models for reliable prediction. In: Advances in Neural Information Processing Systems, vol. 36 (2024)

Dataset, E.: Novel datasets for fine-grained image categorization. In: First Workshop on Fine Grained Visual Categorization, CVPR. Citeseer (2011)

Ding, J., Xue, N., Xia, G., Dai, D.: Decoupling zero-shot semantic segmentation. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 11573–11582 (2022)

Gao, H., Mao, J., Zhou, J., Huang, Z., Wang, L., Xu, W.: Are you talking to a machine? Dataset and methods for multilingual image question. In: Advances in Neural Information Processing Systems, vol. 28 (2015)

Garcia, N., et al.: A dataset and baselines for visual question answering on art. In: Bartoli, A., Fusiello, A. (eds.) Computer Vision – ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part II, pp. 92–108. Springer International Publishing, Cham (2020). https://doi.org/10.1007/978-3-030-66096-3_8

Gu, X., Lin, T.Y., Kuo, W., Cui, Y.: Open-vocabulary object detection via vision and language knowledge distillation. arXiv preprint arXiv:2104.13921 (2021)

Hannan, D., Jain, A., Bansal, M.: MANYMODALQA: modality disambiguation and QA over diverse inputs. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 7879–7886 (2020)

Hwang, W., et al.: Post-OCR parsing: building simple and robust parser via BIO tagging. In: Workshop on Document Intelligence at NeurIPS 2019 (2019)

Jia, C., et al.: Scaling up visual and vision-language representation learning with noisy text supervision. In: International Conference on Machine Learning, pp. 4904–4916. PMLR (2021)

Ju, C., Han, T., Zheng, K., Zhang, Y., Xie, W.: Prompting visual-language models for efficient video understanding. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXV, pp. 105–124. Springer Nature Switzerland, Cham (2022). https://doi.org/10.1007/978-3-031-19833-5_7

Kamath, A., Singh, M., LeCun, Y., Synnaeve, G., Misra, I., Carion, N.: MDETR-modulated detection for end-to-end multi-modal understanding. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1780–1790 (2021)

Kim, G., et al.: OCR-free document understanding transformer. In: European Conference on Computer Vision (ECCV) (2022)

Kim, W., Son, B., Kim, I.: ViLT: vision-and-language transformer without convolution or region supervision. In: International Conference on Machine Learning, pp. 5583–5594. PMLR (2021)

Lee, C.Y., et al.: FormNet: structural encoding beyond sequential modeling in form document information extraction. In: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (2022). https://doi.org/10.18653/v1/2022.acl-long.260, https://aclanthology.org/2022.acl-long.260

Lee, K., et al.: Pix2Struct: screenshot parsing as pretraining for visual language understanding. In: Proceedings of the 40th International Conference on Machine Learning (2023). https://proceedings.mlr.press/v202/lee23g.html

Li, B., Weinberger, K.Q., Belongie, S., Koltun, V., Ranftl, R.: Language-driven semantic segmentation. In: International Conference on Learning Representations (2022). https://openreview.net/forum?id=RriDjddCLN

Li, B., Weinberger, K.Q., Belongie, S.J., Koltun, V., Ranftl, R.: Language-driven semantic segmentation. CoRR abs/2201.03546 (2022). https://arxiv.org/abs/2201.03546

Li, J., Li, D., Xiong, C., Hoi, S.: BLIP: bootstrapping language-image pre-training for unified vision-language understanding and generation. In: International Conference on Machine Learning, pp. 12888–12900. PMLR (2022)

Li, L.H., Yatskar, M., Yin, D., Hsieh, C.J., Chang, K.W.: VisualBERT: a simple and performant baseline for vision and language. arXiv preprint arXiv:1908.03557 (2019)

Li, X., et al.: Oscar: object-semantics aligned pre-training for vision-language tasks. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXX, pp. 121–137. Springer International Publishing, Cham (2020). https://doi.org/10.1007/978-3-030-58577-8_8

Lin, W., Chen, J., Mei, J., Coca, A., Byrne, B.: Fine-grained late-interaction multi-modal retrieval for retrieval augmented visual question answering. In: Advances in Neural Information Processing Systems, vol. 36 (2024)

Liu, F., et al.: DePlot: one-shot visual language reasoning by plot-to-table translation. In: Findings of the Association for Computational Linguistics: ACL 2023 (2023). https://doi.org/10.18653/v1/2023.findings-acl.660, https://aclanthology.org/2023.findings-acl.660

Loshchilov, I., Hutter, F.: Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101 (2017)

Lu, J., Batra, D., Parikh, D., Lee, S.: ViLBERT: pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In: Advances in Neural Information Processing Systems, vol. 32 (2019)

Lu, Y., Guo, C., Dai, X., Wang, F.Y.: Data-efficient image captioning of fine art paintings via virtual-real semantic alignment training. Neurocomputing 490, 163–180 (2022)

Luo, J., Li, Z., Wang, J., Lin, C.Y.: ChartOCR: data extraction from charts images via a deep hybrid framework. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 1917–1925 (2021)

Maaz, M., Rasheed, H., Khan, S., Khan, F.S., Anwer, R.M., Yang, M.-H.: Class-agnostic object detection with multi-modal transformer. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part X, pp. 512–531. Springer Nature Switzerland, Cham (2022). https://doi.org/10.1007/978-3-031-20080-9_30

Maji, S., Rahtu, E., Kannala, J., Blaschko, M., Vedaldi, A.: Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151 (2013)

Marty, P.F., Jones, K.B.: Museum Informatics: People, Information, and Technology in Museums, vol. 2. Taylor & Francis (2008)

Masry, A., Long, D.X., Tan, J.Q., Joty, S., Hoque, E.: ChartQA: a benchmark for question answering about charts with visual and logical reasoning. arXiv preprint arXiv:2203.10244 (2022)

Meng, F., et al.: Foundation model is efficient multimodal multitask model selector. arXiv preprint arXiv:2308.06262 (2023)

Ni, B., et al.: Expanding language-image pretrained models for general video recognition. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part IV, pp. 1–18. Springer Nature Switzerland, Cham (2022). https://doi.org/10.1007/978-3-031-19772-7_1

Nishanbaev, I., Champion, E., McMeekin, D.A.: A survey of geospatial semantic web for cultural heritage. Heritage 2(2), 1471–1498 (2019)

Pham, H., et al.: Combined scaling for zero-shot transfer learning. Neurocomputing 555, 126658 (2023)

Radford, A., et al.: Learning transferable visual models from natural language supervision. In: International Conference on Machine Learning, pp. 8748–8763. PMLR (2021)

Rasheed, H., Khattak, M.U., Maaz, M., Khan, S., Khan, F.S.: Fine-tuned CLIP models are efficient video learners. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6545–6554 (2023)

Sheng, S., Van Gool, L., Moens, M.F.: A dataset for multimodal question answering in the cultural heritage domain. In: Proceedings of the COLING 2016 Workshop on Language Technology Resources and Tools for Digital Humanities (LT4DH), pp. 10–17. ACL (2016)

Siegel, N., Horvitz, Z., Levin, R., Divvala, S., Farhadi, A.: FigureSeer: parsing result-figures in research papers. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part VII, pp. 664–680. Springer International Publishing, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_41

Singh, A., Hu, R., Goswami, V., Couairon, G., Galuba, W., Rohrbach, M., Kiela, D.: FLAVA: a foundational language and vision alignment model. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 15638–15650 (2022)

Talmor, A., et al.: MULTIMODALQA: complex question answering over text, tables and images. arXiv preprint arXiv:2104.06039 (2021)

Wah, C., Branson, S., Welinder, P., Perona, P., Belongie, S.: The Caltech-UCSD Birds-200-2011 dataset. Technical report, California Institute of Technology (2011)

Wang, M., Xing, J., Liu, Y.: ActionCLIP: a new paradigm for video action recognition. arXiv preprint arXiv:2109.08472 (2021)

Wang, Z., Wu, Z., Agarwal, D., Sun, J.: MedCLIP: contrastive learning from unpaired medical images and text. arXiv preprint arXiv:2210.10163 (2022)

Wei, Y., et al.: Improving CLIP fine-tuning performance. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 5439–5449 (2023)

Zhai, X., et al.: LiT: zero-shot transfer with locked-image text tuning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 18123–18133 (2022)

Zhang, C., Kaeser-Chen, C., Vesom, G., Choi, J., Kessler, M., Belongie, S.: The iMet collection 2019 challenge dataset. arXiv preprint arXiv:1906.00901 (2019)

Zhang, R., et al.: Tip-Adapter: training-free CLIP-Adapter for better vision-language modeling. arXiv preprint arXiv:2111.03930 (2021)

Zhou, C., Loy, C.C., Dai, B.: Extract free dense labels from CLIP. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXVIII, pp. 696–712. Springer Nature Switzerland, Cham (2022). https://doi.org/10.1007/978-3-031-19815-1_40

Zhou, K., Yang, J., Loy, C.C., Liu, Z.: Conditional prompt learning for vision-language models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 16816–16825 (2022)

Zhou, K., Yang, J., Loy, C.C., Liu, Z.: Learning to prompt for vision-language models. Int. J. Comput. Vis. 130(9), 2337–2348 (2022)

Zhou, X., Girdhar, R., Joulin, A., Krähenbühl, P., Misra, I.: Detecting twenty-thousand classes using image-level supervision. In: European Conference on Computer Vision, pp. 350–368. Springer (2022). https://doi.org/10.1007/978-3-031-20077-9_21

Acknowledgements

This research was partially funded by the Ministry of Education and Science of Bulgaria (support for INSAIT, part of the Bulgarian National Roadmap for Research Infrastructure). We thank the Bulgarian National Archaeological Institute with Museum for the support and guidance, the British Museum and Victoria&Albert Museum for the access to their data that made this research possible, and Google DeepMind which provided vital support and resources for this research. We also thank the anonymous reviewers for their efforts and valuable feedback to improve our work.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Balauca, AA., Paudel, D.P., Toutanova, K., Van Gool, L. (2025). Taming CLIP for Fine-Grained and Structured Visual Understanding of Museum Exhibits. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15134. Springer, Cham. https://doi.org/10.1007/978-3-031-73116-7_22

DOI: https://doi.org/10.1007/978-3-031-73116-7_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-73115-0

Online ISBN: 978-3-031-73116-7

eBook Packages: Computer Science (R0)