Abstract

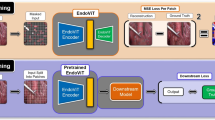

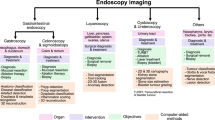

While recent advances in deep learning (DL) for surgical scene segmentation have yielded promising results on single-centre, single-imaging-modality data, these methods usually do not generalise to unseen distributions or unseen modalities. Human experts can still recognise the relevant visual appearances under such shifts, but DL methods often fail when data samples do not follow a similar distribution to the training data. Existing work on tackling the domain gap caused by modality changes has focused mostly on natural scene data. However, these methods cannot be directly applied to endoscopic data, as its visual cues are very limited compared to those of natural scenes. In this work, we exploit the style and content information in the image by performing instance normalization and feature covariance mapping to preserve robust and generalisable feature representations. Further, to avoid discarding salient feature representations associated with the objects of interest, we introduce a restitution module within the ResNet feature-learning backbone that retains useful task-relevant features. On the target (different-modality) set of the EndoUDA polyp dataset, our proposed method obtained a 13.7% improvement over the DeepLabv3+ baseline and nearly 8% over recent state-of-the-art (SOTA) methods. Similarly, on the EndoUDA Barrett’s esophagus (BE) data, our method achieved a 19% improvement over the baseline and 6% over the best-performing SOTA method.
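To make the three operations named in the abstract concrete, here is a minimal sketch under stated assumptions; it is not the authors' implementation. A feature map is one sample's C channels, each flattened to a list of H*W activations. `instance_norm` removes per-channel mean and variance (often read as style statistics). The covariance step is shown as a generic whitening penalty on all off-diagonal channel covariances, in the spirit of RobustNet (Choi et al., in the references) rather than its instance-selective variant, since the abstract does not specify the exact mapping. `restitute` follows the style normalization and restitution idea of Jin et al. (also in the references): the residual R = F - IN(F) is gated and partly added back. The per-channel gate parameters `gate_w` and `gate_b` are hypothetical stand-ins for a learned channel-attention block, and all function names are illustrative.

```python
import math
from typing import List

# One sample's feature map: C channels, each flattened to H*W activations.
Feature = List[List[float]]


def instance_norm(x: Feature, eps: float = 1e-5) -> Feature:
    """Normalise every channel to zero mean and (about) unit variance.

    Per-channel spatial mean and std are often read as "style" statistics,
    so removing them keeps mainly content information.
    """
    out = []
    for ch in x:
        mu = sum(ch) / len(ch)
        var = sum((v - mu) ** 2 for v in ch) / len(ch)
        out.append([(v - mu) / math.sqrt(var + eps) for v in ch])
    return out


def channel_covariance(x: Feature) -> List[List[float]]:
    """C x C covariance between channels over spatial positions (biased, /HW)."""
    n = len(x[0])
    means = [sum(ch) / n for ch in x]
    c = len(x)
    return [
        [sum((x[i][k] - means[i]) * (x[j][k] - means[j]) for k in range(n)) / n
         for j in range(c)]
        for i in range(c)
    ]


def whitening_loss(x: Feature) -> float:
    """Mean absolute off-diagonal covariance of instance-normalised features.

    Assumption: every off-diagonal entry is penalised; RobustNet's
    instance-selective whitening penalises only style-sensitive entries.
    """
    cov = channel_covariance(instance_norm(x))
    c = len(cov)
    if c < 2:
        return 0.0  # a single channel has no cross-channel covariance
    off_diag = sum(abs(cov[i][j]) for i in range(c) for j in range(c) if i != j)
    return off_diag / (c * (c - 1))


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def restitute(x: Feature, gate_w: List[float], gate_b: List[float]) -> Feature:
    """SNR-style restitution: add back the gated part of what IN removed.

    R = F - IN(F) is the residual discarded by normalisation. A hypothetical
    per-channel gate a_c = sigmoid(gate_w[c] * mean(R_c) + gate_b[c]) stands in
    for a learned channel-attention block; the output is IN(F) + a * R.
    """
    normed = instance_norm(x)
    out = []
    for c, (ch, nch) in enumerate(zip(x, normed)):
        resid = [v - nv for v, nv in zip(ch, nch)]
        a = _sigmoid(gate_w[c] * sum(resid) / len(resid) + gate_b[c])
        out.append([nv + a * r for nv, r in zip(nch, resid)])
    return out


if __name__ == "__main__":
    feats = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]]
    print(round(whitening_loss(feats), 3))
    print(restitute(feats, gate_w=[0.0, 0.0], gate_b=[0.0, 0.0]))
```

With the gate fully closed the output reduces to IN(F), discarding all style information; fully open, it recovers F unchanged. A trained module would learn intermediate, input-dependent gate values so that only task-relevant residual features are restored.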

References

Bang, C.S., Lee, J.J., Baik, G.H.: Computer-aided diagnosis of esophageal cancer and neoplasms in endoscopic images: a systematic review and meta-analysis of diagnostic test accuracy. Gastrointest. Endosc. 93(5), 1006–1015 (2021)

Banik, D., Roy, K., Bhattacharjee, D., Nasipuri, M., Krejcar, O.: Polyp-Net: a multimodel fusion network for polyp segmentation. IEEE Trans. Instrum. Meas. 70, 1–12 (2020)

Carneiro, G., Pu, L.Z.C.T., Singh, R., Burt, A.: Deep learning uncertainty and confidence calibration for the five-class polyp classification from colonoscopy. Med. Image Anal. 62, 101653 (2020)

Celik, N., Ali, S., Gupta, S., Braden, B., Rittscher, J.: EndoUDA: a modality independent segmentation approach for endoscopy imaging. In: de Bruijne, M., et al. (eds.) MICCAI 2021. LNCS, vol. 12903, pp. 303–312. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-87199-4_29

Chen, L.C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 801–818 (2018)

Cho, W., Choi, S., Park, D.K., Shin, I., Choo, J.: Image-to-image translation via group-wise deep whitening-and-coloring transformation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10639–10647 (2019)

Choi, S., Jung, S., Yun, H., Kim, J.T., Kim, S., Choo, J.: RobustNet: improving domain generalization in urban-scene segmentation via instance selective whitening. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11580–11590 (2021)

Gatys, L., Ecker, A.S., Bethge, M.: Texture synthesis using convolutional neural networks. Adv. Neural Inf. Process. Syst. 28 (2015)

Gatys, L.A., Ecker, A.S., Bethge, M.: Image style transfer using convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2414–2423 (2016)

Jaspers, T.J., et al.: Robustness evaluation of deep neural networks for endoscopic image analysis: insights and strategies. Med. Image Anal. 94, 103157 (2024)

Jin, X., Lan, C., Zeng, W., Chen, Z.: Style normalization and restitution for domain generalization and adaptation. IEEE Trans. Multimedia 24, 3636–3651 (2021)

Kim, J., Lee, J., Park, J., Min, D., Sohn, K.: Pin the memory: learning to generalize semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4350–4360 (2022)

Lee, S., Seong, H., Lee, S., Kim, E.: WildNet: learning domain generalized semantic segmentation from the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9936–9946 (2022)

Li, Y., Fang, C., Yang, J., Wang, Z., Lu, X., Yang, M.H.: Universal style transfer via feature transforms. Adv. Neural Inf. Process. Syst. 30 (2017)

Martinez-Garcia-Peña, R., Teevno, M.A., Ochoa-Ruiz, G., Ali, S.: SUPRA: superpixel guided loss for improved multi-modal segmentation in endoscopy. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 285–294 (2023)

Menon, S., Trudgill, N.: How commonly is upper gastrointestinal cancer missed at endoscopy? A meta-analysis. Endoscopy Int. Open 2(02), E46–E50 (2014)

Nogueira-Rodríguez, A., et al.: Real-time polyp detection model using convolutional neural networks. Neural Comput. Appl. 34(13), 10375–10396 (2022)

Pan, X., Luo, P., Shi, J., Tang, X.: Two at once: enhancing learning and generalization capacities via IBN-Net. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 464–479 (2018)

Pan, X., Zhan, X., Shi, J., Tang, X., Luo, P.: Switchable whitening for deep representation learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1863–1871 (2019)

Pasha, S.F., Leighton, J.A., Das, A., Gurudu, S., Sharma, V.K.: Narrow band imaging (NBI) and white light endoscopy (WLE) have a comparable yield for detection of colon polyps in patients undergoing screening or surveillance colonoscopy: a meta-analysis. Gastrointest. Endosc. 69(5), AB363 (2009)

Sung, H., et al.: Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 71(3), 209–249 (2021)

Teevno, M.A., Martinez-Garcia-Peña, R., Ochoa-Ruiz, G., Ali, S.: Domain generalization for endoscopic image segmentation by disentangling style-content information and superpixel consistency. In: 2024 IEEE 37th International Symposium on Computer-Based Medical Systems (CBMS), pp. 383–390 (2024). https://doi.org/10.1109/CBMS61543.2024.00070

Ulyanov, D., Vedaldi, A., Lempitsky, V.: Instance normalization: the missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022 (2016)

Zhang, Z., et al.: Pathologist-level interpretable whole-slide cancer diagnosis with deep learning. Nat. Mach. Intell. 1(5), 236–245 (2019)

Acknowledgements

The authors wish to acknowledge the Mexican Council for Science and Technology (CONAHCYT) for its support through postgraduate scholarships in this project. This work has been supported by Azure Sponsorship credits granted by Microsoft’s AI for Good Research Lab through the AI for Health program. The project was also partially supported by the French-Mexican ANUIES CONAHCYT Ecos Nord grant 322537 and by the Worldwide Universities Network (WUN) funded project “Optimise: Novel robust computer vision methods and synthetic datasets for minimally invasive surgery.”

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Teevno, M.A., Ochoa-Ruiz, G., Ali, S. (2025). Tackling Domain Generalization for Out-of-Distribution Endoscopic Imaging. In: Xu, X., Cui, Z., Rekik, I., Ouyang, X., Sun, K. (eds) Machine Learning in Medical Imaging. MLMI 2024. Lecture Notes in Computer Science, vol 15242. Springer, Cham. https://doi.org/10.1007/978-3-031-73290-4_5

DOI: https://doi.org/10.1007/978-3-031-73290-4_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-73292-8

Online ISBN: 978-3-031-73290-4

eBook Packages: Computer Science, Computer Science (R0)