Abstract

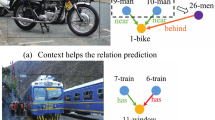

Scene Graph Generation (SGG) aims to explore the relationships between objects in images and obtain scene summary graphs, thereby better serving downstream tasks. However, the long-tailed problem degrades scene graph quality: predictions are dominated by coarse-grained relationships and lack more informative fine-grained ones. The union region of an object pair (i.e., one sample) contains rich, dedicated contextual information, which makes it possible to predict a sample-specific bias for refining the original relationship prediction. We therefore propose a novel Sample-Level Bias Prediction (SBP) method for fine-grained SGG (SBG). First, we train a classic SGG model and construct a correction bias set by calculating the margin between the ground-truth label and the label predicted by that model. Then, we devise a Bias-Oriented Generative Adversarial Network (BGAN) that learns to predict the constructed correction biases, which are used to correct the original predictions from coarse-grained relationships to fine-grained ones. Extensive experiments on the VG, GQA, and VG-1800 datasets demonstrate that SBG outperforms state-of-the-art methods in terms of Average@K across three mainstream SGG models: Motif, VCtree, and Transformer. Compared with dataset-level correction methods on VG, SBG achieves significant average improvements of 5.6%, 3.9%, and 3.2% in Average@K on the PredCls, SGCls, and SGDet tasks, respectively. The code will be available at https://github.com/Zhuzi24/SBG.
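The idea of a per-sample correction bias can be illustrated with a minimal sketch. The abstract does not give the exact form of the "margin", so the code below assumes it is the one-hot ground-truth vector minus the base model's predicted distribution. The predicate names, logits, and helper functions are hypothetical and are not taken from the released code. At inference time the ground truth is unavailable, which is why the paper trains BGAN to predict the bias. Here the oracle bias stands in for a perfect BGAN output.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def correction_bias(probs, gt_index):
    """Assumed margin: one-hot ground truth minus predicted distribution."""
    return [(1.0 if i == gt_index else 0.0) - p for i, p in enumerate(probs)]

def apply_bias(probs, bias):
    """Refine the base prediction by adding the (predicted) bias."""
    return [p + b for p, b in zip(probs, bias)]

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

# Hypothetical predicate vocabulary: one coarse-grained, two fine-grained.
PREDICATES = ["on", "standing on", "sitting on"]

# Hypothetical logits from a base SGG model that favors the coarse "on".
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)

# Building the correction bias set uses the ground-truth label.
gt_index = PREDICATES.index("standing on")
bias = correction_bias(probs, gt_index)

# Stand-in for a perfect bias predictor: apply the oracle bias.
corrected = apply_bias(probs, bias)

predicted_before = PREDICATES[argmax(probs)]
predicted_after = PREDICATES[argmax(corrected)]
print(predicted_before, "->", predicted_after)
```

Under this assumed definition the bias entries sum to zero, so adding the bias shifts probability mass from the coarse-grained predicate to the fine-grained one without changing the total.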

Acknowledgements

This work was partly supported by the National Natural Science Foundation of China (42371321 and 42030102), the Natural Science Foundation of Hubei Province (Grant No. 2024AFB283), and the Science Foundation of China Three Gorges University (Grant No. 2023RCKJ0022).

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Li, Y., Wang, T., Wu, K., Wang, L., Guo, X., Wang, W. (2025). Fine-Grained Scene Graph Generation via Sample-Level Bias Prediction. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15084. Springer, Cham. https://doi.org/10.1007/978-3-031-73347-5_2

DOI: https://doi.org/10.1007/978-3-031-73347-5_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-73346-8

Online ISBN: 978-3-031-73347-5

eBook Packages: Computer Science, Computer Science (R0)