Abstract

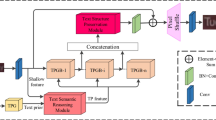

Scene text image super-resolution (STISR) aims at enhancing the visual clarity of a low-resolution text image for human perception or tasks like text recognition. In recent STISR work, various visual and semantic clues of the text play a key role in recovering the details of the text, but the utilization of different clues and their interactions is still insufficient, which often results in distorted or blurred appearances of the reconstructed text. To address this problem, we propose a multi-prompt guided text image super-resolution network (MPGTSRN). Specifically, we introduce multiple visual prompts for the text and combine them with semantic features to comprehensively capture the diverse characteristics of the text. We then propose a recurrent reconstruction network integrating multiple visual-semantic prompts to enhance the representation of the text and yield a high-resolution text image. We further propose a cross-representation attention mechanism that utilizes the complementarity of different prompts to guide the reconstruction network to adaptively focus on salient parts of the text and effectively improves the text details. The experimental results show the superiority of our proposed MPGTSRN in the STISR task.

M. Li and Z. Zhuang—Equal contribution.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Bautista, D., Atienza, R.: Scene text recognition with permuted autoregressive sequence models. In: EECV, pp. 178–196. Springer (2022)

Chan, K.C., Wang, X., Xu, X., Gu, J., Loy, C.C.: GLEAN: generative latent bank for large-factor image super-resolution. In: CVPR, pp. 14240–14249 (2021)

Chen, J., Li, B., Xue, X.: Scene text telescope: text-focused scene image super-resolution. In: CVPR, pp. 12021–12030 (2021)

Chen, J., Yu, H., Ma, J., Li, B., Xue, X.: Text gestalt: stroke-aware scene text image super-resolution. In: AAAI. vol. 36, pp. 285–293 (2022)

Chen, Y., Dai, X., Liu, M., Chen, D., Yuan, L., Liu, Z.: Dynamic convolution: attention over convolution kernels. In: CVPR, pp. 11030–11039 (2020)

Dong, C., Chen, C.L., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: ECCV, pp. 184–199 (2014)

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. IEEE TPAMI 38(2), 295–307 (2016)

Fang, S., Xie, H., Wang, Y., Mao, Z., Zhang, Y.: Read like humans: autonomous, bidirectional and iterative language modeling for scene text recognition. In: CVPR, pp. 7098–7107 (2021)

Guo, H., Dai, T., Meng, G., Xia, S.T.: Towards robust scene text image super-resolution via explicit location enhancement. In: IJCAI, pp. 782–790 (2023)

Jaderberg, M., Simonyan, K., Vedaldi, A., Zisserman, A.: Reading text in the wild with convolutional neural networks. IJCV 116(1), 1–20 (2016)

Ledig, C., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: CVPR, pp. 4681–4690 (2017)

Liang, J., Zeng, H., Zhang, L.: Details or artifacts: a locally discriminative learning approach to realistic image super-resolution. In: CVPR, pp. 5647–5656 (2022)

Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: CVPRW, pp. 1132–1140 (2017)

Luo, C., Jin, L., Sun, Z.: MORAN: a multi-object rectified attention network for scene text recognition. PR 90, 109–118 (2019)

Ma, J., Guo, S., Zhang, L.: Text prior guided scene text image super-resolution. TIP 32, 1341–1353 (2023)

Ma, J., Liang, Z., Zhang, L.: A text attention network for spatial deformation robust scene text image super-resolution. In: CVPR, pp. 5901–5910 (2022)

Na, B., Kim, Y., Park, S.: Multi-modal text recognition networks: interactive enhancements between visual and semantic features. In: ECCV, pp. 446–463. Springer (2022)

Niu, B., et al.: Single image super-resolution via a holistic attention network. In: ECCV, pp. 191–207. Springer (2020)

Peyrard, C., Baccouche, M., Mamalet, F., Garcia, C.: ICDAR2015 competition on text image super-resolution. In: ICDAR, pp. 1201–1205 (2015)

Qi, Y., He, Y., Qi, X., Zhang, Y., Yang, G.: Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In: ICCV, pp. 6070–6079 (2023)

Shi, B., Bai, X., Yao, C.: An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE TPAMI 39(11), 2298–2304 (2016)

Shi, B., Yang, M., Wang, X., Lyu, P., Yao, C., Bai, X.: ASTER: an attentional scene text recognizer with flexible rectification. IEEE TPAMI 41(9), 2035–2048 (2018)

Shi, W., et al.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: CVPR, pp. 1874–1883 (2016)

Vaswani, A., et al.: Attention is all you need. In: NeurIPS, vol. 30 (2017)

Wang, W., et al.: Scene text image super-resolution in the wild. In: ECCV, pp. 650–666. Springer International Publishing, Cham (2020)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. TIP 13(4), 600–612 (2004)

Zhang, W., et al.: Pixel adapter: a graph-based post-processing approach for scene text image super-resolution. In: ACM MM, pp. 2168–2179 (2023)

Zhang, Y., Tian, Y., Kong, Y., Zhong, B., Fu, Y.: Residual dense network for image super-resolution. In: CVPR, pp. 2472–2481 (2018)

Zhao, C., et al.: Scene text image super-resolution via parallelly contextual attention network. In: ACM MM, pp. 2908–2917 (2021)

Zhao, G., Lin, J., Zhang, Z., Ren, X., Su, Q., Sun, X.: Explicit sparse transformer: Concentrated attention through explicit selection (2019)

Zhao, M., Wang, M., Bai, F., Li, B., Wang, J., Zhou, S.: C3-STISR: scene text image super-resolution with triple clues. In: IJCAI, pp. 1707–1713 (2022)

Zhou, Y., Gao, L., Tang, Z., Wei, B.: Recognition-guided diffusion model for scene text image super-resolution. In: ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2940–2944 (2024). https://doi.org/10.1109/ICASSP48485.2024.10447585

Zhu, X., Guo, K., Fang, H., Ding, R., Wu, Z., Schaefer, G.: Gradient-based graph attention for scene text image super-resolution. In: AAAI. vol. 37, pp. 3861–3869 (2023)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Li, M., Zhuang, Z., Xu, S., Su, F. (2025). MPGTSRN: Scene Text Image Super-Resolution Guided by Multiple Visual-Semantic Prompts. In: Antonacopoulos, A., Chaudhuri, S., Chellappa, R., Liu, CL., Bhattacharya, S., Pal, U. (eds) Pattern Recognition. ICPR 2024. Lecture Notes in Computer Science, vol 15332. Springer, Cham. https://doi.org/10.1007/978-3-031-78125-4_22

Download citation

DOI: https://doi.org/10.1007/978-3-031-78125-4_22

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-78124-7

Online ISBN: 978-3-031-78125-4

eBook Packages: Computer ScienceComputer Science (R0)