Abstract

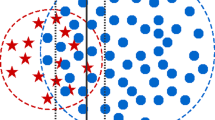

This paper improves upon existing data pruning methods for image classification by introducing a novel pruning metric and a pruning procedure based on importance sampling. The proposed metric explicitly accounts for data separability, data integrity, and model uncertainty, while the sampling procedure adapts to the pruning ratio and considers both intra-class and inter-class separation to further enhance the effectiveness of pruning. Furthermore, the sampling method can readily be applied to other pruning metrics to improve their performance. Overall, the proposed approach scales well to high pruning ratios and generalizes better across different classification models, as demonstrated by experiments on four benchmark datasets, including a fine-grained classification scenario.
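To make the general idea of importance-sampling-based pruning concrete, the following is a minimal sketch and not the paper's actual metric or procedure. It assumes a per-sample importance score is already available (the `scores` array is hypothetical and could come from any pruning metric) and simply draws a class-balanced subset with probability weighted by that score. The function name `prune_by_importance_sampling` and its parameters are illustrative assumptions. The adaptivity to the pruning ratio and the intra-class and inter-class separation terms described in the abstract are not modeled here.

```python
import numpy as np


def prune_by_importance_sampling(scores, labels, prune_ratio, seed=0):
    """Return sorted indices of the samples kept after pruning.

    scores: non-negative per-sample importance values (hypothetical metric).
    labels: integer class label per sample.
    prune_ratio: fraction of each class to discard, in [0, 1).
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    kept = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        # Keep at least one sample per class so no class disappears.
        n_keep = max(1, int(round(len(idx) * (1.0 - prune_ratio))))
        # A small epsilon keeps every sample selectable even with a zero score.
        weights = scores[idx] + 1e-12
        probs = weights / weights.sum()
        # Draw without replacement, with probability weighted by the scores.
        kept.append(rng.choice(idx, size=n_keep, replace=False, p=probs))
    return np.sort(np.concatenate(kept))
```

Because the sampler only consumes a score array, any other pruning metric could be swapped in for `scores` without changing the selection code, which is the kind of decoupling between metric and sampling that the abstract alludes to.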

S. Grosz—This author’s contribution was performed as an intern with Amazon.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Grosz, S. et al. (2025). Data Pruning via Separability, Integrity, and Model Uncertainty-Aware Importance Sampling. In: Antonacopoulos, A., Chaudhuri, S., Chellappa, R., Liu, CL., Bhattacharya, S., Pal, U. (eds) Pattern Recognition. ICPR 2024. Lecture Notes in Computer Science, vol 15302. Springer, Cham. https://doi.org/10.1007/978-3-031-78166-7_26

DOI: https://doi.org/10.1007/978-3-031-78166-7_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-78165-0

Online ISBN: 978-3-031-78166-7

eBook Packages: Computer Science (R0)