Abstract

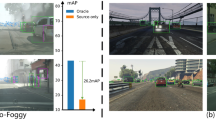

Visual object tracking is a popular research area in computer vision because of its diverse applications. Despite the impressive progress of numerous state-of-the-art trackers on large-scale datasets, visual object tracking at nighttime remains challenging because of conditions such as low light (brightness), lack of contrast, and very low variability among feature distributions. In addition, the lack of paired (labeled) nighttime tracking data makes supervised learning-based modeling infeasible. Unsupervised domain adaptation-based tracking can address this issue. In this work, we propose static image style transfer-based Reconstruction Assisted Domain Adaptation (RADA) with adversarial learning for nighttime object tracking. The main contributions of this work are twofold. First, reconstruction-assisted adaptation is proposed to extract domain-invariant features and to achieve both input-level and feature-level adaptation. Second, static style transfer is used to generate synthetic paired images (video frames) for supervised nighttime modeling in visual object tracking. Multi-level style adversarial alignment adapts between the styled source domain and the target domain without requiring pseudo labels. RADA achieves feature-level and input-level adaptation without requiring an external model for low-light image enhancement. Static style transfer avoids negative domain transfer and enables domain transfer learning on true labels. The effectiveness of RADA is validated on six benchmark datasets. RADA achieves state-of-the-art results on two benchmark nighttime adaptation datasets, with improvements ranging from 3.7% to 11.4%. RADA also attains state-of-the-art results on three other nighttime datasets without target adaptation. The tracking results and model weights are available at https://github.com/chouhan-avinash/RADA/.
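The abstract does not say which style transfer RADA uses, so the following Python sketch substitutes a simple per-channel mean/standard-deviation match against a fixed night reference image, applied once and offline (our reading of "static"). The helper names `static_style_transfer` and `make_synthetic_pair` are hypothetical. What the sketch is meant to show is why the resulting pairs support supervised training: the transfer changes appearance but not geometry, so the daytime bounding box carries over unchanged as a true label.

```python
import numpy as np


def static_style_transfer(day_frame: np.ndarray, night_ref: np.ndarray,
                          eps: float = 1e-6) -> np.ndarray:
    """Re-style a daytime frame toward a fixed nighttime reference.

    Stand-in (assumption): per-channel mean/std matching in RGB space.
    RADA's actual style-transfer method is not specified in the abstract.
    """
    day = day_frame.astype(np.float64)
    night = night_ref.astype(np.float64)
    # Per-channel statistics over height and width (H x W x C layout).
    mu_day, sd_day = day.mean(axis=(0, 1)), day.std(axis=(0, 1))
    mu_night, sd_night = night.mean(axis=(0, 1)), night.std(axis=(0, 1))
    # Remove the daytime statistics, then impose the nighttime ones.
    styled = (day - mu_day) / (sd_day + eps) * sd_night + mu_night
    return np.clip(styled, 0, 255).astype(np.uint8)


def make_synthetic_pair(day_frame, box, night_ref):
    """Hypothetical helper: build one (night-styled frame, label) pair.

    Only pixel appearance changes, so the daytime box (x, y, w, h)
    is reused unchanged as a true label for the styled frame.
    """
    return static_style_transfer(day_frame, night_ref), box


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    day = rng.integers(100, 256, size=(64, 64, 3)).astype(np.uint8)     # bright frame
    night_ref = rng.integers(0, 40, size=(64, 64, 3)).astype(np.uint8)  # dark style reference
    styled, label = make_synthetic_pair(day, (10, 12, 20, 18), night_ref)
    print(day.mean(), styled.mean(), label)
```

Because the style reference is fixed and the transfer is computed once, the styled source set is a static dataset with the original annotations, rather than a target-domain set that would need pseudo labels.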
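RADA's exact losses and weights are not given in the abstract. As an illustration only, the sketch below evaluates one common way to realize multi-level adversarial alignment (one domain discriminator per feature level, with the feature extractor trained on flipped domain labels) together with an L1 reconstruction term. The linear discriminator on pooled features, the label convention (styled source = 1, night target = 0), the NHWC feature layout, and the weights `lam_rec` and `lam_adv` are all assumptions; in practice these terms would be minimized with an autodiff framework, whereas NumPy here only computes their values.

```python
import numpy as np


def bce(logits: np.ndarray, target: float) -> float:
    """Numerically stable binary cross-entropy on raw logits."""
    return float(np.mean(np.maximum(logits, 0) - logits * target
                         + np.log1p(np.exp(-np.abs(logits)))))


def discriminator_logits(feat: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """Hypothetical linear domain discriminator on globally pooled features.

    feat has shape (N, H, W, C); the result has shape (N,).
    """
    pooled = feat.mean(axis=(1, 2))  # (N, C)
    return pooled @ w + b


def multilevel_adversarial_losses(src_feats, tgt_feats, discs):
    """Sum discriminator and feature-extractor losses over feature levels."""
    d_loss, g_loss = 0.0, 0.0
    for fs, ft, (w, b) in zip(src_feats, tgt_feats, discs):
        ls = discriminator_logits(fs, w, b)
        lt = discriminator_logits(ft, w, b)
        # Discriminator: label styled source as 1 and night target as 0.
        d_loss += 0.5 * (bce(ls, 1.0) + bce(lt, 0.0))
        # Feature extractor: flipped label, so target features look like source.
        g_loss += bce(lt, 1.0)
    return d_loss, g_loss


def reconstruction_loss(x: np.ndarray, x_hat: np.ndarray) -> float:
    """L1 reconstruction error between an input and its decoded version."""
    return float(np.mean(np.abs(x - x_hat)))


def total_objective(l_track, l_rec, l_adv, lam_rec=1.0, lam_adv=0.01):
    """Weighted sum of tracking, reconstruction, and adversarial terms."""
    return l_track + lam_rec * l_rec + lam_adv * l_adv
```

Placing a discriminator at several levels, rather than only at the final embedding, pushes both shallow (style-sensitive) and deep features toward domain invariance, which is one plausible reading of "alignment at multiple levels".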




Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Chouhan, A., Chandak, M., Sur, A., Chutia, D., Aggarwal, S.P. (2025). RADA: Reconstruction Assisted Domain Adaptation for Nighttime Aerial Tracking. In: Antonacopoulos, A., Chaudhuri, S., Chellappa, R., Liu, CL., Bhattacharya, S., Pal, U. (eds) Pattern Recognition. ICPR 2024. Lecture Notes in Computer Science, vol 15310. Springer, Cham. https://doi.org/10.1007/978-3-031-78192-6_21


DOI: https://doi.org/10.1007/978-3-031-78192-6_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-78191-9

Online ISBN: 978-3-031-78192-6

eBook Packages: Computer Science, Computer Science (R0)