Abstract

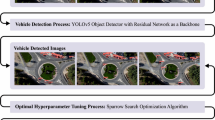

This paper addresses the critical challenge of vehicle detection in the harsh winter conditions of the Nordic region, which are characterized by heavy snowfall, reduced visibility, and low light. Because they are susceptible to environmental distortions and occlusions, traditional vehicle detection methods have struggled in these adverse conditions. Advanced deep learning architectures have shown promise, yet the unique difficulties of detecting vehicles in Nordic winters remain inadequately addressed. This study uses the Nordic Vehicle Dataset (NVD), which contains UAV (unmanned aerial vehicle) images captured in northern Sweden, to evaluate the performance of state-of-the-art vehicle detection algorithms under challenging weather conditions. Our methodology includes a comprehensive evaluation of single-stage, two-stage, segmentation-based, and transformer-based detectors on the NVD. We propose a series of enhancements tailored to each detection framework, including data augmentation, hyperparameter tuning, transfer learning, and, specifically, the implementation and enhancement of the Detection Transformer (DETR). In addition, a novel architecture is proposed that leverages self-attention mechanisms to model long-range dependencies and complex scene contexts, using MSER (maximally stable extremal regions) and RST (Rough Set Theory) to identify and filter candidate regions. Our findings not only highlight the limitations of current detection systems in the Nordic environment but also offer promising directions for improving the robustness and accuracy of these algorithms for vehicle detection amid the complexities of winter landscapes. The code and the dataset are available at https://nvd.ltu-ai.dev.
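The abstract does not spell out how MSER and RST are combined, so the following is only a rough illustration under one plausible reading, not the authors' method. MSER proposes a dark candidate region (a vehicle against bright snow) by choosing the intensity threshold at which the region's area changes least, using the stability score q(t) = (|R(t+Δ)| - |R(t-Δ)|) / |R(t)| along a single seed's branch of the component tree. A rough-set test then keeps the region only if it is well approximated by a partition of the image into square blocks (granules), measured by Pawlak roughness 1 - |lower approximation| / |upper approximation|. The seed pixel, block size, `max_area`, the `max_roughness` cutoff, and all function names are hypothetical; a full MSER implementation (for example OpenCV's) builds the whole component tree rather than following one seed.

```python
from collections import deque
from typing import FrozenSet, List, Optional, Set, Tuple

Pixel = Tuple[int, int]
Image = List[List[int]]  # 8-bit grayscale, indexed img[row][col]


def dark_component(img: Image, seed: Pixel, t: int) -> Set[Pixel]:
    """4-connected component containing `seed` among pixels with intensity <= t."""
    rows, cols = len(img), len(img[0])
    if img[seed[0]][seed[1]] > t:
        return set()
    region, queue = {seed}, deque([seed])
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region and img[nr][nc] <= t):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def most_stable_region(img: Image, seed: Pixel, delta: int = 1,
                       max_area: Optional[int] = None
                       ) -> Tuple[Optional[int], float, Set[Pixel]]:
    """Threshold minimising the MSER stability score
    q(t) = (|R(t+delta)| - |R(t-delta)|) / |R(t)| along the seed's branch."""
    areas = [len(dark_component(img, seed, t)) for t in range(256)]
    best_t, best_q = None, float("inf")
    for t in range(delta, 256 - delta):
        if areas[t] == 0 or (max_area is not None and areas[t] > max_area):
            continue  # seed not yet inside a region, or region too large
        q = (areas[t + delta] - areas[t - delta]) / areas[t]
        if q < best_q:
            best_t, best_q = t, q
    region = dark_component(img, seed, best_t) if best_t is not None else set()
    return best_t, best_q, region


def block_granules(rows: int, cols: int, block: int) -> List[FrozenSet[Pixel]]:
    """Partition the pixel grid into block x block granules (equivalence classes)."""
    return [frozenset((r, c)
                      for r in range(r0, min(r0 + block, rows))
                      for c in range(c0, min(c0 + block, cols)))
            for r0 in range(0, rows, block)
            for c0 in range(0, cols, block)]


def roughness(region: Set[Pixel], granules: List[FrozenSet[Pixel]]) -> float:
    """Pawlak roughness 1 - |lower| / |upper| of `region` w.r.t. the granules."""
    lower = sum(len(g) for g in granules if g <= region)  # granules fully inside
    upper = sum(len(g) for g in granules if g & region)   # granules touching it
    return 1.0 - lower / upper if upper else 1.0


def keep_region(region: Set[Pixel], rows: int, cols: int,
                block: int = 2, max_roughness: float = 0.5) -> bool:
    """Hypothetical filter: accept a candidate only if its roughness is low."""
    granules = block_granules(rows, cols, block)
    return bool(region) and roughness(region, granules) <= max_roughness


if __name__ == "__main__":
    img = [[200] * 6 for _ in range(6)]  # bright "snow"
    for r, c in ((2, 2), (2, 3), (3, 2), (3, 3)):
        img[r][c] = 10  # dark 2x2 "vehicle"
    t, q, region = most_stable_region(img, seed=(2, 2), max_area=20)
    print(t, q, sorted(region), keep_region(region, 6, 6))
    # prints: 11 0.0 [(2, 2), (2, 3), (3, 2), (3, 3)] True
```

In this sketch, scattered snow speckle tends to touch many granules without filling any of them, so its lower approximation is small and its roughness is high, which is the intuition behind using roughness as a filter here.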

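Self-attention is what lets a transformer detector such as DETR relate every image position to every other one regardless of distance, which is the sense in which it models long-range dependencies. The minimal pure-Python sketch below shows single-head scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, over a few feature vectors. The toy features and identity projection matrices are assumptions for illustration, and the paper's enhanced DETR is not reproduced here; DETR itself uses multi-head attention over a flattened CNN feature map with positional encodings.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)  # every position scores every other position
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical features for 3 positions
    eye = [[1.0, 0.0], [0.0, 1.0]]  # identity projections (assumption)
    out, w = self_attention(tokens, eye, eye, eye)
    print([round(sum(row), 6) for row in w])  # each row of weights sums to 1
```

Because each row of `weights` spans all positions, a position can draw on context from anywhere in the input; the price is that the cost grows quadratically with the number of positions.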
Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Mokayed, H. et al. (2025). Vehicle Detection Performance in Nordic Region. In: Antonacopoulos, A., Chaudhuri, S., Chellappa, R., Liu, CL., Bhattacharya, S., Pal, U. (eds) Pattern Recognition. ICPR 2024. Lecture Notes in Computer Science, vol 15322. Springer, Cham. https://doi.org/10.1007/978-3-031-78312-8_5

DOI: https://doi.org/10.1007/978-3-031-78312-8_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-78311-1

Online ISBN: 978-3-031-78312-8

eBook Packages: Computer Science, Computer Science (R0)