Abstract

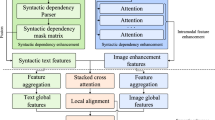

Multimodal generative models have demonstrated promising capabilities for bridging the semantic gap between visual and textual modalities, especially in the context of multimodal summarization. Most of the existing methods align the visual and textual information by self-attention mechanism. However, those approaches will cause imbalances or discrepancies between different modalities when processing such text-heavy tasks. To address this challenge, our method introduces an innovative multimodal summarization method. We first propose a novel text-caption alignment mechanism, which considers the semantic association across modalities while maintaining the semantic information. Then, we introduce a document segmentation module with a salient information retrieval strategy to integrate the inherent semantic information across facet-aware semantic blocks, obtaining a more informative and readable textual output. Additionally, we leverage the generated text summary to optimize image selection, enhancing the consistency of the multimodal output. By incorporating the textual information in the image selection process, our method selects more relevant and representative visual content, further enhancing the quality of the multimodal summarization. Experimental results illustrate that our method outperforms existing methods by utilizing visual information to generate a better text-image summary and achieves higher ROUGE scores.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Cao, B., Araujo, A., Sim, J.: Unifying deep local and global features for image search. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XX 16, pp. 726–743. Springer (2020)

Chen, J., Zhuge, H.: Extractive text-image summarization using multi-modal RNN. In: 14th International Conference on Semantics, Knowledge and Grids, SKG 2018, Guangzhou, China, 12-14 September 2018, pp. 245–248. IEEE (2018). https://doi.org/10.1109/SKG.2018.00033

Cui, C., Liang, X., Wu, S., Li, Z.: Align vision-language semantics by multi-task learning for multi-modal summarization. Neural Comput. Appl. 1–14 (2024)

Devlin, J., Chang, M., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. In: Burstein, J., Doran, C., Solorio, T. (eds.) Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2-7 June 2019, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics (2019). https://doi.org/10.18653/v1/n19-1423

Ding, C., et al.: Learning aligned audiovisual representations for multimodal sentiment analysis. In: Ghosh, S., Dhall, A., Kollias, D., Goecke, R., Gedeon, T. (eds.) Proceedings of the 1st International Workshop on Multimodal and Responsible Affective Computing, MRAC 2023, Ottawa, ON, Canada, 29 October 2023, pp. 21–28. ACM (2023). https://doi.org/10.1145/3607865.3613184

Evangelopoulos, G., et al.: Multimodal saliency and fusion for movie summarization based on aural, visual, and textual attention. IEEE Trans. Multim. 15(7), 1553–1568 (2013). https://doi.org/10.1109/TMM.2013.2267205

Hearst, M.A.: Text tiling: segmenting text into multi-paragraph subtopic passages. Comput. Linguist. 23(1), 33–64 (1997)

La, T., Tran, Q., Tran, T., Tran, A., Dang-Nguyen, D., Dao, M.: Multimodal cheapfakes detection by utilizing image captioning for global context. In: Dao, M., Dang-Nguyen, D., Riegler, M. (eds.) ICDAR@ICMR 2022: Proceedings of the 3rd ACM Workshop on Intelligent Cross-Data Analysis and Retrieval, Newark, NJ, USA, 27–30 June 2022, pp. 9–16. ACM (2022). https://doi.org/10.1145/3512731.3534210

Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., Stoyanov, V., Zettlemoyer, L.: BART: denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In: Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R. (eds.) Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, 5–10 July 2020, pp. 7871–7880. Association for Computational Linguistics (2020). https://doi.org/10.18653/v1/2020.acl-main.703

Li, H., Zhu, J., Ma, C., Zhang, J., Zong, C.: Multi-modal summarization for asynchronous collection of text, image, audio and video. In: Palmer, M., Hwa, R., Riedel, S. (eds.) Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, EMNLP 2017, Copenhagen, Denmark, 9–11 September 2017, pp. 1092–1102. Association for Computational Linguistics (2017). https://doi.org/10.18653/v1/d17-1114

Li, H., Zhu, J., Ma, C., Zhang, J., Zong, C.: Read, watch, listen, and summarize: multi-modal summarization for asynchronous text, image, audio and video. IEEE Trans. Knowl. Data Eng. 31(5), 996–1009 (2019). https://doi.org/10.1109/TKDE.2018.2848260

Li, J., Li, D., Xiong, C., Hoi, S.C.H.: BLIP: bootstrapping language-image pre-training for unified vision-language understanding and generation. In: Chaudhuri, K., Jegelka, S., Song, L., Szepesvári, C., Niu, G., Sabato, S. (eds.) International Conference on Machine Learning, ICML 2022, 17–23 July 2022, Baltimore, Maryland, USA. Proceedings of Machine Learning Research, vol. 162, pp. 12888–12900. PMLR (2022)

Liang, X., Li, J., Wu, S., Zeng, J., Jiang, Y., Li, M., Li, Z.: An efficient coarse-to-fine facet-aware unsupervised summarization framework based on semantic blocks. In: Calzolari, N. (eds.) Proceedings of the 29th International Conference on Computational Linguistics, COLING 2022, Gyeongju, Republic of Korea, 12–17 October 2022, pp. 6415–6425. International Committee on Computational Linguistics (2022)

Liang, Y., Meng, F., Wang, J., Xu, J., Chen, Y., Zhou, J.: D\({^2}\)tv: dual knowledge distillation and target-oriented vision modeling for many-to-many multimodal summarization. In: Bouamor, H., Pino, J., Bali, K. (eds.) Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023, pp. 14910–14922. Association for Computational Linguistics (2023)

Liu, Y., Lapata, M.: Text summarization with pretrained encoders. In: Inui, K., Jiang, J., Ng, V., Wan, X. (eds.) Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019, pp. 3728–3738. Association for Computational Linguistics (2019). https://doi.org/10.18653/v1/D19-1387

Pal, A., Wang, J., Wu, Y., Kant, K., Liu, Z., Sato, K.: Social media driven big data analysis for disaster situation awareness: a tutorial. IEEE Trans. Big Data 9(1), 1–21 (2023). https://doi.org/10.1109/TBDATA.2022.3158431

Palaskar, S., Libovický, J., Gella, S., Metze, F.: Multimodal abstractive summarization for how2 videos. In: Korhonen, A., Traum, D.R., Màrquez, L. (eds.) Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, pp. 6587–6596. Association for Computational Linguistics (2019). https://doi.org/10.18653/v1/p19-1659

Radford, A., et al.: Learning transferable visual models from natural language supervision. In: Meila, M., Zhang, T. (eds.) Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18–24 July 2021, Virtual Event. Proceedings of Machine Learning Research, vol. 139, pp. 8748–8763. PMLR (2021)

Ruan, Q., Ostendorff, M., Rehm, G.: Histruct+: Improving extractive text summarization with hierarchical structure information. In: Muresan, S., Nakov, P., Villavicencio, A. (eds.) Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022, pp. 1292–1308. Association for Computational Linguistics (2022). https://doi.org/10.18653/V1/2022.FINDINGS-ACL.102

Thuma, B.S., de Vargas, P.S., Garcia, C.M., de Souza Britto Jr., A., Barddal, J.P.: Benchmarking feature extraction techniques for textual data stream classification. In: International Joint Conference on Neural Networks, IJCNN 2023, Gold Coast, Australia, 18–23 June 2023, pp. 1–8. IEEE (2023). https://doi.org/10.1109/IJCNN54540.2023.10191369

Wu, Q., Shen, C., Wang, P., Dick, A.R., van den Hengel, A.: Image captioning and visual question answering based on attributes and external knowledge. IEEE Trans. Pattern Anal. Mach. Intell. 40(6), 1367–1381 (2018). https://doi.org/10.1109/TPAMI.2017.2708709

Yang, H., Zhao, Y., Qin, B.: Face-sensitive image-to-emotional-text cross-modal translation for multimodal aspect-based sentiment analysis. In: Goldberg, Y., Kozareva, Z., Zhang, Y. (eds.) Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022, pp. 3324–3335. Association for Computational Linguistics (2022). https://doi.org/10.18653/V1/2022.EMNLP-MAIN.219

Zhang, C., Zhang, Z., Li, J., Liu, Q., Zhu, H.: Ctnr: compress-then-reconstruct approach for multimodal abstractive summarization. In: International Joint Conference on Neural Networks, IJCNN 2021, Shenzhen, China, 18–22 July 2021, pp. 1–8. IEEE (2021). https://doi.org/10.1109/IJCNN52387.2021.9534082

Zhang, Z., Meng, X., Wang, Y., Jiang, X., Liu, Q., Yang, Z.: Unims: a unified framework for multimodal summarization with knowledge distillation. In: Thirty-Sixth AAAI Conference on Artificial Intelligence, AAAI 2022, 1 March 2022, pp. 11757–11764. AAAI Press (2022)

Zhang, Z., Shu, C., Chen, Y., Xiao, J., Zhang, Q., Lu, Z.: ICAF: iterative contrastive alignment framework for multimodal abstractive summarization. In: International Joint Conference on Neural Networks, IJCNN 2022, Padua, Italy, 18–23 July 2022, pp. 1–8. IEEE (2022). https://doi.org/10.1109/IJCNN55064.2022.9892884

Zheng, H., Lapata, M.: Sentence centrality revisited for unsupervised summarization. In: Korhonen, A., Traum, D.R., Màrquez, L. (eds.) Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, pp. 6236–6247. Association for Computational Linguistics (2019). https://doi.org/10.18653/v1/p19-1628

Zhu, J., Li, H., Liu, T., Zhou, Y., Zhang, J., Zong, C.: MSMO: multimodal summarization with multimodal output. In: Riloff, E., Chiang, D., Hockenmaier, J., Tsujii, J. (eds.) Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, October 31 - November 4, 2018, pp. 4154–4164. Association for Computational Linguistics (2018). https://doi.org/10.18653/v1/d18-1448

Zhu, J., Zhou, Y., Zhang, J., Li, H., Zong, C., Li, C.: Multimodal summarization with guidance of multimodal reference. In: The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, New York, NY, USA, 7–12 February 2020, pp. 9749–9756. AAAI Press (2020)

Acknowledgements

This work was supported in part by the Beijing Municipal Science & Technology Commission under Grant Z231100001723002, and in part by the National Key Research and Development Program of China under Grant 2020YFB1406702-3, in part by the National Natural Science Foundation of China under Grant 61772575 and 62006257.

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Weng, Y., Ye, X., Xing, T., Liu, Z., Chaomurilige, Liu, X. (2025). Facet-Aware Multimodal Summarization via Cross-Modal Alignment. In: Antonacopoulos, A., Chaudhuri, S., Chellappa, R., Liu, CL., Bhattacharya, S., Pal, U. (eds) Pattern Recognition. ICPR 2024. Lecture Notes in Computer Science, vol 15319. Springer, Cham. https://doi.org/10.1007/978-3-031-78495-8_3

Download citation

DOI: https://doi.org/10.1007/978-3-031-78495-8_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-78494-1

Online ISBN: 978-3-031-78495-8

eBook Packages: Computer ScienceComputer Science (R0)