Abstract

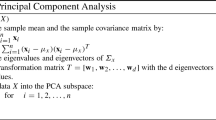

Many classification problems come with a hierarchy of classes, which can be exploited to perform hierarchical classification of test objects. The most basic form of hierarchical classification is cascade classification, which greedily traverses the hierarchy from the root to the predicted leaf. To perform cascade classification, a classifier must be trained for each node of the hierarchy. In large-scale problems, the number of features can be prohibitively large for the classifiers in the upper levels of the hierarchy. It is therefore desirable to reduce the dimensionality of the feature space at these levels. In this paper we examine the computational feasibility of the most common dimensionality reduction method, Principal Component Analysis (PCA), for this problem, as well as the computational benefits it provides for cascade classification and its effect on classification accuracy. Our experiments on two benchmark datasets with large hierarchies show that a certain version of PCA can be performed efficiently on such hierarchies, at the cost of a slight decrease in classifier accuracy. Furthermore, we show that PCA can be applied selectively at the top levels of the hierarchy to reduce this loss in accuracy. Finally, the reduced feature space provided by PCA facilitates the use of more costly and possibly more accurate classifiers, such as non-linear SVMs.
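The greedy root-to-leaf traversal described above can be illustrated with a minimal sketch. It is not the authors' implementation: the `Node` class, the `classify` and `project` callables, and the toy hierarchy are all hypothetical, and the `project` hook merely stands in for a per-node dimensionality reduction such as a fitted PCA transform applied only at the upper levels.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence

Vector = Sequence[float]


@dataclass
class Node:
    """One class in the hierarchy (hypothetical structure for illustration)."""
    label: str
    children: Dict[str, "Node"] = field(default_factory=dict)
    # Assumed per-node classifier: maps a feature vector to a child label.
    classify: Optional[Callable[[Vector], str]] = None
    # Assumed optional projection (a stand-in for a PCA transform at this node).
    project: Optional[Callable[[Vector], Vector]] = None


def cascade_predict(root: Node, x: Vector) -> List[str]:
    """Greedily follow one child per level until a leaf is reached."""
    node = root
    path = [node.label]
    while node.children:
        # Reduce the feature space only where a projection is defined.
        z = node.project(x) if node.project else x
        node = node.children[node.classify(z)]
        path.append(node.label)
    return path


# Toy hierarchy: root -> {science -> {physics, biology}, sports}
science = Node(
    "science",
    children={"physics": Node("physics"), "biology": Node("biology")},
    classify=lambda x: "physics" if x[1] > 0 else "biology",
)
root = Node(
    "root",
    children={"science": science, "sports": Node("sports")},
    # The top level sees a reduced space: only the first component is kept.
    project=lambda x: x[:1],
    classify=lambda z: "science" if z[0] > 0 else "sports",
)

path_a = cascade_predict(root, [1.0, -2.0])
path_b = cascade_predict(root, [-1.0, 5.0])
print(path_a)
print(path_b)
```

Because each step commits to a single child, an error at an upper level cannot be corrected further down. This is one reason the accuracy of the top-level classifiers, where the reduction is applied, matters.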

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Kosmopoulos, A., Paliouras, G., Androutsopoulos, I. (2014). The Effect of Dimensionality Reduction on Large Scale Hierarchical Classification. In: Kanoulas, E., et al. Information Access Evaluation. Multilinguality, Multimodality, and Interaction. CLEF 2014. Lecture Notes in Computer Science, vol 8685. Springer, Cham. https://doi.org/10.1007/978-3-319-11382-1_16

DOI: https://doi.org/10.1007/978-3-319-11382-1_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-11381-4

Online ISBN: 978-3-319-11382-1

eBook Packages: Computer Science (R0)