Abstract

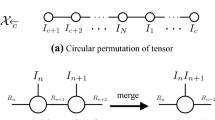

We propose singular value decomposition (SVD) algorithms for very large-scale matrices, based on a low-rank tensor decomposition called the tensor train (TT) format. The proposed algorithms compute several dominant singular values and the corresponding singular vectors of large-scale structured matrices given in a low-rank TT format. We formulate the SVD as a large-scale trace optimization problem, which the proposed methods reduce to a sequence of small-scale optimization problems. We show that the computational complexity of the proposed algorithms scales logarithmically with the matrix size if the TT-ranks are bounded. Numerical simulations on very large-scale Hilbert matrices demonstrate the effectiveness of the proposed methods.
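As a rough illustration of the two ideas in the abstract, the sketch below compresses a small Hilbert matrix into TT cores and checks the trace characterization of the dominant singular values. It is not the proposed algorithm: it uses the dense TT-SVD procedure of Oseledets on a matrix small enough to store in full, and it assumes the trace objective has the standard form trace(UᵀAV) with orthonormal columns in U and V. The helper names (`hilbert`, `matrix_to_qtt_tensor`, `tt_svd`, `tt_to_full`) and the choices `d = 6`, `K = 3` are hypothetical.

```python
import numpy as np

def hilbert(n):
    """Dense n-by-n Hilbert matrix, H[i, j] = 1 / (i + j + 1) with 0-based indices."""
    i = np.arange(n)
    return 1.0 / (i[:, None] + i[None, :] + 1.0)

def matrix_to_qtt_tensor(mat, d):
    """Reshape a 2^d-by-2^d matrix into a d-way tensor with modes of size 4,
    pairing the k-th row bit with the k-th column bit."""
    t = mat.reshape((2,) * (2 * d))
    order = [ax for k in range(d) for ax in (k, d + k)]
    return t.transpose(order).reshape((4,) * d)

def tt_svd(tensor, eps=1e-10):
    """Dense TT-SVD: sequential truncated SVDs of the unfoldings.
    Returns cores of shape (r_prev, n_k, r_next)."""
    shape = tensor.shape
    cores, rank = [], 1
    unfolding = tensor.reshape(rank * shape[0], -1)
    for k in range(len(shape) - 1):
        u, s, vt = np.linalg.svd(unfolding, full_matrices=False)
        # Keep singular values above a relative threshold (illustrative choice).
        new_rank = max(1, int(np.sum(s > eps * s[0])))
        cores.append(u[:, :new_rank].reshape(rank, shape[k], new_rank))
        unfolding = (s[:new_rank, None] * vt[:new_rank]).reshape(
            new_rank * shape[k + 1], -1)
        rank = new_rank
    cores.append(unfolding.reshape(rank, shape[-1], 1))
    return cores

def tt_to_full(cores):
    """Contract TT cores back into the full tensor (only feasible for small sizes)."""
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full.squeeze(axis=(0, -1))

# --- TT compression of a 64-by-64 Hilbert matrix (d = 6 is an arbitrary small size) ---
d = 6
H = hilbert(2 ** d)
T = matrix_to_qtt_tensor(H, d)
cores = tt_svd(T)
ranks = [c.shape[2] for c in cores[:-1]]
rel_err = np.linalg.norm(tt_to_full(cores) - T) / np.linalg.norm(T)
tt_params = sum(c.size for c in cores)
print("TT-ranks:", ranks, "relative error:", rel_err)
print("entries stored in TT cores:", tt_params, "dense entries:", H.size)

# --- Trace characterization of the K dominant singular values ---
K = 3
U, s, Vt = np.linalg.svd(H)
# With U_K, V_K the leading singular vectors, trace(U_K^T H V_K) = s_1 + ... + s_K.
trace_opt = np.trace(U[:, :K].T @ H @ Vt[:K].T)
# Any other pair of orthonormal bases gives a value that is no larger.
rng = np.random.default_rng(0)
Q1, _ = np.linalg.qr(rng.standard_normal((H.shape[0], K)))
Q2, _ = np.linalg.qr(rng.standard_normal((H.shape[0], K)))
trace_rand = np.trace(Q1.T @ H @ Q2)
print("optimal trace:", trace_opt, "sum of top-K singular values:", s[:K].sum())
print("trace for random orthonormal bases:", trace_rand)
```

The sketch forms the full matrix and runs dense SVDs, so it only works at toy sizes. The methods described in the abstract instead operate on the TT cores directly, which is what allows the cost to grow with the number of cores rather than with the number of matrix entries when the TT-ranks stay bounded.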

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Lee, N., Cichocki, A. (2014). Big Data Matrix Singular Value Decomposition Based on Low-Rank Tensor Train Decomposition. In: Zeng, Z., Li, Y., King, I. (eds) Advances in Neural Networks – ISNN 2014. ISNN 2014. Lecture Notes in Computer Science, vol 8866. Springer, Cham. https://doi.org/10.1007/978-3-319-12436-0_14

DOI: https://doi.org/10.1007/978-3-319-12436-0_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-12435-3

Online ISBN: 978-3-319-12436-0

eBook Packages: Computer Science; Computer Science (R0)