Abstract

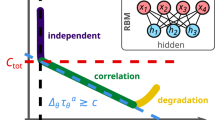

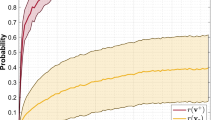

Evolution strategies have been shown to deliver state-of-the-art performance on a range of optimisation problems. The efficiency of such an algorithm largely depends on its ability to build an adequate meta-model of the function being optimised. This paper proposes a novel algorithm, RBM-ES, that uses a computationally efficient restricted Boltzmann machine (RBM) to maintain the meta-model. We demonstrate that the algorithm is able to adapt its model to complex multidimensional landscapes. Furthermore, we compare the proposed algorithm with state-of-the-art algorithms such as CMA-ES on different tasks and demonstrate that RBM-ES can achieve good performance.
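
The abstract does not say how the RBM meta-model is trained or how it is used to produce new candidates. One plausible, purely hypothetical reading, in the spirit of estimation-of-distribution methods such as PBIL, is to fit a Gaussian-Bernoulli RBM to the best-ranked candidates with one-step contrastive divergence (CD-1) and then draw the next population from it by Gibbs sampling. The sketch below illustrates only that reading: the names `GaussianBernoulliRBM` and `rbm_es_sketch`, the per-generation standardisation of the elites, and every hyperparameter are assumptions, not details taken from RBM-ES.

```python
import math
import random


def sigmoid(x):
    # Overflow-safe logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class GaussianBernoulliRBM:
    """Hypothetical RBM: unit-variance Gaussian visible units, binary hidden units."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases (means of the Gaussians)
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        # p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i W_ij)
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_mean(self, h):
        # E[v_i | h] = b_i + sum_j W_ij h_j
        return [self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h)))
                for i in range(len(self.b))]

    def bernoulli(self, probs):
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def cd1_update(self, batch, lr):
        # One contrastive-divergence (CD-1) step averaged over the batch.
        nv, nh, n = len(self.b), len(self.c), len(batch)
        dW = [[0.0] * nh for _ in range(nv)]
        db, dc = [0.0] * nv, [0.0] * nh
        for v0 in batch:
            h0p = self.hidden_probs(v0)
            v1 = self.visible_mean(self.bernoulli(h0p))  # mean-field reconstruction
            h1p = self.hidden_probs(v1)
            for i in range(nv):
                db[i] += v0[i] - v1[i]
                for j in range(nh):
                    dW[i][j] += v0[i] * h0p[j] - v1[i] * h1p[j]
            for j in range(nh):
                dc[j] += h0p[j] - h1p[j]
        for i in range(nv):
            self.b[i] += lr * db[i] / n
            for j in range(nh):
                self.W[i][j] += lr * dW[i][j] / n
        for j in range(nh):
            self.c[j] += lr * dc[j] / n

    def sample(self, v):
        # One block-Gibbs step: h ~ p(h | v), then v' ~ N(b + W h, I).
        h = self.bernoulli(self.hidden_probs(v))
        return [m + self.rng.gauss(0.0, 1.0) for m in self.visible_mean(h)]


def rbm_es_sketch(f, x0, sigma0=1.0, pop=20, n_elite=5, n_hidden=4,
                  generations=40, epochs=10, lr=0.01, seed=0):
    """Hypothetical EDA-style loop with an RBM search model; not the paper's RBM-ES."""
    rng = random.Random(seed)
    dim = len(x0)
    rbm = GaussianBernoulliRBM(dim, n_hidden, rng)  # kept across generations
    population = [[x + sigma0 * rng.gauss(0.0, 1.0) for x in x0] for _ in range(pop)]
    best_x, best_f = None, float("inf")
    for _ in range(generations):
        scored = sorted(((f(x), x) for x in population), key=lambda t: t[0])
        if scored[0][0] < best_f:
            best_f, best_x = scored[0]
        elites = [x for _, x in scored[:n_elite]]
        # Standardise elites per dimension so the unit-variance RBM can fit them.
        mean = [sum(e[i] for e in elites) / n_elite for i in range(dim)]
        std = [max(math.sqrt(sum((e[i] - mean[i]) ** 2 for e in elites) / n_elite), 1e-12)
               for i in range(dim)]
        data = [[(e[i] - mean[i]) / std[i] for i in range(dim)] for e in elites]
        for _ in range(epochs):
            rbm.cd1_update(data, lr)
        # Sample the next population from the RBM and map it back to search space.
        population = []
        for _ in range(pop):
            v = rbm.sample(rng.choice(data))
            population.append([mean[i] + std[i] * v[i] for i in range(dim)])
    return best_x, best_f
```

For example, `rbm_es_sketch(lambda x: sum(t * t for t in x), [3.0] * 5)` minimises a 5-dimensional sphere function. Standardising the elites each generation lets a unit-variance RBM model their shape, while the elite `mean` and `std` act as an implicit step size; the sketch has no explicit step-size control or restart strategy, so it may converge prematurely on harder landscapes.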




Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Makukhin, K. (2014). Evolution Strategies with an RBM-Based Meta-Model. In: Kim, Y.S., Kang, B.H., Richards, D. (eds) Knowledge Management and Acquisition for Smart Systems and Services. PKAW 2014. Lecture Notes in Computer Science, vol 8863. Springer, Cham. https://doi.org/10.1007/978-3-319-13332-4_20


DOI: https://doi.org/10.1007/978-3-319-13332-4_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-13331-7

Online ISBN: 978-3-319-13332-4

eBook Packages: Computer Science, Computer Science (R0)