Abstract

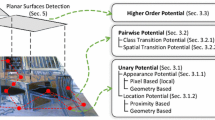

RGB-D sensors are popular in the computer vision community, especially for scene understanding, semantic scene labeling, and segmentation. However, most methods for these problems rely only on the reliable input depth measurements and discard the unreliable ones. This paper studies how reliable depth values can be used to correct the unreliable ones, and how to complete (or extend) the available depth data beyond the raw measurements of the sensor (i.e., infer depth at pixels with unknown depth values), given a prior model of the 3D scene. We consider piecewise planar environments, since many indoor scenes with man-made objects can be modeled as such. We propose a framework that uses the RGB-D sensor's noise profile to adaptively and robustly fit plane segments (e.g., floor and ceiling) and to iteratively complete the depth map, when possible. Depth completion is formulated as a discrete labeling problem (MRF) with hard constraints and is solved efficiently using graph cuts. To regularize this problem, we exploit 3D and appearance cues that encourage pixels to take on depth values that are consistent in 3D with the piecewise planar assumption. Extensive experiments on a new, large-scale, and challenging dataset show that our approach produces depth maps that are more accurate than those recorded by the RGB-D sensor and contain 20% more depth values. Additional experiments on the NYUv2 dataset show that our method generates more 3D-aware depth maps. The generated depth maps can also be used to improve the performance of a state-of-the-art RGB-D SLAM method.
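To make the geometric idea in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It fits a single plane with RANSAC using a depth-dependent inlier threshold, then fills a missing depth value by intersecting the pixel's viewing ray with that plane. The quadratic noise model, the constant `k`, the 3-sigma threshold, and all function names are assumptions made for illustration; the MRF labeling and graph-cut optimization described above are not shown.

```python
import random

def backproject(u, v, z, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth z to a 3D point."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)

def plane_from_points(p, q, r):
    """Return (n, d) with n . x + d = 0 through three points, or None if collinear."""
    a = [q[i] - p[i] for i in range(3)]
    b = [r[i] - p[i] for i in range(3)]
    n = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    n = tuple(c / norm for c in n)
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d

def noise_sigma(z, k=0.003):
    """Assumed noise model: standard deviation grows quadratically with depth."""
    return k * z * z

def fit_plane_ransac(points, iters=200, seed=0):
    """RANSAC plane fit whose inlier threshold adapts to each point's depth."""
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(iters):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        count = sum(
            1 for p in points
            if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d)
            <= 3.0 * noise_sigma(p[2])
        )
        if count > best_count:
            best, best_count = model, count
    return best

def complete_depth(u, v, plane, fx, fy, cx, cy):
    """Depth where the viewing ray of pixel (u, v) meets the plane, else None."""
    n, d = plane
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    denom = sum(n[i] * ray[i] for i in range(3))
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the plane
    z = -d / denom
    return z if z > 0 else None  # reject intersections behind the camera
```

In this sketch, far-away points tolerate larger residuals than nearby ones, which is one simple way to let a sensor noise profile drive the fit. A full pipeline would fit several plane segments and choose among candidate depths per pixel, which is where a labeling formulation such as the one in the abstract comes in.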



Acknowledgement

Research reported in this publication was supported by competitive research funding from King Abdullah University of Science and Technology (KAUST).


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Thabet, A.K., Lahoud, J., Asmar, D., Ghanem, B. (2015). 3D Aware Correction and Completion of Depth Maps in Piecewise Planar Scenes. In: Cremers, D., Reid, I., Saito, H., Yang, MH. (eds) Computer Vision -- ACCV 2014. ACCV 2014. Lecture Notes in Computer Science, vol 9004. Springer, Cham. https://doi.org/10.1007/978-3-319-16808-1_16


DOI: https://doi.org/10.1007/978-3-319-16808-1_16


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16807-4

Online ISBN: 978-3-319-16808-1

eBook Packages: Computer Science, Computer Science (R0)