Abstract

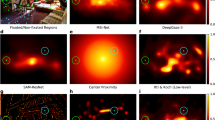

In this study, we investigate whether aggregating saliency maps makes it possible to outperform the best individual saliency models. This paper discusses various aggregation methods: six unsupervised and four supervised learning methods are tested on two existing eye-fixation datasets. Results show that a simple average of the top two saliency maps significantly outperforms the best saliency models. Considering more saliency models tends to decrease performance, even when robust aggregation methods are used. Concerning the supervised learning methods, we provide evidence that performance can be increased further, provided that an image similar to the input image can be found in the training dataset. Our results may have an impact on critical applications that require robust and relevant saliency maps.
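The best-performing scheme reported above, a simple average of the two top-ranked saliency maps, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes each map is min-max normalized to [0, 1] before averaging and that models are ranked by a per-model benchmark score supplied by the caller. The names `normalize` and `top_k_average` are hypothetical.

```python
from typing import Dict, List

# A saliency map as a 2D grid of non-negative values (assumption: all maps share one size).
SaliencyMap = List[List[float]]


def normalize(smap: SaliencyMap) -> SaliencyMap:
    """Min-max normalize a map to [0, 1]; a constant map becomes all zeros (assumed convention)."""
    flat = [v for row in smap for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in smap]
    return [[(v - lo) / (hi - lo) for v in row] for row in smap]


def top_k_average(maps: Dict[str, SaliencyMap],
                  scores: Dict[str, float],
                  k: int = 2) -> SaliencyMap:
    """Average the normalized maps of the k models with the highest benchmark scores."""
    if not 1 <= k <= len(maps):
        raise ValueError("k must be between 1 and the number of models")
    # Rank model names by their (hypothetical) benchmark score, best first.
    best = sorted(maps, key=lambda name: scores[name], reverse=True)[:k]
    normed = [normalize(maps[name]) for name in best]
    rows, cols = len(normed[0]), len(normed[0][0])
    # Pixel-wise unweighted mean over the k selected maps.
    return [[sum(m[r][c] for m in normed) / k for c in range(cols)]
            for r in range(rows)]
```

For instance, with three hypothetical models A, B and C scored 0.85, 0.82 and 0.60, `top_k_average(maps, scores, k=2)` fuses only A and B and ignores C. Normalizing first keeps a model with a larger raw value range from dominating the average.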



Acknowledgment

This work is supported in part by a Marie Curie International Incoming Fellowship within the 7th European Community Framework Programme under Grant No. 299202 and No. 911202, and in part by the National Natural Science Foundation of China under Grant No. 61171144. We thank Dr. Wanlei Zhao for his technical assistance in computing the VLAD scores.


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Le Meur, O., Liu, Z. (2015). Saliency Aggregation: Does Unity Make Strength?. In: Cremers, D., Reid, I., Saito, H., Yang, MH. (eds) Computer Vision -- ACCV 2014. ACCV 2014. Lecture Notes in Computer Science, vol 9006. Springer, Cham. https://doi.org/10.1007/978-3-319-16817-3_2


DOI: https://doi.org/10.1007/978-3-319-16817-3_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16816-6

Online ISBN: 978-3-319-16817-3

eBook Packages: Computer Science, Computer Science (R0)