Abstract

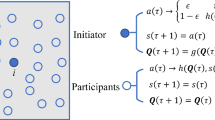

Many real-world scenarios can be modelled as multi-agent systems, in which multiple autonomous decision makers interact in a single environment. The complex and dynamic nature of such interactions prevents hand-crafting solutions for all possible scenarios, so learning is crucial. Studying the dynamics of multi-agent learning is essential for selecting and tuning the right learning algorithm for the task at hand. So far, analysis of these dynamics has been limited mainly to normal-form games or unstructured populations. However, many multi-agent systems are highly structured, complex networks in which agents interact only locally. Here, we study the dynamics of such networked interactions, using the well-known replicator dynamics of evolutionary game theory as a model for learning. Different learning algorithms are modelled by slightly altering the replicator equations. In particular, we investigate lenience as an enabler for cooperation. Moreover, we show how well-connected, stubborn agents can influence the learning outcome. Finally, we investigate the impact of structural network properties on the learning outcome, as well as the influence of mutation driven by exploration.
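For readers unfamiliar with the replicator dynamics mentioned above, the following is a minimal sketch of the standard single-population form, in which the share of an action grows in proportion to how much its expected payoff exceeds the population average. It covers only the unstructured baseline, not the networked or lenient variants studied in the paper. The function names, the Euler step size, and the stag hunt payoff values are illustrative assumptions and are not taken from the paper.

```python
def replicator_step(x, payoff, dt=0.01):
    """One Euler step of the single-population replicator dynamics.

    x      -- list of action shares (non-negative, summing to 1)
    payoff -- square payoff matrix, payoff[i][j] = payoff of action i vs j
    dt     -- integration step size (assumed value, chosen for illustration)
    """
    n = len(x)
    # Expected payoff of each action against the current population mix.
    fitness = [sum(payoff[i][j] * x[j] for j in range(n)) for i in range(n)]
    # Population-average payoff.
    average = sum(x[i] * fitness[i] for i in range(n))
    # Actions that beat the average grow; the others shrink.
    new_x = [x[i] + dt * x[i] * (fitness[i] - average) for i in range(n)]
    # Renormalise to guard against floating-point drift.
    total = sum(new_x)
    return [v / total for v in new_x]


def simulate(x0, payoff, steps=5000, dt=0.01):
    """Iterate the Euler step from the initial shares x0."""
    x = list(x0)
    for _ in range(steps):
        x = replicator_step(x, payoff, dt)
    return x


# Hypothetical stag hunt payoffs (action 0 = stag, action 1 = hare).
STAG_HUNT = [[4.0, 1.0],
             [3.0, 3.0]]

if __name__ == "__main__":
    print(simulate([0.8, 0.2], STAG_HUNT))  # starts above the mixed equilibrium
    print(simulate([0.5, 0.5], STAG_HUNT))  # starts below the mixed equilibrium
```

With these assumed payoffs the mixed equilibrium lies at a stag share of 2/3, so the outcome depends on the starting point: populations that start above that share drift toward all-stag, and those below it drift toward all-hare.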

Notes

1. We describe stateless Q-learning, as this version is suitable for the work presented in this paper.

2. Note that we exclude these fixed nodes from the results presented here; however, a similar trend can be observed if they are included.

Acknowledgements

We thank the anonymous reviewers as well as the audience at the Artificial Life and Intelligent Agents symposium for their helpful comments and suggestions.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Bloembergen, D., Caliskanelli, I., Tuyls, K. (2015). Learning in Networked Interactions: A Replicator Dynamics Approach. In: Headleand, C., Teahan, W., Ap Cenydd, L. (eds) Artificial Life and Intelligent Agents. ALIA 2014. Communications in Computer and Information Science, vol 519. Springer, Cham. https://doi.org/10.1007/978-3-319-18084-7_4

DOI: https://doi.org/10.1007/978-3-319-18084-7_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18083-0

Online ISBN: 978-3-319-18084-7

eBook Packages: Computer Science, Computer Science (R0)