Abstract

The shift to manycore processors and the resulting changes in modern computer architectures have made the development of optimal numerical routines extremely challenging. In this work, we target the development of numerical algorithms and implementations for the Xeon Phi coprocessor architecture. In particular, we examine and optimize the general and symmetric matrix-vector multiplication routines (gemv/symv), which are among the most heavily used linear algebra kernels in many important engineering and physics applications. We describe an approach to addressing the challenges of this problem, starting with algorithm design, performance analysis, and the programming model, and moving on to kernel optimization. By targeting low-level, easy-to-understand fundamental kernels, we aim to develop new optimization strategies that can be applied elsewhere on manycore coprocessors, and to show significant performance improvements over existing state-of-the-art implementations. In addition to the new optimization strategies, analysis, and optimal performance results, we show the significance of using these routines and strategies to accelerate higher-level numerical algorithms for the eigenvalue problem (EVP) and the singular value decomposition (SVD), which are themselves foundational for many important applications.
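For readers unfamiliar with the two kernels named above, the following is a minimal reference sketch of what gemv and symv compute. It is not the optimized Xeon Phi implementation described in the paper; the function names `gemv` and `symv_lower`, the list-of-rows storage, and the choice of the lower triangle are assumptions made only for illustration. The point it shows is that symv can read each off-diagonal element once and use it twice, so it touches roughly half the matrix data that gemv does.

```python
def gemv(a, x):
    """Reference y = A @ x for a dense matrix given as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def symv_lower(a, x):
    """y = A @ x for symmetric A, reading only the lower triangle (i >= j).

    Each stored element a[i][j] with i > j is loaded once and used twice:
    once for y[i] (as A[i][j]) and once for y[j] (as A[j][i]).
    """
    n = len(x)
    y = [0.0] * n
    for i in range(n):
        row = a[i]
        acc = row[i] * x[i]          # diagonal element contributes once
        for j in range(i):
            acc += row[j] * x[j]     # lower-triangle entry used for y[i]
            y[j] += row[j] * x[i]    # same entry reused as A[j][i] for y[j]
        y[i] += acc
    return y


# Small symmetric example. The upper triangle of the symv input is filled
# with NaN to show that symv_lower never reads it.
full = [[4.0, 1.0, 2.0],
        [1.0, 3.0, 0.5],
        [2.0, 0.5, 5.0]]
nan = float("nan")
lower_only = [[4.0, nan, nan],
              [1.0, 3.0, nan],
              [2.0, 0.5, 5.0]]
x = [1.0, 2.0, 3.0]

y_gemv = gemv(full, x)
y_symv = symv_lower(lower_only, x)
print(y_gemv, y_symv)
```

Both calls produce the same vector, even though `symv_lower` is given only the lower triangle. A tuned kernel would add blocking, vectorization, and multithreading on top of this access pattern.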


References

CUDA Cublas Library. https://developer.nvidia.com/cublas

MAGMA. http://icl.cs.utk.edu/magma/

Abdelfattah, A., Keyes, D., Ltaief, H.: Systematic approach in optimizing numerical memory-bound kernels on GPU. In: Caragiannis, I., et al. (eds.) Euro-Par Workshops 2012. LNCS, vol. 7640, pp. 207–216. Springer, Heidelberg (2013)

Agullo, E., Augonnet, C., Dongarra, J., Ltaief, H., Namyst, R., Thibault, S.,Tomov, S.: Faster, cheaper, better - a hybridization methodology to develop linear algebra software for GPUs.In: Mei, W., Hwu, W. (eds.) GPU Computing Gems, vol. 2. Morgan Kaufmann, September 2010

Anderson, E., Bai, Z., Bischof, C., Blackford, S.L., Demmel, J.W., Dongarra, J.J., Croz, J.D., Greenbaum, A., Hammarling, S., McKenney, A., Sorensen, D.C.: LAPACK User’s Guide, 3rd edn. Society for Industrial and Applied Mathematics, Philadelphia (1999)

Anderson, M.J., Sheffield, D., Keutzer, K.: A predictive model for solving small linear algebra problems in gpu registers. In: IEEE 26th International Parallel Distributed Processing Symposium (IPDPS) (2012)

Bosilca, G., Bouteiller, A., Danalis, A., Hérault, T., Lemarinier, P., Dongarra, J.: DAGuE: a generic distributed DAG engine for high performance computing. Parallel Comput. 38(1–2), 37–51 (2012)

Buttari, A., Langou, J., Kurzak, J., Dongarra, J.: A class of parallel tiled linear algebra algorithms for multicore architectures. Parallel Comput. 35(1), 38–53 (2009)

Chan, E., Quintana-Orti, E.S., Quintana-Orti, G., van de Geijn, R.: Supermatrix out-of-order scheduling of matrix operations for smp and multi-core architectures. In: Proceedings of the Nineteenth Annual ACM Symposium on Parallel Algorithms and Architectures, SPAA 2007, pp. 116–125. ACM, New York (2007)

Dong, T., Haidar, A., Luszczek, P., Harris, A., Tomov, S., Dongarra, J.: LU Factorization of small matrices: accelerating batched DGETRF on the GPU. In: Proceedings of 16th IEEE International Conference on High Performance and Communications (HPCC 2014), August 2014

Dong, T., Haidar, A., Tomov, S., Dongarra, J.: A fast batched Cholesky factorization on a GPU. In: Proceedings of 2014 International Conference on Parallel Processing (ICPP-2014), September 2014

Dong, T., Dobrev, V., Kolev, T., Rieben, R., Tomov, S., Dongarra, J.: A step towards energy efficient computing: Redesigning a hydrodynamic application on CPU-GPU. In: IEEE 28th International Parallel Distributed Processing Symposium (IPDPS) (2014)

Dongarra, J., Du Croz, J., Duff, I., Hammarling, S.: Algorithm 679: a set of level 3 basic linear algebra subprograms. ACM Trans. Math. Soft. 16(1), 18–28 (1990)

Dongarra, J., Gates, M., Haidar, A., Jia, Y., Kabir, K., Luszczek, P., Tomov, S.: Portable HPC programming on intel many-integrated-core hardware with MAGMA port to Xeon Phi. In: Wyrzykowski, R., Dongarra, J., Karczewski, K., Waśniewski, J. (eds.) PPAM 2013, Part I. LNCS, vol. 8384, pp. 571–581. Springer, Heidelberg (2014)

Dongarra, J.J., Sorensen, D.C., Hammarling, S.J.: Block reduction of matrices to condensed forms for eigenvalue computations. J. Comput. Appl. Math. 27(1–2), 215–227 (1989). Special Issue on Parallel Algorithms for Numerical Linear Algebra

Fuller, S.H., Millett, I. (eds.): The Future of Computing Performance: Game Over or Next Level?. The National Academies Press, Washington (2011)

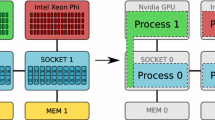

Haidar, A., Cao, C., Yarkhan, A., Luszczek, P., Tomov, S., Kabir, K., Dongarra, J.: Unified development for mixed multi-gpu and multi-coprocessor environments using a lightweight runtime environment. In: Proceedings of the 2014 IEEE 28th International Parallel and Distributed Processing Symposium, IPDPS 2014, pp. 491–500. IEEE Computer Society, Washington, DC (2014)

Haidar, A., Dong, T., Luszczek, P., Tomov, S., Dongarra, J.: Batched matrix computations on hardware accelerators based on GPUs. Int. J. High Perform. Comput. Appl. February 2015. doi:10.1177/1094342014567546

Haidar, A., Dongarra, J., Kabir, K., Gates, M., Luszczek, P., Tomov, S., Jia, Y.: Hpc programming on intel many-integrated-core hardware with magma port to xeon phi. Scientific Programming, 23, January 2015

Haidar, A., Luszczek, P., Tomov, S., Dongarra, J.: Towards batched linear solvers on accelerated hardware platforms. In: Proceedings of the 20th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP 2015. ACM, San Francisco, February 2015

Im, E.-J., Yelick, K., Vuduc, R.: Sparsity: Optimization framework for sparse matrix kernels. Int. J. High Perform. Comput. Appl. 18(1), 135–158 (2004)

Intel. Math kernel library. https://software.intel.com/en-us/en-us/intel-mkl/

John McCalpin. STREAM: Sustainable Memory Bandwidth in High Performance Computers. (http://www.cs.virginia.edu/stream/)

Messer, O.E.B., Harris, J.A., Parete-Koon, S., Chertkow, M.A.: Multicore and accelerator development for a leadership-class stellar astrophysics code. In: Manninen, P., Öster, P. (eds.) PARA. LNCS, vol. 7782, pp. 92–106. Springer, Heidelberg (2013)

Molero, J.M., Garzón, E.M., García, I., Quintana-Ortí, E.S., Plaza, A.: Poster: a batched Cholesky solver for local RX anomaly detection on GPUs, PUMPS (2013)

Nath, R., Tomov, S., Dong, T., Dongarra, J.: Optimizing symmetric dense matrix-vector multiplication on GPUs. In: Proceedings of International Conference for High Performance Computing. Networking, Storage and Analysis, Nov 2011

Tomov, S., Dongarra, J., Baboulin, M.: Towards dense linear algebra for hybrid gpu accelerated manycore systems. Parellel Comput. Syst. Appl. 36(5–6), 232–240 (2010)

Tomov, S., Nath, R., Ltaief, H., Dongarra, J.: Dense linear algebra solvers for multicore with GPU accelerators. In: Proceedings of the 2010 IEEE International Parallel & Distributed Processing Symposium, IPDPS 2010, pp. 1–8. IEEE Computer Society, Atlanta, 19–23 April 2010. http://dx.doi.org/10.1109/IPDPSW.2010.5470941. doi:10.1109/IPDPSW.2010.5470941

Tomov, S., Nath, R., Dongarra, J.: Accelerating the reduction to upper Hessenberg, tridiagonal, and bidiagonal forms through hybrid GPU-based computing. Parallel Comput. 36(12), 645–654 (2010)

Volkov, V., Demmel, J.: Benchmarking GPUs to tune dense linear algebra.In: Supercomputing 2008. IEEE (2008)

Yeralan, S.N., Davis, T.A., Ranka, S.: Sparse mulitfrontal QR on the GPU. Technical report, University of Florida Technical Report (2013)

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. ACI-1339822, the Department of Energy, and Intel. The results were obtained in part with the financial support of the Russian Scientific Fund, Agreement N14-11-00190.


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Kabir, K., Haidar, A., Tomov, S., Dongarra, J. (2015). On the Design, Development, and Analysis of Optimized Matrix-Vector Multiplication Routines for Coprocessors. In: Kunkel, J., Ludwig, T. (eds) High Performance Computing. ISC High Performance 2015. Lecture Notes in Computer Science, vol 9137. Springer, Cham. https://doi.org/10.1007/978-3-319-20119-1_5


DOI: https://doi.org/10.1007/978-3-319-20119-1_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20118-4

Online ISBN: 978-3-319-20119-1

eBook Packages: Computer Science (R0)