Abstract

Virtual aquariums have several advantages over real aquariums. First, they can display imaginary creatures and creatures that are difficult to keep in real aquariums. Second, they have soothing effects similar to those of an actual aquarium. We therefore developed a new virtual aquarium based on integral photography (IP), in which virtual fishes created with 3DCG animation are combined with real water. IP provides a stereoscopic view from all directions above the water tank without the need for special glasses. A fly’s eye lens is sunk in the water, which lengthens the focal length of the fly’s eye lens and increases the amount of popping out, so a stronger stereoscopic effect is obtained. Through three-dimensional computer graphics animation, the displayed fishes appear to be alive and swimming in the water. This system can be appreciated as an artwork, and in the future it could also be used to exhibit already-extinct ancient creatures in aquariums or museums.

1 Introduction

Many people keep fishes in their homes or offices because of their soothing effect. Recent progress in computer graphics technology has made it possible to create an aquarium using virtual reality. Virtual fishes have many advantages over real fishes. For instance, keeping virtual fishes removes limitations on the types of fish that can be kept in the tank: from fishes that live deep in the sea to fictional creatures, one’s imagination is the only limit. Fishes that have perished can also be restored and brought back to life.

Figure 1 shows the concept of our system. Creatures created through three-dimensional computer graphics (3DCG) animation appear to be alive and swimming at the surface and beneath the water.

2 Related Work

Our virtual aquarium shares some concepts with a virtual water tank in which a display is arranged on one side of the tank. In such cases, however, displaying the creature in water can be difficult.

AquaTop Display: A True Immersive Water Display System [1]: a display that projects two-dimensional (2D) images on the surface of the water clouded with bath salts. In contrast, our study enables the display of a stereoscopic image as if it actually exists in the water and not only on the surface.

Three-dimensional (3D) crystal engraving or Bubblegram [2]: 3D designs are generated inside a solid block of glass or transparent plastic by irradiating a laser beam inside the material. The objects appear to exist inside the block. However, once it is created, changing the shape or moving the object becomes impossible. In comparison, our system enables animating the 3DCG image displayed inside the water.

3 Integral Photography

We adopted integral photography (IP) in this system because, among the 3D display systems developed to date, IP comes closest to an ideal one. IP, which was invented by Lippmann in 1908 and has been improved continuously since, provides both horizontal and vertical parallax without the need to wear stereo glasses.

Currently popular autostereoscopic systems, such as the lenticular system and the parallax barrier system, provide parallax only in the horizontal direction. With IP, by contrast, viewers can see 3D objects from any position within a certain field of view. In this respect, IP has nearly the same advantages as holography. Moreover, IP is considerably more feasible than holography because it requires neither silver halide photography nor laser technology. Because it is composed of a normal flat panel display (FPD), such as a liquid crystal display or an organic light-emitting diode display, and a fly’s eye lens, as illustrated in Fig. 2, IP is also electronically rewritable. Color reproducibility depends on the FPD but is generally excellent.

Nevertheless, IP has not been widely used until now. One possible reason is the extremely high initial cost of producing a fly’s eye lens with a metal mold. Conventionally, the lens pitch of the fly’s eye lens had to be an integer multiple of the pixel pitch of the FPD, so the lens had to be custom-made to match the pixel pitch of each FPD, which can be very costly.

The invention of the extended fractional view (EFV) method [3–5], a new method of synthesizing an IP image, drastically changed this situation. In the EFV method, the ratio between the lens pitch and the pixel pitch may be an arbitrary real number and need not be an integer. The physical mismatch between the pitches is handled in software. Therefore, the high initial cost of customizing a fly’s eye lens is reduced, because a comparatively inexpensive ready-made fly’s eye lens can be used in combination with various FPDs.
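The idea of letting software absorb a non-integer pitch ratio can be illustrated with a minimal sketch. This is not the EFV implementation of [3–5]; it only shows, for a hypothetical 1D row of pixels, how each pixel can be assigned to a lens and to a fractional position under that lens when the lens pitch is not an integer multiple of the pixel pitch. The function names, the 264 ppi panel, and the nearest-view selection are assumptions made for illustration.

```python
import math


def pixel_to_lens(pixel_index, pixel_pitch_mm, lens_pitch_mm):
    """Return (lens_index, offset) for the centre of one pixel.

    offset is the position of the pixel centre under its lens,
    normalised to the range [0, 1). In an IP display this position
    determines the direction in which the pixel's light leaves the lens.
    """
    x = (pixel_index + 0.5) * pixel_pitch_mm      # pixel centre in mm
    lens_index = math.floor(x / lens_pitch_mm)    # which lens covers it
    offset = x / lens_pitch_mm - lens_index       # fractional position
    return lens_index, offset


def view_index(offset, num_views):
    """Map a normalised offset to one of num_views rendered views."""
    return min(int(offset * num_views), num_views - 1)


# Hypothetical numbers: a 264 ppi panel under a 1 mm pitch lens gives
# roughly 10.4 pixels per lens, which is not an integer.
PIXEL_PITCH_MM = 25.4 / 264.0   # assumed panel resolution
LENS_PITCH_MM = 1.0
NUM_VIEWS = 32                  # views per axis, assumed for illustration

for i in range(0, 24, 4):
    lens, off = pixel_to_lens(i, PIXEL_PITCH_MM, LENS_PITCH_MM)
    print(i, lens, round(off, 3), view_index(off, NUM_VIEWS))
```

Because the offset is computed per pixel rather than assumed to repeat every fixed number of pixels, the same code works for any combination of panel and ready-made lens, which is the property the text attributes to the EFV method.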

IP is also effective in expressing the glittering effect of materials such as gems, because the light emitted from each convex lens of a fly’s eye lens varies with the direction of emission [6–8].

4 System Configuration

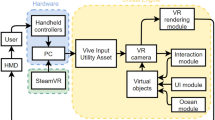

In this work, IP was introduced so that the creatures can be seen in the water. The IP display was laid flat so that it can be observed from above. Figure 3 shows the composition of our system, which consists of a tablet PC with a retina display, a plastic box filled with water, and a fly’s eye lens sunk in the water. In this system, the use of water serves two purposes.

First, water is essential for imaginary underwater life. This system is a type of mixed reality in which real water and imaginary creatures exist in the same space. In common mixed reality systems, objects created with CG are combined with real space by using a see-through head-mounted display (HMD). In this system, however, IP is used instead of an HMD.

The sense of reality is heightened because light is refracted at the surface of the water, as shown in Fig. 3.

Second, the focal length of each convex lens of the fly’s eye lens becomes longer when the lens is immersed in water [5]. The refractive index of the fly’s eye lens is 1.5, and its focal length becomes about three times the original after being sunk in water. As a result, the amount of popping out of underwater objects is roughly tripled, and a stronger stereoscopic effect is obtained (Fig. 4).
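The factor of about three can be checked with a short calculation. The sketch below assumes a simple model that the text does not spell out: each lenslet is treated as a single spherical refracting surface whose focus lies inside the lens material, and water is assigned a refractive index of 1.333. Only the lens index of 1.5 comes from the text; the model, the water index, and the function name are assumptions.

```python
def single_surface_focal_length(radius, n_lens, n_medium):
    """Focal length, measured inside the lens material, of one spherical
    refracting surface that separates the surrounding medium from the lens.
    """
    return n_lens * radius / (n_lens - n_medium)


N_LENS = 1.5     # stated in the text
N_AIR = 1.0
N_WATER = 1.333  # assumed; not given in the text

# The radius cancels in the ratio, so any positive value works here.
f_air = single_surface_focal_length(1.0, N_LENS, N_AIR)
f_water = single_surface_focal_length(1.0, N_LENS, N_WATER)
ratio = f_water / f_air

print(f"focal length in water / in air = {ratio:.2f}")
```

Under these assumptions the ratio reduces to (n_lens - 1) / (n_lens - n_water), which is close to 3 and therefore consistent with the figure quoted above. A thin lens with water on both sides would give a somewhat larger factor, so the result depends on the lens model chosen.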

5 Creating a 3DCG Scene

The 3DCG scene consists of two swimming creature models and water surface textures. The scene is created in Autodesk Maya.

5.1 3DCG Models

The imaginary fishes were modeled as shown in Fig. 5. The surface material was given some transparency and colored light blue. Bead-like materials of various colors were added to the surface to give the impression of a constellation, similar to the modeling for live fish. The beads were added to lend a sense of mystery and to distinguish the creatures from real fishes. Incandescence was also applied to the surface so that it emits a faint light, which enhances the sense of transparency and presence.

Next, bones were set in the model fishes as shown in Fig. 6. One long backbone was applied to each model from the head to the tail. The backbone was built from several small bones, which divide it into sections. Because the backbone is divided into several sections, the fishes can bend from left to right, which ensures smooth movement.

Lights were also set in the scene. The final appearance of the rendered fish is shown in Fig. 7.

In this scene, two fish models were created and made to appear to exist at different depths. A contrast in positions is necessary to evoke the sense of depth using IP.

5.2 Background

Two planar 2D images depicting the surface of the water were placed in the scene as shown in Fig. 8. One image was placed in front of the fishes and the other behind them. The back image represents light reflected on the surface of the water, whereas the front image represents wavering light reflected on the bottom. Adding these two image layers around the fishes emphasizes the sense of depth in the scene.

The design of the surface of the water was prepared as a single 2D image plane in Adobe Photoshop, as shown in Fig. 9. The design was based on white light reflected on real water. However, the image can blur when the synthesized IP image is viewed through the fly’s eye lens (1 mm pitch), so an image with weak color contrast becomes difficult to see clearly. Hence, the contrast between blue and white was strengthened so that the white ripple marks stand out. The scene rendered from the camera’s viewpoint is shown in Fig. 10.

6 Animation

Animation is important in this work because movement makes the fishes seem alive. The movement of the creatures was designed based on real fishes. Swimming fishes repeat similar movements, so their motion has a certain periodicity, much as humans walk by repeating the same movement over and over. Focusing on this point, we created one cycle of the movement and then repeated it. This repetition reduced the time needed for rendering.

The animation should not be too fast, because it takes time to integrate the views from the right and left eyes and to perceive a stereoscopic view. When a movement is too fast, the perceived amount of popping out may decrease.

In this work, one fish was placed in the front and the other at the back. A repeating animation cycle was created in which the two fishes swim by swinging their bodies from left to right, and the period of one cycle was set to eight frames. The two fishes swing their bodies in opposite directions. The eight frames of the movement are shown in Fig. 11. After the eighth frame is played, the loop begins again from the first frame, and the cycle repeats continuously. These frames are displayed as a GIF animation.
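The timing of this loop can be sketched as follows. The eight-frame period and the opposite swing directions come from the text; the sinusoidal motion curve, the 20 degree amplitude, and the function names are assumptions made for illustration, since the actual motion curves are not specified.

```python
import math

FRAMES_PER_CYCLE = 8  # period of one swimming cycle, stated in the text


def bend_angle(frame, amplitude_deg=20.0, phase=0.0):
    """Body bend angle in degrees for a frame of the looping cycle.

    The frame number wraps around, so frame 8 equals frame 0.
    """
    t = (frame % FRAMES_PER_CYCLE) / FRAMES_PER_CYCLE
    return amplitude_deg * math.sin(2.0 * math.pi * t + phase)


def frame_pair(frame):
    """Bend angles of the front and back fish, half a cycle apart."""
    front = bend_angle(frame)
    back = bend_angle(frame, phase=math.pi)  # opposite direction
    return front, back


for frame in range(FRAMES_PER_CYCLE):
    front, back = frame_pair(frame)
    print(frame, round(front, 1), round(back, 1))
```

Shifting the second fish by half a cycle keeps the two bodies bending in opposite directions on every frame while both share the same eight-frame loop.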

7 Synthesis of IP Image

An IP image must be generated for each frame of the animation. Figure 12 shows all the motifs of each frame, which were rendered from 32 × 32 camera positions by using our MEL script. An IP image was synthesized from the 1,024 rendered images by using our technology, called the extended fractional view method [3–5].
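The arrangement of viewpoints can be sketched in a few lines. The 32 × 32 grid and the resulting 1,024 images come from the text; the square, evenly spaced layout centred on the origin, the unit spacing, and the function name are assumptions. This is not the MEL script mentioned above.

```python
def camera_grid(n=32, spacing=1.0):
    """Return n * n camera offsets (x, y), centred on the origin.

    Offsets are listed row by row, from the lowest y to the highest.
    """
    half = (n - 1) / 2.0
    return [((col - half) * spacing, (row - half) * spacing)
            for row in range(n)
            for col in range(n)]


positions = camera_grid()
print(len(positions))  # 1024
```

Each offset would correspond to one rendered image per animation frame, so an eight-frame loop needs 8 × 1,024 renders before the IP images are synthesized.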

Figure 13 shows an IP image of one of the eight frames. The bottom right image is a magnified view of part of the upper left image. We created a GIF animation by connecting the synthesized IP images.

Each IP image of the GIF animation is then shown on the retina display of the iPad and observed through the fly’s eye lens, which was placed at the bottom of the plastic tank filled with water. Figure 14 shows the dimensions of the retina display of the iPad and the fly’s eye lens. Users can view auto-stereoscopic creatures with horizontal and vertical parallax from anywhere above the water tank. The resolution of the displayed 3D objects is sufficiently high because of the iPad retina display and the extended focal length of the fly’s eye lens placed in the water. The amount of pop-out from the bottom of the water is also sufficiently large.

8 Conclusion

Figure 15 shows the new virtual aquarium, which displays imaginary creatures created in 3DCG as if they were swimming under water. A strong stereoscopic effect was obtained through the contrast effect, in which the two fishes and the surface of the water were placed at different depths. Animation was added to give the impression that the fishes are swimming in the water. This system can be appreciated as an artwork because of its beauty, which is especially apparent when viewed in a semi-dark room. The soothing effects of a real aquarium can be replicated by placing this system in homes, offices, or public spaces. This system could also be used to display long-extinct ancient creatures in aquariums or museums in the future.

References

1. Matoba, Y., Takahashi, Y., Tokui, T., Phuong, S., Yamano, S., Koike, H.: AquaTop Display: a True Immersive Water Display System. In: ACM SIGGRAPH 2013 Emerging Technologies (2013)

2. Wikipedia. http://en.wikipedia.org/wiki/Bubblegram

3. Yanaka, K.: Integral photography suitable for small-lot production using mutually perpendicular lenticular sheets and fractional view. In: Proceedings of SPIE 6490 Stereoscopic Displays and Applications XIV, vol. 649016, pp. 1–8 (2007)

4. Yanaka, K.: Integral photography using hexagonal fly’s eye lens and fractional view. In: Proceedings of SPIE 6803 Stereoscopic Displays and Applications XIX, 68031K, pp. 1–8 (2008)

5. Yoda, M., Momose, A., Yanaka, K.: Moving integral photography using a common digital photo frame and fly’s eye lens. In: SIGGRAPH ASIA Posters (2009)

6. Maki, N., Yanaka, K.: Underwater integral photography. In: IEEE VR 2015 Demo (2015)

7. Maki, N., Yanaka, K.: 3D CG integral photography artwork using glittering effects in the post-processing of Multi-viewpoint Rendered Images. In: HCI International (2014)

8. Maki, N., Shirai, A., Yanaka, K.: 3DCG Art expression on a tablet device using integral photography. In: Laval Virtual 2014 VRIC (2014)

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Maki, N., Yanaka, K. (2015). Virtual Aquarium: Mixed Reality Consisting of 3DCG Animation and Underwater Integral Photography. In: Yamamoto, S. (eds) Human Interface and the Management of Information. Information and Knowledge in Context. HIMI 2015. Lecture Notes in Computer Science(), vol 9173. Springer, Cham. https://doi.org/10.1007/978-3-319-20618-9_45

DOI: https://doi.org/10.1007/978-3-319-20618-9_45

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20617-2

Online ISBN: 978-3-319-20618-9

eBook Packages: Computer Science (R0)