Abstract

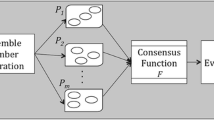

Ensemble-based learning is a promising approach to robust partitioning. It makes the classification or clustering task more reliable because the classifiers or clusterers in the ensemble compensate for each other's faults. The common policy in clustering ensemble learning is to generate a set of primary partitionings that differ from each other, for example by running a clustering algorithm with different initializations. It is common to filter these primary partitionings, i.e. to select a subset of them for the final ensemble, so that the ensemble is diverse. A consensus function then aggregates the ensemble into a final partitioning, also called the consensus partitioning. An alternative policy is to fuse primary partitionings that come from naturally different sources. Swarm intelligence, in turn, is a relatively new topic in which simple agents cooperate in such a way that complex behavior emerges. Because an ensemble vitally needs diversity and swarm intelligence algorithms are inherently random, the required diversity can be obtained from that randomness. In this paper we introduce a new clustering ensemble learning method based on the ant colony clustering algorithm. Ant colony algorithms are powerful metaheuristics that use the concept of swarm intelligence. Different runs of ant colony clustering on a dataset yield a number of diverse partitionings. Treating these results together as a new feature space for the dataset, we apply a simple partitioning algorithm to aggregate them into a consensus partitioning. From another perspective, the effectiveness of ant colony clustering methods is questionable because they depend on many parameters. On a test dataset these parameters can be tuned to obtain a desirable result, but how to set them in a real task is not clear. The proposed clustering framework lets the parameters vary freely and compensates for their non-optimality through the power of the ensemble. Experimental results on several real-world datasets demonstrate the effectiveness of the proposed method in generating the final partitioning.
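The aggregation idea in the abstract (several diverse partitionings, treated together as a new feature space, then clustered once more by a simple partitioning algorithm) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the abstract gives no algorithmic detail, so a randomly initialized k-means with a randomly chosen number of clusters stands in for ant colony clustering here, and the one-hot label encoding, the function names, and the parameter ranges are all assumptions made for illustration.

```python
import random

def kmeans(points, k, rng, iters=20):
    """Plain Lloyd's k-means; returns one cluster label per point."""
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by squared Euclidean distance.
        labels = [
            min(range(k),
                key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centers[c])))
            for p in points
        ]
        # Update step: move each non-empty center to the mean of its members.
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

def one_hot_space(partitionings, ks):
    """Concatenate one-hot label encodings; one row per data point."""
    rows = [[] for _ in range(len(partitionings[0]))]
    for labels, k in zip(partitionings, ks):
        for i, l in enumerate(labels):
            rows[i].extend(1.0 if l == c else 0.0 for c in range(k))
    return rows

def consensus_clustering(points, n_runs, final_k, seed=0):
    """Hypothetical helper: build an ensemble, then cluster the label space."""
    rng = random.Random(seed)
    # Stand-in for diverse ant colony runs (assumption): the base clusterer's
    # parameter k is left free and drawn at random for every run.
    ks = [rng.randint(2, 4) for _ in range(n_runs)]
    partitionings = [kmeans(points, k, rng) for k in ks]
    # The primary partitionings together form a new space of the dataset.
    space = one_hot_space(partitionings, ks)
    # A simple partitioning algorithm aggregates them into a consensus.
    return kmeans(space, final_k, rng), space

data = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (5.0, 5.1), (5.2, 4.9), (4.9, 5.3)]
labels, space = consensus_clustering(data, n_runs=10, final_k=2)
print(labels)
```

Leaving the base clusterer's parameter untuned in each run mirrors the abstract's point that the ensemble, rather than careful parameter tuning, is expected to supply the robustness.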

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Abbasi, S., Manteghi, S., Heidarzadegan, A., Nemati, Y., Parvin, H. (2015). A Robust Clustering via Swarm Intelligence. In: Gervasi, O., et al. Computational Science and Its Applications -- ICCSA 2015. ICCSA 2015. Lecture Notes in Computer Science, vol 9156. Springer, Cham. https://doi.org/10.1007/978-3-319-21407-8_5

DOI: https://doi.org/10.1007/978-3-319-21407-8_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21406-1

Online ISBN: 978-3-319-21407-8

eBook Packages: Computer Science, Computer Science (R0)