Abstract

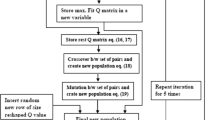

When applying reinforcement learning to continuous-space problems, discretizing or redefining the learning space is a promising approach, and several methods and algorithms have been introduced to let learning agents do so. In our previous study, we introduced an FCCM clustering technique into Q-learning (called QL-FCCM) and applied it to transfer learning in Markov processes. Because that method could not cope with complicated environments such as non-Markov processes, in this study we propose a method in which an agent updates its Q-table while changing the trade-off ratio between Q-learning and QL-FCCM based on the damping ratio. We conducted numerical experiments on the single pendulum standing problem, and our model resulted in a smooth learning process.
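The abstract does not give the update rule itself, so the following is only a minimal sketch of one way a trade-off between two value sources could be wired into a tabular update. It assumes the trade-off is a convex combination of the standard Q-learning target and a value read from a prior (for example, transferred or cluster-based) table, and that the "damping ratio" acts as a geometric decay factor on the weight of that prior. The names `blended_q_update`, `decayed_weight`, `q_prior`, and `w` are hypothetical and do not come from the paper.

```python
def blended_q_update(q, q_prior, s, a, r, s_next, w, alpha=0.1, gamma=0.95):
    """One tabular update whose target mixes in a prior table.

    q and q_prior are lists of lists indexed as table[state][action].
    w is the weight on the prior estimate (assumption: 0 <= w <= 1);
    w = 0 reduces to plain Q-learning.
    """
    # Standard Q-learning target: reward plus discounted best next value.
    td_target = r + gamma * max(q[s_next])
    # Convex blend of the Q-learning target and the prior table's value.
    target = (1.0 - w) * td_target + w * q_prior[s][a]
    # Move the current estimate toward the blended target.
    q[s][a] += alpha * (target - q[s][a])
    return q


def decayed_weight(w0, damping, episode):
    """Weight on the prior after `episode` episodes: w0 * damping**episode."""
    return w0 * damping ** episode


# Usage: 3 states, 2 actions, prior table filled with ones.
q = [[0.0, 0.0] for _ in range(3)]
q_prior = [[1.0, 1.0] for _ in range(3)]
w = decayed_weight(1.0, 0.5, 1)  # weight on the prior after one episode
blended_q_update(q, q_prior, s=0, a=1, r=1.0, s_next=2, w=w, alpha=0.1, gamma=0.9)
```

With a damping factor below 1, the weight on the prior shrinks every episode, so under these assumptions the agent leans on the prior table early and on its own Q-learning estimates later.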

References

Sutton, R.S., Barto, A.G.: Reinforcement Learning: An Introduction. The MIT Press (1998)

Notsu, A., Honda, H., Ichihashi, H., Wada, H.: Contraction algorithm in state and action space for Q-learning. In: 10th International Symposium on Advanced Intelligent Systems, pp. 93–96 (2009)

Komori, Y., Notsu, A., Honda, K., Ichihashi, H.: Determination of the change timing of space segmentation using PCA for reinforcement learning. In: The 6th International Conference on Soft Computing and Intelligent Systems and the 13th International Symposium on Advanced Intelligent Systems, pp. 2287–2290 (2012)

Kosko, B.: Neural Networks and Fuzzy Systems: A Dynamical Systems Approach to Machine Intelligence. Prentice Hall, Englewood Cliffs (1992)

Hammell, R.J., Sudkamp, T.: Learning Fuzzy Rules from Data. http://ftp.rta.nato.int/public/pubfulltext/rto/mp/rto-mp-003/mp-003-08.pdf

Komori, Y., Notsu, A., Honda, K., Ichihashi, H.: Automatic Adaptive Space Segmentation for Reinforcement Learning. International Journal of Fuzzy Logic and Intelligent Systems 12(1), 36–41 (2012)

Notsu, A., Honda, K., Ichihashi, H., Komori, Y.: Simple reinforcement learning for small-memory agent. In: 10th International Conference on Machine Learning and Applications, vol. 1, pp. 458–461 (2011)

Ueno, T., Notsu, A., Honda, K.: Application of FCM-type co-clustering to an agent in reinforcement learning. In: 1st IIAI International Conference on Advanced Information Technologies, vol. 12, pp. 1–5 (2013)

Bezdek, J.C.: Pattern Recognition with Fuzzy Objective Function Algorithms. Plenum Press (1981)

Oh, C.H., Honda, K., Ichihashi, H.: Fuzzy clustering for categorical multivariate data. In: Joint 9th IFSA World Congress and 20th NAFIPS International Conference, pp. 2154–2159 (2001)

Tsuda, K., Minoh, M., Ikeda, K.: Extracting straight lines by sequential fuzzy clustering. Pattern Recognition Letters 17, 643–649 (1996)

Matsumoto, Y., Honda, K., Notsu, A., Ichihashi, H.: Exclusive Partition in FCM-type Co-clustering and Its Application to Collaborative Filtering. International Journal of Computer Science and Network Security 12(12), 52–58 (2012)

Honda, K., Notsu, A., Ichihashi, H.: Collaborative Filtering by Sequential User-Item Co-cluster Extraction from Rectangular Relational Data. International Journal of Knowledge Engineering and Soft Data Paradigms (IJKESDP) 2(4), 312–327 (2010)

Hathaway, R.J., Davenport, J.W., Bezdek, J.C.: Relational duals of the \(c\)-means clustering algorithms. Pattern Recognition 22(2), 205–212 (1989)

Watkins, C., Dayan, P.: Technical note: Q-learning. Machine Learning 8(3), 279–292 (1992)

Rummery, G.A., Niranjan, M.: On-line Q-learning using connectionist systems. Technical Report CUED/F-INFENG/TR 166, Engineering Department, Cambridge University (1994)

Jaakkola, T., Singh, S.P., Jordan, M.I.: Reinforcement Learning Algorithm for Partially Observable Markov Decision Process. Advances in Neural Information Processing Systems 7, 345–352 (1994)

Miyamoto, S., Ichihashi, H., Honda, K.: Algorithms for fuzzy clustering. Springer (2008)

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Notsu, A., Ueno, T., Hattori, Y., Ubukata, S., Honda, K. (2015). FCM-Type Co-clustering Transfer Reinforcement Learning for Non-Markov Processes. In: Huynh, VN., Inuiguchi, M., Denoeux, T. (eds) Integrated Uncertainty in Knowledge Modelling and Decision Making. IUKM 2015. Lecture Notes in Computer Science, vol 9376. Springer, Cham. https://doi.org/10.1007/978-3-319-25135-6_21

DOI: https://doi.org/10.1007/978-3-319-25135-6_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-25134-9

Online ISBN: 978-3-319-25135-6

eBook Packages: Computer Science, Computer Science (R0)