Abstract

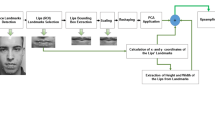

This paper demonstrates a state-of-the-art ‘whole-word-state Dynamic Bayesian Network (DBN)’ model of audio and visual integration. Many DBN models have been proposed for speech recognition in recent years because of their strong descriptive power and flexible structure. A DBN is a statistical model that can represent multiple collections of random variables as they evolve over time. However, a DBN model with a whole-word-state structure does not allow speech to be segmented into subunits. In this study, a single-stream DBN (SDBN) model is proposed, and speech recognition and segmentation experiments are carried out on audio and visual speech separately. To evaluate the performance of the proposed model, the timing boundaries of the segmented syllables are compared with those obtained from well-trained tri-phone Hidden Markov Models (HMMs). Besides the word recognition results, syllable recognition rates and segmentation outputs are also obtained from the audio and visual speech feature streams. Experimental results show that integrating the SDBN model with the perceptual linear prediction (PLP) feature stream produces a higher word recognition rate (98.50 %) than the tri-phone HMM model in a clean environment. Moreover, as the noise in the audio stream increases, the SDBN model shows more robust and promising results.
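The abstract does not spell out the SDBN's variables or equations, so the following is only a minimal sketch, under stated assumptions, of how syllable boundaries can be read off a word model and then scored against reference boundaries. It assumes a single stream, a whole-word model whose states are grouped into syllables and traversed strictly left to right (a DBN with a deterministic state-position variable unrolls to such a chain), per-frame log-likelihoods that are already computed, and no transition probabilities. The function names `align_syllables` and `boundary_agreement`, the 10 ms frame shift, the 20 ms tolerance, and the reference boundaries standing in for tri-phone HMM alignments are all hypothetical.

```python
import numpy as np

def align_syllables(log_lik, n_states_per_syl):
    """Hypothetical helper: Viterbi forced alignment of T frames to a
    left-to-right chain of states grouped into syllables.

    log_lik: (T, S) array of per-frame log-likelihoods log p(o_t | state s),
             with S = sum(n_states_per_syl) states ordered syllable by syllable.
    Transition probabilities are ignored (assumption of this sketch).
    Returns the start frame of each syllable.
    """
    log_lik = np.asarray(log_lik, dtype=float)
    T, S = log_lik.shape
    if S != sum(n_states_per_syl) or T < S:
        raise ValueError("need one column per state and at least one frame per state")
    idx = np.arange(S)
    delta = np.full((T, S), -np.inf)    # best path score ending in state s at frame t
    back = np.zeros((T, S), dtype=int)  # predecessor state on that best path
    delta[0, 0] = log_lik[0, 0]         # the path must start in the first state
    for t in range(1, T):
        stay = delta[t - 1]
        move = np.concatenate(([-np.inf], delta[t - 1, :-1]))  # advance by one state
        take_move = move > stay
        delta[t] = np.where(take_move, move, stay) + log_lik[t]
        back[t] = np.where(take_move, idx - 1, idx)
    # The path must end in the last state; trace it back frame by frame.
    path = [S - 1]
    for t in range(T - 1, 0, -1):
        path.append(back[t, path[-1]])
    path.reverse()
    # Map each state to its syllable and record where the syllable index changes.
    syl_of_state = np.repeat(np.arange(len(n_states_per_syl)), n_states_per_syl)
    syl_path = syl_of_state[path]
    return [0] + [t for t in range(1, T) if syl_path[t] != syl_path[t - 1]]

def boundary_agreement(hyp_starts, ref_starts, frame_shift_ms=10.0, tol_ms=20.0):
    """Hypothetical metric: mean absolute boundary deviation (ms) and the
    fraction of boundaries that fall within tol_ms of the reference."""
    dev = np.abs(np.asarray(hyp_starts) - np.asarray(ref_starts)) * frame_shift_ms
    return float(dev.mean()), float(np.mean(dev <= tol_ms))

# Toy three-syllable word: frames 0-3, 4-9 and 10-14 favour syllables 0, 1 and 2.
syl_ll = np.full((15, 3), -5.0)
syl_ll[0:4, 0] = 0.0
syl_ll[4:10, 1] = 0.0
syl_ll[10:15, 2] = 0.0
states = [2, 2, 2]                               # two states per syllable (assumption)
log_lik = np.repeat(syl_ll, states, axis=1)      # states of a syllable share its scores
hyp = align_syllables(log_lik, states)           # [0, 4, 10]
ref = [0, 5, 10]                                 # hypothetical HMM reference boundaries
mean_dev, frac = boundary_agreement(hyp, ref)    # mean_dev ≈ 3.33 ms, frac = 1.0
print(hyp, round(mean_dev, 2), frac)
```

Because each frame either stays in its state or advances by exactly one, every syllable is visited in order and the decoded state path directly yields a syllable segmentation; a full model would also weigh transition probabilities, which this sketch leaves out.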

Acknowledgements

Due acknowledgement is accorded to the Research Management Centre (RMC), Universiti Teknologi MARA, for the funding received through grant RAGS/1/2014/ICT07/UiTM//3.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Seman, N., Roslan, R., Jamil, N., Ardi, N. (2016). Bimodality Streams Integration for Audio-Visual Speech Recognition Systems. In: Abraham, A., Han, S., Al-Sharhan, S., Liu, H. (eds) Hybrid Intelligent Systems. HIS 2016. Advances in Intelligent Systems and Computing, vol 420. Springer, Cham. https://doi.org/10.1007/978-3-319-27221-4_11

DOI: https://doi.org/10.1007/978-3-319-27221-4_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27220-7

Online ISBN: 978-3-319-27221-4

eBook Packages: Engineering, Engineering (R0)