Abstract

Collaborative editing cloud servers allow a group of online users to concurrently edit a document. Every user achieves consistent views of the document by applying others’ modifications, which are pushed by the cloud servers. The cloud servers repeatedly transform, order, and broadcast modifications, and merge them into a joint version in real time (typically, in less than one second). However, in existing solutions such as Google Docs and Cloud9, the servers employ operational transformation to resolve edit conflicts and achieve consistent views for each online user, so all inputs (and the document) are processed as plaintext by the cloud servers. In this paper, we propose LightCore, a collaborative editing cloud service that protects sensitive data against honest-but-curious cloud servers. A LightCore client applies stream cipher algorithms to encrypt the input characters that compose the text of the document before the user sends them to the servers, while the keys are shared by all authorized users and unknown to the servers. The byte-by-byte encryption feature of stream ciphers enables the servers to perform all heavy processing and provide collaborative editing cloud services just as existing solutions do without protection against curious servers. Therefore, the load on clients remains lightweight while the users’ sensitive data are protected. We implement LightCore with support for two different methods of generating keystreams, i.e., the “pure” stream cipher and the CTR mode of block cipher. Note that a document is usually modified by collaborating users many times, and the sequence of text segments is not input and encrypted in chronological order. So, unlike the stateless CTR mode of block cipher, the overall performance of a high-speed but stateful stream cipher varies significantly with different key update rules and use scenarios.
The analysis and evaluation results on the prototype system show that LightCore provides secure collaborative editing services for resource-limited clients. Finally, we suggest suitable keystream policies for different use scenarios according to these results.

Keywords

- Collaborative editing cloud service

- Operational transformation

- Stream cipher

- Key management

- Block cipher mode of operation

1 Introduction

With the progress of cloud computing, collaborative editing services (e.g., Google Docs, Office Online and Cloud9) have become a popular and convenient choice for online users. With such a service, a group of users can cooperatively edit documents over networks; in particular, they can concurrently modify the same document, and even write on the same line. Meanwhile, the collaborative editing cloud service provides consistent views to all clients in a timely manner; for example, if two independent users concurrently insert one character each into the same line, which is displayed identically on their own screens, all users will immediately see both characters appear in the expected positions.

The servers of collaborative editing cloud services carry out heavy processing to coordinate all online users’ operations. Firstly, the cloud servers are responsible for receiving operation inputs from clients, transforming operations by operational transformation (OT) [1] to resolve conflicts, modifying the stored documents into a joint version based on these transformed operations, and then broadcasting modifications to all online clients. To transform operations, the server revises the position of a modification based on all the other concurrent operations. For example, when Alice and Bob respectively insert ‘a’ and ‘b’ in the \(i^{th}\) and \(j^{th}\) positions, Bob’s operation is transformed to be executed in the \((j+1)^{th}\) position if Alice’s operation is executed first and \(i < j\). Secondly, during the editing phase, the above steps are repeated continuously in real time, to enable instant reading and writing on clients. Finally, the servers have to maintain a history of joint versions, because users’ operations may be made on different versions due to unpredictable network delays. In short, this centralized architecture takes full advantage of the cloud servers’ powerful computing, elasticity and scalability, and brings convenience to resource-limited clients.

In order to enable the cloud servers to coordinate the operations and resolve possible conflicts by OT, existing online collaborative editing systems process only plaintext (or unencrypted) inputs. Therefore, the cloud service provider is always able to read all clients’ documents. This unfriendly feature might disclose users’ sensitive data, for example, to a curious internal operator in the cloud system. Although the SSL/TLS protocols are adopted to protect data in transit against external attackers on the network, the input data are always decrypted before being processed by the cloud servers.

In this paper, we propose LightCore, a collaborative editing cloud service for sensitive data. In LightCore, before being sent to the cloud, all input characters are encrypted by a stream cipher algorithm, which encrypts the plaintext byte by byte. These characters compose the content of the document. The texts are always transmitted, processed and stored in ciphertext. The cryptographic keys are shared by authorized users, and the encryption algorithms are assumed to be secure. The other operation parameters except the input texts are still sent and processed as plaintext, so the cloud servers can employ OT to coordinate all operations into a joint version but not understand the document.

LightCore assumes honest-but-curious cloud servers. On one hand, the honest cloud servers always follow their specification to execute the requested operations; on the other hand, a curious server tries to read or infer the sensitive texts in the users’ documents. Note that the honesty feature is assumed to ensure service availability and data integrity; but not for the confidentiality of sensitive data. A malicious cloud server that arbitrarily deviates from its protocol, might break service availability or data integrity, but could not harm confidentiality, because the keys are held by clients only and every input character never appears as plaintext outside the clients.

By adopting stream cipher algorithms, LightCore keeps the lightweight load of clients, and takes advantage of the powerful resources of cloud servers as the existing collaborative editing cloud solutions. Because the stream cipher algorithm encrypts only the text byte by byte and the length of each input text is unchanged after being encrypted, the servers can conduct OT and other processing without understanding the ciphertext. On the contrary, the block cipher algorithms encrypt texts block by block (typically, 128 bits or 16 bytes), so the OT processing in ciphertext by servers is extremely difficult because users modify the text (i.e., insert or delete) in characters. That is, each character would have to be encrypted into one block with padding, to support the user operations in characters, which leads to an enormous waste in storage and transmission; otherwise, the workload of resolving edit conflicts would be transferred to the clients, which is unsuitable for resource-limited devices.

In fact, the “byte-by-byte” encryption feature can be implemented by stream cipher, or the CTR mode of block cipher (see footnote 1). In LightCore (and other collaborative editing systems), the text of a document is composed of a sequence of text segments with unfixed lengths. Because the document is a result of collaborative editing by several users, these text segments are not input and encrypted in chronological order, e.g., the sequence of {‘Collaborative’, ‘Editing’, ‘Cloud’} is the result of {‘Collaborative Document Cloud’} after deleting ‘Document’ and then inserting ‘Editing’ by different users. Each text segment is associated with an attribute (see footnote 2) called keystream_info, containing the parameters to decrypt it. For the CTR mode of block cipher, keystream_info contains a key identifier, a random string nonceIV, an initial counter and an offset in a block; for stream cipher, it contains a key identifier and an initial position offset of the keystream. Note that all users share a static master key, and each data key to initialize cipher is derived from the master key and the key identifier.

The efficiency of LightCore varies as the keystream policy changes, that is, (a) different methods are used to generate keystreams, and (b) different key update rules of stream cipher are used in certain use scenarios (if stream cipher is used). In general, stream cipher has higher encryption speed and smaller delay than block cipher [2], but with a relatively heavy initialization phase before generating keystreams. Moreover, different from the stateless CTR mode, stream cipher is stateful: given a key, to generate the \(j^{th}\) byte of keystream, all \(k^{th}\) bytes \((k < j)\) must be generated first. Therefore, insertion operations in random positions (e.g., an insertion in Line 1 after another in Line 2) require the decrypters to cache bytes of keystream to use later; and deletion operations cause the decrypters to generate lots of obsoleted bytes of keystream. This performance degradation is mitigated by updating the data keys of stream cipher in LightCore: the user (or encrypter) generates a new data key, re-initializes the encryptor and then generates keystreams by bytes to encrypt texts. The key update rules are designed by balancing (a) the cost of initialization and keystream generation, and (b) the distribution and order of the deletion and insertion operations.

We implement LightCore based on Etherpad, an open-source collaborative editing cloud system. The LightCore prototype supports the RC4 stream cipher algorithm and the AES CTR mode. Two principles of stream cipher key update rules are adopted, that is, a user (or encrypter) updates the key of stream cipher, if (a) the generated bytes of the keystream come to a predetermined length, or (b) the user moves to another position previous to the line of the current cursor to insert texts. Then, the evaluation and analysis on the prototype suggest the suitable keystream policy with detailed parameters for different typical use scenarios.
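The two key update principles above can be illustrated with a minimal sketch. The class name `KeyUpdatePolicy`, its method, the 8-byte identifier length and the use of line numbers to detect a backward move are all assumptions made for illustration; they are not taken from the LightCore prototype.

```python
import os


class KeyUpdatePolicy:
    """Decides when an encrypter switches to a fresh stream cipher data key."""

    def __init__(self, max_keystream_bytes: int):
        self.max_keystream_bytes = max_keystream_bytes  # threshold for rule (a)
        self.key_id = os.urandom(8)   # random key identifier (length is an assumption)
        self.used = 0                 # keystream bytes consumed under key_id
        self.last_line = None         # line of the previous insertion

    def next_keystream_info(self, line: int, nbytes: int):
        """Return (key_id, offset) for inserting nbytes of text on `line`."""
        moved_back = self.last_line is not None and line < self.last_line  # rule (b)
        exhausted = self.used + nbytes > self.max_keystream_bytes          # rule (a)
        if moved_back or exhausted:
            self.key_id, self.used = os.urandom(8), 0  # new key, keystream restarts
        offset = self.used
        self.used += nbytes
        self.last_line = line
        return self.key_id, offset


policy = KeyUpdatePolicy(max_keystream_bytes=10)
first = policy.next_keystream_info(line=5, nbytes=4)    # fresh key, offset 0
second = policy.next_keystream_info(line=5, nbytes=4)   # same key, offset 4
third = policy.next_keystream_info(line=5, nbytes=4)    # would exceed 10 bytes: new key
fourth = policy.next_keystream_info(line=2, nbytes=1)   # moved to an earlier line: new key
```

A smaller threshold means more initializations but fewer obsoleted keystream bytes for decrypters, which is the trade-off that the evaluation is meant to quantify.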

LightCore provides collaborative editing cloud services for online users, with the following properties:

- Reasonable confidentiality against honest-but-curious cloud servers. All input characters are encrypted at the client side before being sent to the cloud servers, whether these texts are finally kept in the document or deleted. The content of the document is kept secret from the servers, but the format information such as length, paragraph, font and color is known, which enables the servers to coordinate users’ operations.

- Lightweight workload on clients. The cloud servers of LightCore are responsible for receiving users’ edit inputs, resolving edit conflicts, maintaining the documents, and distributing the freshest versions to online clients. Compared with users of existing collaborative editing solutions, a LightCore user only needs to additionally generate keystreams to protect input texts as an encrypter and to decrypt texts from the servers as a decrypter.

- Real-time and full functionality. The byte-by-byte lightweight encryption is fully compatible with unencrypted real-time collaborative editing services; no editing function is impeded or disabled. Even for a new user who logs in to the system to access a very long and repeatedly edited document, the keystream policy enables the user to decrypt it in real time.

The rest of the paper is organized as follows. Section 2 introduces the background and related work on collaborative systems and edit conflict solutions. Section 3 describes the assumptions and threat model. The system design, including the basic model, key management and keystream policy, is given in Sect. 4. Section 5 describes the implementation of LightCore, and the security analysis is presented in Sect. 6. In Sect. 7, we present the performance evaluation and suggest keystream policies. Finally, we conclude this paper and discuss our future work in Sect. 8.

2 Background and Related Work

2.1 Real-Time Collaborative Editing Systems

Collaborative editing is the practice of groups producing works together through individual contributions. In current collaborative editing systems, modifications (e.g., insertions, deletions, font format or color setting), marked with their authors, are propagated from one collaborator to the other collaborators in a timely manner (less than 500 ms). Applying collaborative editing to textual documents, programmatic source code [3, 4] or video has become mainstream.

Distributed systems techniques for ordering [5] and storing have been applied in most real-time collaborative editing systems [6–8], including collaborative editor software and browser-based collaborative editors. Most of these have adopted decentralized settings, but some well-known systems use central cloud resources to simplify synchronization between clients (e.g., Google Docs [9] and Microsoft Office Online [10]). In a collaborative editing system with decentralized settings, the clients bear more of the burden of broadcasting, ordering modifications and resolving conflicts. However, in a cloud-based collaborative system, cloud servers help to order and merge modifications, resolve conflicts, broadcast operations and store documents. Using cloud resources not only saves deployment and maintenance costs but also reduces the burden on clients.

However, the cloud may not be completely trusted by users. In order to protect sensitive data from unauthorized disclosure, data of users are encrypted before being sent to the cloud [11–14]. SPORC [15] encrypts modifications with block cipher AES at the client side, but the cloud server can only order, broadcast and store operations, so it takes much burden for the clients to resolve conflicts and restore the documents from series of operations when accessing the documents. In our scheme, data are encrypted with stream cipher, and no functionalities of cloud servers are impeded or disabled.

There are four main features in real-time collaborative editing systems: (a) highly interactive clients receive instant responses via the network, (b) volatile participants are free to join or leave during a session, (c) modifications are not pre-planned by the participants, and (d) edit conflicts on the same data are required to be well resolved to achieve consistent views at all the clients. As the modifications are collected and sent at intervals of less than 500 ms, the size of the input text is relatively small (about 2 to 4 characters), except for copy and paste operations. In this case, edit conflicts happen very frequently.

2.2 Operational Transformation

The edit conflict due to concurrent operations is one of the main challenges in collaborative editing systems. Without an efficient solution to edit conflicts, it may result in inconsistent text in different clients when collaborators concurrently edit the same document. There are many methods to resolve conflicts such as the lock mechanism [16, 17] and differ-patch [18–20]. Among these methods, operational transformation (OT) [1] adopted in our system is an efficient technology for consistency maintenance when concurrent operations frequently happen. OT was pioneered by C. Ellis and S. Gibbs [21] in the GROVE system. In more than 20 years, OT has evolved to acquire new capabilities in new applications [22–24]. In 2009, OT was adopted as a core technique behind the collaboration features in Apache Wave and Google Docs.

In OT, modifications from clients may be defined as a series of operations. OT ensures consistency by synchronizing shared state, even if concurrent operations arrive at different time points. For example, suppose that a string “preotty”, called S, is shared by the clients \(C_{1}\) and \(C_{2}\), with positions counted from 0. Concurrently, \(C_{1}\) modifies S into “pretty” by deleting the character at the \(3^{rd}\) position, and \(C_{2}\) modifies S into “preottily” by inserting “il” after the \(5^{th}\) position. The consistent result should be “prettily”. However, without an appropriate solution, inconsistency may arise at client \(C_{1}\): applying the insertion unchanged turns “pretty”, the result of the deletion, into “prettyil”.

OT preserves consistency by transforming the position of an operation based on the previously applied concurrent operations. With OT, for any two concurrent operations \(op_i\) and \(op_j\), regardless of the execution sequence, the OT function \(T(\cdot )\) satisfies \(op_i \circ T(op_j,op_i)\equiv op_j\circ T(op_i,op_j)\), where \(op_i \circ op_j\) denotes the sequence of operations containing \(op_i\) followed by \(op_j\), and \(\equiv \) denotes equivalence of the two sequences of operations. In the above example, the consistent result “prettily” can be achieved at client \(C_{1}\) by transforming the operation “insert ‘il’ after the \(5^{th}\) position” into “insert ‘il’ after the \(4^{th}\) position” based on the operation “delete the character at the \(3^{rd}\) position”.
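The “preotty” example can be checked with a small sketch of a transformation function. It is a simplified illustration, not the OT implementation used by any particular system: the `Insert` and `Delete` types and the `transform` function are hypothetical names, positions are 0-based offsets, and the cases that need extra rules (insertions at equal positions, overlapping deletions, an insertion inside a deleted range) are deliberately left unimplemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insert:
    pos: int    # 0-based offset at which the text is inserted
    text: str


@dataclass(frozen=True)
class Delete:
    pos: int    # 0-based offset of the first deleted character
    length: int


def apply(doc: str, op) -> str:
    if isinstance(op, Insert):
        return doc[:op.pos] + op.text + doc[op.pos:]
    return doc[:op.pos] + doc[op.pos + op.length:]


def transform(op, applied):
    """T(op, applied): rewrite op so that it can run after `applied`."""
    if isinstance(applied, Insert):
        shift = len(applied.text)
        if isinstance(op, Insert):
            if op.pos == applied.pos:
                raise NotImplementedError("equal positions need a tie-break")
            return Insert(op.pos + shift, op.text) if op.pos > applied.pos else op
        if op.pos >= applied.pos:
            return Delete(op.pos + shift, op.length)
        if applied.pos < op.pos + op.length:
            raise NotImplementedError("insertion inside the deleted range")
        return op
    end = applied.pos + applied.length
    if isinstance(op, Insert):
        if op.pos >= end:
            return Insert(op.pos - applied.length, op.text)
        return Insert(min(op.pos, applied.pos), op.text)
    if op.pos >= end:
        return Delete(op.pos - applied.length, op.length)
    if op.pos + op.length <= applied.pos:
        return op
    raise NotImplementedError("overlapping deletions")


s = "preotty"
op1 = Delete(3, 1)       # C1: delete the character at position 3
op2 = Insert(6, "il")    # C2: insert "il" after position 5
at_c1 = apply(apply(s, op1), transform(op2, op1))   # op1 first, then transformed op2
at_c2 = apply(apply(s, op2), transform(op1, op2))   # op2 first, then transformed op1
```

Both execution orders converge on the same string, which is the equivalence \(op_i \circ T(op_j,op_i)\equiv op_j\circ T(op_i,op_j)\) for this pair of operations.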

In a collaborative editing cloud service, the cloud servers are responsible for receiving and caching editing operations in a queue, imposing an order on the editing operations, iteratively executing OT on concurrent operations based on that order, broadcasting these editing operations to other clients and applying them to the server's local copy to maintain the latest version of the document. When receiving an operation \(op_{r_c}\) from a client, the cloud server executes OT as follows:

- Note that the operation \(op_{r_c}\) is generated from the client’s latest revision \(r_c\). The series

$$S_0\rightarrow S_1\rightarrow \ldots \rightarrow S_{r_c}\rightarrow S_{r_c+1} \rightarrow \ldots \rightarrow S_{r_H} $$

denotes the operation series stored in the cloud, and \(op_{r_c}\) is relative to \(S_{r_c}\).

- The cloud server needs to compute a new \({op_{r_c}}'\) relative to \(S_{r_H}\). The cloud server first computes a new \({op_{r_c}}'\) relative to \(S_{r_c+1}\) by computing \(T(S_{r_c+1},op_{r_c})\). Similarly, the cloud server repeats this step for \(S_{r_c+2}\) and so forth until an \({op_{r_c}}'\) relative to \(S_{r_H}\) is obtained.
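The iterative step can be sketched as a loop over the part of the history that the client had not yet seen. This is a minimal illustration restricted to insertions; `OTServer`, `transform_insert` and the tie-break by author name are assumptions introduced here, not details given in the text.

```python
def transform_insert(op, applied):
    """Shift insertion `op` so that it can run after the already applied insertion."""
    pos, text, author = op
    a_pos, a_text, a_author = applied
    # An earlier position (or an equal one from a lower-ranked author) pushes op right.
    if a_pos < pos or (a_pos == pos and a_author < author):
        pos += len(a_text)
    return (pos, text, author)


class OTServer:
    def __init__(self, doc: str = ""):
        self.doc = doc
        self.history = []   # history[k] moved the document from revision k to k + 1

    def receive(self, op, client_rev: int):
        """Rebase op from revision client_rev onto the head revision, then apply it."""
        for applied in self.history[client_rev:]:
            op = transform_insert(op, applied)
        pos, text, _ = op
        self.doc = self.doc[:pos] + text + self.doc[pos:]
        self.history.append(op)
        return op, len(self.history)   # transformed operation and new head revision


server = OTServer("xyz")
# Alice and Bob both edit revision 0; Alice's operation reaches the server first.
alice_op, rev_after_alice = server.receive((1, "a", "alice"), client_rev=0)
bob_op, rev_after_bob = server.receive((2, "b", "bob"), client_rev=0)
```

Bob intended to insert between ‘y’ and ‘z’; after rebasing over Alice's insertion his position moves from 2 to 3, matching the \((j+1)^{th}\) position in the Alice and Bob example of the introduction.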

Edit conflicts are also required to be resolved by OT at the client. Considering network delay and the requirement of non-block editing at the client, the local editing operations may not be processed by the server timely. Therefore, the client should cache its local operations in its queue, and execute OT on the concurrent operations based on these cached operations.

3 Assumptions and Threat Model

LightCore functionally allows multiple collaborators to edit the shared documents and view changes from other collaborators using cloud resources. We assume that all the authorized collaborators mutually trust each other and strive together to complete the same task (e.g., drafting a report or programming a system). That is, all the changes committed by the client of any collaborator are well-intentioned, and respectable by other collaborators.

The collaborative privileges are granted and managed by a special collaborator, called the initiator, who is in charge of creating the target document for future collaborative editing, generating the shared secret passcode and distributing it among all authorized collaborators through out-of-band channels. The passcode is used for deriving the master encryption key to protect the shared contents and updates. We assume that the passcode is strong enough to resist guessing attacks and brute force attacks. The master key with a random string is used to generate the data key, which initializes cryptographic algorithms to generate keystreams. We assume that the cryptographic algorithms to encrypt data are secure. Meanwhile, we assume that the random string will not be repeatedly generated by the clients.

We assume that the client of each collaborator runs in a secure environment which guarantees that

- the generation and distribution of the shared secret and the privilege management on the client of the initiator are appropriately maintained;

- the secret passcode and keys that appear in the clients are not stolen by any attacker;

- the communication channel between the client and the cloud has sufficient capacity to transmit all necessary data in real time and is protected by existing techniques such as SSL/TLS.

In LightCore, the cloud server is responsible for storing and maintaining the latest content, executing operations (delete, insert, etc.) on the content, resolving operational conflicts, and broadcasting the updates among multiple clients. The cloud server is considered to be honest-but-curious. To avoid risking its reputation, the honest cloud server will timely and correctly disseminate modifications committed by all the authorized clients without maliciously attempting to add, drop, alter, or delay operation requests. However, motivated by economic benefits or curiosity, the cloud provider or its internal employees may spy on or probe into the shared content, determine the document type (e.g., a letter) by observing the format and layout, and discover the pivotal part of the documents by analyzing the frequency and quantity of access.

Additionally, we assume that the cloud servers will protect the content from access by unauthorized users and from other traditional network attacks (such as DoS attacks), and keep the shared documents available, for example, by redundancy.

4 System Design

This section describes the system design of LightCore. We firstly give the basic model, including the specifications of clients and servers, and the encryption scheme. Then, the key management of LightCore is presented, and we analyze two different ways to generate keystreams.

4.1 Basic Model

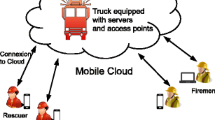

Similar to existing collaborative editing systems, LightCore involves a group of collaborative users and a cloud server. Each client communicates with the server over the Internet, to send its operations and receive modifications from others in real time. For each document, the server maintains a history of versions. That is, it keeps receiving operations from users, and these modifications make the document shift from a version to another one. When applying modifications on a version, the server may need OT to transform some operations. The server also keeps sending the current freshest version to users, that is, all transformed operations since the last version is sent. Because a user is still editing on the stale version when the freshest one is being sent, the OT processing may also be required to update its view at the client side. The above procedure is shown in Fig. 1.

In LightCore, we design the crypto module for protecting the documents at the client side. The input characters of insertion operation (not deletion operation without inputs) are encrypted with keystreams byte by byte, but the position of each operation is sent in plaintext. When receiving the operation from one client, the cloud server may transform the operation by OT and apply it in the latest version based on the position. That is, no functionalities of the cloud server are impeded or disabled in ciphertext. After receiving the operation from other users through the cloud servers, the input characters of the operation will be firstly decrypted, so that it can be presented at the screen in plaintext.

Client. At the client side, users are authenticated by the cloud before entering the system. The collaborative privileges are granted and managed by the initiator, who is in charge of creating the target document. Therefore, only authorized users can download or edit the document. Meanwhile, the master key to generate keystreams, which are to encrypt the text of the document, is only delivered to the authorized users by the initiator. Without the master key, both the cloud server and attackers from the network cannot read or understand the document.

There are two main phases at the client side to edit a document in LightCore: the pre-edit phase and the editing phase. In the pre-edit phase, the client requests a document to maintain a local copy, and the server responds with the current freshest version of the document. Before generating the local copy, the user is required to input a passcode, and the document is decrypted with the master key derived from the passcode. This decryption time depends on the length of the document, unlike that of decrypting the small text of each operation (Op) in the editing phase. Then, the local copy is used for the user’s edit operations, so that edit operations will not be interrupted by network delay or congestion. In the editing phase, the client encrypts the input characters of each operation before sending it to the cloud server. Meanwhile, the operation is cached in a queue (Ops) so that its concurrent operations can be transformed by OT while it has not yet been successfully received and processed by the server. In the system, every operation is associated with a revision number of the document, which denotes the version that the operation is generated from. When receiving an operation of another client from the cloud server, the client first decrypts the input characters of the operation. Then, the client may execute OT on the operation based on the revision number, and apply the modification to its local copy.

Server. First of all, to follow users’ requirements and the specification, access control is enforced by the cloud server. The server maintains a history of versions for each document. In the pre-edit phase, the server sends the freshest encrypted document to the client, and holds an ordered list of modification records for the document (Ops). Every modification record contains an operation, its revision number and its author information. In the editing phase, the server keeps receiving operations from the clients, transforming them by executing OT functions based on the modification records, ordering each operation by imposing a global revision number on it and broadcasting these updated operations with new revision numbers to other collaborative clients. Meanwhile, the cloud server merges these operations into a freshest version of the document in ciphertext, and adds them to the modification records.

Encrypted Operations. We preserve confidentiality for users’ data by adopting symmetric cryptographic algorithms with the “byte-by-byte” encryption feature at the client side. In our system, each modification at the client is called an operation. There are two types of edit operations: insertion and deletion. The other operations including copy and paste can also be represented by these two types of operations. An insertion is comprised of the position of the insertion in the document and the inserted text. And a deletion is comprised of the position of the deletion and the length of deleted text. Each inserted text segment of the operation is associated with an attribute called keystream_info, containing the parameters to encrypt and decrypt it. The other operations related to setting font or color are also supported by taking font or color value as attributes.
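The following sketch illustrates how a server can apply encrypted insertions and plaintext deletions by position and length alone, using the ‘Collaborative Document Cloud’ example from the introduction. Several things are assumptions made for the demo: the `keystream` function is a SHA-256 based stand-in rather than one of the ciphers discussed in the paper, the text is ASCII so that one character is one byte, the document is stored as per-byte cells, and the keys and dictionary field names are hypothetical.

```python
import hashlib


def keystream(key: bytes, offset: int, length: int) -> bytes:
    """Stand-in keystream generator (SHA-256 over key || block index).

    Not the paper's cipher: it only supplies deterministic bytes for the demo.
    """
    out = bytearray()
    block = offset // 32
    skip = offset % 32
    while len(out) < skip + length:
        out += hashlib.sha256(key + block.to_bytes(8, "big")).digest()
        block += 1
    return bytes(out[skip:skip + length])


def make_insert(key: bytes, key_id: str, offset: int, pos: int, text: str) -> dict:
    """Client side: encrypt the inserted text; the position stays in plaintext."""
    plain = text.encode("ascii")
    cipher = bytes(p ^ k for p, k in zip(plain, keystream(key, offset, len(plain))))
    return {"type": "insert", "pos": pos, "text": cipher,
            "keystream_info": {"key_id": key_id, "offset": offset}}


def server_apply(cells: list, op: dict) -> None:
    """Server side: splice by position and length only; no key is needed."""
    if op["type"] == "insert":
        info = op["keystream_info"]
        new = [(byte, info["key_id"], info["offset"] + i)
               for i, byte in enumerate(op["text"])]
        cells[op["pos"]:op["pos"]] = new
    else:
        del cells[op["pos"]:op["pos"] + op["length"]]


def client_decrypt(cells: list, keys: dict) -> str:
    """Client side: decrypt each byte with the keystream byte named by its attributes."""
    return "".join(chr(byte ^ keystream(keys[key_id], offset, 1)[0])
                   for byte, key_id, offset in cells)


keys = {"k1": b"data key of user 1", "k2": b"data key of user 2"}  # hypothetical keys
cells = []   # server-side document: one (cipher byte, key_id, offset) cell per byte
server_apply(cells, make_insert(keys["k1"], "k1", 0, 0, "Collaborative Document Cloud"))
server_apply(cells, {"type": "delete", "pos": 14, "length": 8})
server_apply(cells, make_insert(keys["k2"], "k2", 0, 14, "Editing"))
plaintext = client_decrypt(cells, keys)
```

Because encryption preserves length, the deletion at position 14 and the later insertion at the same position refer to the same offsets in ciphertext as they would in plaintext.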

By applying the byte-by-byte encryption algorithms, the length of each input text is kept unchanged after being encrypted. The cloud server can conduct OT and other processing without understanding the ciphertext. Compared with block cipher, applying stream cipher (including the CTR mode of block cipher) in the system has the following advantages:

- It is feasible for the cloud server to help resolve conflicts. To satisfy real-time view presentation, the operations are submitted every 500 ms, so the input text of an operation is generally very small (about 2 to 4 characters). Applying block cipher to encrypt characters block by block makes it difficult for the server to conduct OT functions because users modify the text in characters. That is, the position of the operation related to OT would be extremely difficult to determine when modifying a character in a block with an unfixed length of padding. In this case, the OT processing overhead of the server would be transferred to the clients.

- It is feasible for the cloud server to hold a freshest well-organized document. Without understanding the content of the text encrypted by stream cipher, the server can merge operations and apply them to the latest version of the document based on the position and unchanged length of the text. So, a freshest well-organized document in ciphertext is kept at the server. However, it is costly for the server to apply operations encrypted by block cipher to the latest version of the document. That is, each character would have to be encrypted into one block with fixed-length padding to support operations in characters, which leads to an enormous waste in storage and transmission; otherwise, a series of operations would have to be processed at the client side when a user requests the document in the pre-edit phase. Although clients can actively submit a well-organized document to the cloud periodically, the transmission cost would also increase the burden on clients.

4.2 Key Management

We construct a crypto module at the client to encrypt and decrypt the text of the document. In the crypto module, both stream cipher and the CTR mode of block cipher are supported. Each document is assigned a master key (denoted as mk), derived from a passcode. When users access the document, the passcode is required to be input. The passcode may be transmitted through out-of-band channels. We assume that the delivery of the passcode among users is secure.

The text segment of the document is encrypted with the data key (denoted as DK), which initializes the cryptographic algorithm to generate keystreams. The data key is generated by computing DK = H(mk, userId || keyId), where H is a secure keyed-hash message authentication code (HMAC) function (e.g., HMAC-SHA-256), mk is the master key, userId is the identity of the collaborator, and keyId is a random key identifier. The userId, which has a unique value in the system, is attached to each operation as the attribute author to distinguish different writers. The keyId generated by the client is a parameter contained in the attribute keystream_info. For the CTR mode of block cipher, keystream_info contains a key identifier, a random string nonceIV, an initial counter and an offset in a block; the string nonceIV || counter is the input of the block cipher, to generate keystreams, and the counter is increased by one after each block. For stream cipher, it contains a key identifier and an initial position offset of the keystream; the initial position offset locates the byte of the keystream used to decrypt the first character of the text segment. The randomly generated keyId and nonceIV ensure that the keystreams will not be reused. Therefore, different collaborators with different data keys generate non-overlapping keystreams, and bytes of keystreams are not reused to encrypt data.

After encrypting the input texts, the client will send the operation with the attributes author and keystream_info. Therefore, authorized readers and writers with the same master key can compute the data key and generate the same keystreams, based on the attributes when decrypting the texts.
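A minimal sketch of this derivation with Python's standard library follows. The text only states that mk is derived from a passcode, so the use of PBKDF2 with a per-document salt and 100,000 iterations is an assumption, as are the function names and the example values.

```python
import hashlib
import hmac
import os


def derive_master_key(passcode: str, salt: bytes) -> bytes:
    # Assumption: PBKDF2-HMAC-SHA-256; the text only says mk is derived from a passcode.
    return hashlib.pbkdf2_hmac("sha256", passcode.encode("utf-8"), salt, 100_000)


def derive_data_key(mk: bytes, user_id: str, key_id: bytes) -> bytes:
    """DK = H(mk, userId || keyId) with H = HMAC-SHA-256."""
    return hmac.new(mk, user_id.encode("utf-8") + key_id, hashlib.sha256).digest()


salt = b"per-document salt"     # hypothetical; must be known to all collaborators
passcode = "correct horse battery staple"

# Writer: picks a fresh random keyId and derives the data key for encryption.
mk = derive_master_key(passcode, salt)
key_id = os.urandom(16)
dk_writer = derive_data_key(mk, "alice", key_id)

# Reader: recomputes the same data key from the passcode and the attributes
# author ("alice") and keystream_info (key_id) attached to the operation.
dk_reader = derive_data_key(derive_master_key(passcode, salt), "alice", key_id)
dk_other = derive_data_key(mk, "alice", os.urandom(16))
```

Plain concatenation of userId and keyId is unambiguous only if one of the two fields has a fixed length; here the 16-byte keyId plays that role.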

4.3 Keystream Policies

Both stream cipher and block cipher CTR mode are applied in our system. In general, stream cipher has higher encryption speed and smaller delay than block cipher [2], but the performance of the stateful stream cipher may be degraded when decrypting a document generated from random insertions and deletions. In order to achieve an efficient cryptographic scheme, we design two key update rules for stream cipher, which take full advantage of stream cipher while matching the features of collaborative editing cloud services.

Comparison of Two Types of Cipher. In both stream cipher and the CTR mode of block cipher, each byte of the plaintext is encrypted one at a time with the corresponding byte of the keystream, to give a byte of the ciphertext. The execution of both types of cipher involves an initialization phase and a keystream generation phase. We test the initialization latency and keystream generation speed of ISAAC, Rabbit, RC4 and AES-CTR in JavaScript on browsers. The results in Table 1 illustrate that these stream cipher algorithms are much faster than AES, but all of them have a relatively heavy initialization phase before generating keystreams. For example, the time of executing 1000 initializations of RC4 is approximately equal to that of generating 0.38 MB of keystream. For the CTR mode of stateless block cipher, keystream generation depends only on the counter used as the input of the block cipher. Given the counter and the cryptographic key, the CTR mode of block cipher outputs the corresponding bytes of the keystream.

The CTR mode of block cipher generally requires only one initialization (round key schedule) for the encryption or decryption of multiple blocks. Unlike the CTR mode of block cipher, stream cipher is stateful: given a key, to generate the \(j^{th}\) byte of the keystream, all \(k^{th}\) bytes \((k < j)\) must be generated first. Therefore, when decrypting documents by stream cipher, insertion operations in random positions (e.g., an insertion in Line 1 after another in Line 2) require the decrypters to cache bytes of keystreams for later use, and deletion operations cause the decrypters to generate many obsoleted bytes of keystreams. Examples of the impact of random insertions and deletions are shown in Fig. 2.
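The statefulness described above can be made concrete with a textbook RC4, one of the ciphers tested in this section. The class below is written for illustration only and is not the prototype's implementation; the helper `keystream_byte_at` is a hypothetical name introduced here to show that reaching byte j costs one initialization plus j + 1 generation steps.

```python
class RC4:
    """Textbook RC4, used only to illustrate a stateful keystream generator."""

    def __init__(self, key: bytes):
        # Initialization phase (key scheduling): 256 swaps over the state array.
        self.s = list(range(256))
        j = 0
        for i in range(256):
            j = (j + self.s[i] + key[i % len(key)]) % 256
            self.s[i], self.s[j] = self.s[j], self.s[i]
        self.i = 0
        self.j = 0
        self.position = 0  # number of keystream bytes generated so far

    def next_byte(self) -> int:
        # Keystream generation phase: each output byte mutates the state.
        self.i = (self.i + 1) % 256
        self.j = (self.j + self.s[self.i]) % 256
        self.s[self.i], self.s[self.j] = self.s[self.j], self.s[self.i]
        self.position += 1
        return self.s[(self.s[self.i] + self.s[self.j]) % 256]

    def keystream(self, n: int) -> bytes:
        return bytes(self.next_byte() for _ in range(n))


def keystream_byte_at(key: bytes, j: int):
    """Return (byte j of the keystream, number of bytes that had to be generated)."""
    cipher = RC4(key)
    value = 0
    for _ in range(j + 1):
        value = cipher.next_byte()
    return value, cipher.position
```

A CTR-mode decrypter, by contrast, can compute the block containing byte j directly from the key, nonceIV and counter, without producing the preceding blocks.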

When decrypting a document generated from random insertions, resource-limited clients without enough cache may need to repeatedly initialize the stream cipher and generate obsoleted bytes of keystreams. If all collaborative clients input characters in sequential positions of the document, the position of the inserted texts in the document will be consistent with the position of the used bytes in the keystream. In this case, decrypting the document requires only one initialization and the sequentially generated keystream is fully used. However, because of random insertions, the text segments of the document are not input and encrypted in chronological order, so the positions of the text segments may be inconsistent with the positions of their keystream bytes. For example: a character \(c_{1}\) is inserted in the position previous to the character \(c_{2}\), which is encrypted with the \(i^{th}\) byte of the keystream; as the keystream cannot be reused for security reasons, \(c_{1}\) is encrypted with the \(j^{th}\) byte where \(i<j\); to decrypt \(c_{1}\), the bytes from \(0^{th}\) to \(j^{th}\) must first be generated; if the \(i^{th}\) byte is not cached, the client must re-initialize the stream cipher and generate the bytes from \(0^{th}\) to \(i^{th}\) when decrypting \(c_{2}\), so these bytes, called obsoleted bytes, are generated repeatedly; otherwise, the bytes from \(0^{th}\) to \(i^{th}\) must be preserved until they are used. In practice, it is difficult to determine whether and when the generated bytes of the keystream will be used again. The size of the cached bytes may therefore exceed that of the document, and caching so many keystream bytes is not advisable when the document is large.
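The effect of out-of-order insertions can be estimated with a small counting model. The sketch below is a simplification under stated assumptions: all segments use one data key, the decrypter walks the document from beginning to end, and it has no keystream cache at all. The function name and the tuple layout are hypothetical.

```python
def decryption_cost(segments):
    """Count initializations and obsoleted keystream bytes for a cache-less decrypter.

    segments: list of (offset, length) pairs in document order, where offset is
    the keystream position used for the first character of the segment.
    Returns (initializations, obsoleted_bytes).
    """
    initializations = 1   # the cipher is initialized once before decryption starts
    position = 0          # next keystream byte the cipher will output
    obsoleted = 0
    for offset, length in segments:
        if offset < position:
            # The needed bytes were already produced and discarded: start over.
            initializations += 1
            position = 0
        # Bytes between the current position and the offset are generated but unused.
        obsoleted += offset - position
        position = offset + length
    return initializations, obsoleted


# Text typed sequentially: document order matches keystream order.
sequential = [(0, 256), (256, 256), (512, 256)]

# The text typed last (keystream bytes 512..767) was inserted at the top of the document.
out_of_order = [(512, 256), (0, 256), (256, 256)]
```

In the second layout the decrypter generates 512 bytes it cannot use yet and then has to re-initialize, which is the behavior the key update rule for insertions is meant to avoid.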

Random deletions also cause the decrypter to generate many obsoleted bytes with stream cipher. For example, a text segment \(T=<c_1,c_2,...,c_n>\) is first inserted by a client, and the characters \(<c_2,...,c_{n-1}>\) are deleted by another client; if all the characters of T are encrypted with the bytes of the keystream initialized by the same key, the bytes of the keystream related to \(<c_2,...,c_{n-1}>\) must be generated to decrypt \(c_n\). In this example, \(n-2\) obsoleted bytes of the keystream are generated. However, if \(c_n\) is encrypted with the bytes of another keystream, which is initialized by an updated key, the \(n-2\) obsoleted bytes would not be generated. In this case, only one additional initialization with the new data key is required. Note that this is efficient only when the time to generate the contiguous deleted bytes of the keystream is longer than that of the additional initialization. If the number of deleted characters is small, frequently initializing the stream cipher may be less efficient.
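A rough worked example of this break-even condition can be derived from the RC4 measurement quoted earlier in this section (about 1000 initializations cost as much as generating 0.38 MB of keystream). The symbols \(t_{init}\) (time of one initialization), \(r\) (keystream generation rate) and \(d\) (number of contiguous deleted bytes) are introduced here for illustration and are not the paper's notation; generation time is assumed to be proportional to the number of bytes.

\[ 1000\, t_{init} \approx \frac{0.38\ \text{MB}}{r} \;\Rightarrow\; t_{init} \approx \frac{0.38\ \text{KB}}{r}, \qquad \frac{d}{r} > t_{init} \;\Leftrightarrow\; d \gtrsim 0.38\ \text{KB}. \]

Under these assumptions, encrypting \(c_n\) under an updated key pays off for the decrypter only when the run of deleted characters before it is longer than roughly 0.38 KB, i.e., a few hundred characters for RC4 in the tested environment.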

Key Update Rules for Stream Cipher. If a stable performance is expected, adopting the stateless CTR mode of block cipher is suggested. However, to take full advantage of fast stream cipher in LightCore, we design two key update rules to mitigate the performance degradation for stream cipher: the user (or encrypter) generates a new data key, re-initializes the stream cipher algorithm and then generates keystreams by bytes to encrypt texts. The key update rules are designed by balancing (a) the cost of initialization and keystream generation, and (b) the distribution and order of the insertion and deletion operations.

One key update rule for random insertions is to keep the positions of the used bytes in the keystream consistent with the positions of the inserted characters in the document. In LightCore, we update the data key to initialize the stream cipher when the user moves to another position previous to the line of the current cursor to insert texts. Therefore, under the same data key, we can ensure that the positions of the bytes in the keystream used to encrypt a text segment T are larger than those of the bytes used to encrypt the text in the positions previous to T.

The second key update rule for random deletions is to limit the length of the keystream under each data key. The client updates the key when the generated or used bytes of the keystream come to a predetermined length. The value of the predetermined length should balance the cost of initialization and keystream generation. If the value is too small, it may frequently initialize the stream cipher so that the time-consuming initialization may bring much overhead. If the value is too large, lots of deletions may also cause much overhead for generating obsoleted bytes of keystreams related to the deleted characters. By evaluating the performance of stream cipher with the key update rules of different predetermined length, a suitable predetermined length can be set in different use scenarios, which will be illustrated in Sect. 7.
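The two key update rules can be sketched from the encrypter's point of view as follows. This is an illustrative model, not the prototype's code: the class and method names, the 16-byte keyId and the per-line cursor tracking are assumptions, and the sketch only allocates keystream positions without performing any encryption.

```python
import os


class KeystreamPolicy:
    """Decides which data key and keystream offset the next insertion uses."""

    def __init__(self, max_length: int):
        self.max_length = max_length   # predetermined keystream length per data key
        self.key_id = os.urandom(16)   # identifies the current data key
        self.used = 0                  # keystream bytes consumed under this key
        self.last_line = None          # line of the previous insertion
        self.key_updates = 0

    def _update_key(self) -> None:
        self.key_id = os.urandom(16)
        self.used = 0
        self.key_updates += 1

    def allocate(self, line: int, length: int):
        """Return (key_id, offset) for `length` characters inserted at `line`."""
        # Rule for insertions: the user moved to a line before the current one.
        moved_back = self.last_line is not None and line < self.last_line
        # Rule for deletions: the keystream reached the predetermined length.
        exhausted = self.used >= self.max_length
        if moved_back or exhausted:
            self._update_key()
        offset = self.used
        self.used += length
        self.last_line = line
        return self.key_id, offset
```

For example, with `max_length=512`, two 256-character insertions on the same line share one key at offsets 0 and 256; the next insertion triggers a key update because the limit has been reached, and an insertion on an earlier line triggers another one. In this sketch a single insertion may overshoot the limit, since the check happens before the allocation.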

5 Implementation

We built the LightCore prototype on top of Etherpad, a Google open-source real-time collaborative system. The client-side code, implemented in JavaScript, can be executed on different browsers (IE, Chrome, Firefox, Safari, etc.). The cloud server of the system is implemented on Node.js, a platform built on Chrome’s JavaScript runtime. Building on the implementation of Etherpad, we had to address the following issues when implementing the prototype system.

5.1 Client Improvement

In the pre-edit phase, the decrypter shall decrypt the whole document from the beginning to the end. If stream cipher is used and the data keys are updated, the decrypter may find that multiple data keys are used alternately; for example, a text segment encrypted with the data key \(DK_1\) may be cut into two text segments by inserting another text segment encrypted with another data key \(DK_2\); this results in an alternately used data key list \(DK_1\), \(DK_2\), \(DK_1\). Therefore, in the pre-edit phase, the decrypter shall keep the statuses of multiple stream ciphers initialized with different data keys; otherwise, it may need to initialize the cipher with the same data key and generate the same keystream more than once. To balance the memory requirement and the efficiency, in the prototype, the client maintains the status of two stream ciphers initialized with different data keys for each author of the document. One is called the current decryptor, and the other, which backs up the current one, is called the backup decryptor. When decrypting a text segment encrypted with a new data key, the client backs up the current decryptor and updates the current decryptor by re-initializing it with the new data key. If a text segment needs to be decrypted by the backup decryptor, the client exchanges the current decryptor with the backup one. The bytes of the keystream generated by each decryptor are cached until a predetermined length (1 KB in the prototype system) is reached or the decryptor is updated.
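The current/backup arrangement can be sketched as a two-slot structure kept per author. The names below are hypothetical, `make_cipher` stands for whatever routine initializes a stream cipher from a data key, and the 1 KB keystream cache mentioned above is left out for brevity.

```python
class DecryptorPair:
    """Per-author pair of stream cipher states: a current and a backup decryptor."""

    def __init__(self, make_cipher):
        self.make_cipher = make_cipher   # hypothetical factory: key_id -> cipher state
        self.current = None              # (key_id, cipher) or None
        self.backup = None               # (key_id, cipher) or None
        self.initializations = 0

    def get(self, key_id):
        """Return a cipher state for key_id, initializing one only when necessary."""
        if self.current is not None and self.current[0] == key_id:
            return self.current[1]
        if self.backup is not None and self.backup[0] == key_id:
            # Exchange the current decryptor with the backup one.
            self.current, self.backup = self.backup, self.current
            return self.current[1]
        # New data key: back up the current decryptor and initialize a new one.
        self.backup = self.current
        self.current = (key_id, self.make_cipher(key_id))
        self.initializations += 1
        return self.current[1]


pair = DecryptorPair(make_cipher=lambda key_id: {"key_id": key_id, "position": 0})
for key_id in ["DK1", "DK2", "DK1"]:
    pair.get(key_id)
```

For the key list \(DK_1\), \(DK_2\), \(DK_1\) from the example above, the pair performs two initializations, whereas a client holding a single decryptor would need three.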

In the editing phase, the input characters of each insertion are encrypted before being sent to the cloud; so, the user (as a decrypter) receives and decrypts texts in the same order in which the encrypter encrypts them. The client maintains the status of only one decryptor for each client to decrypt the operations from other clients. The bytes of the keystream are generated and used sequentially, and the generated keystream is not cached since it will not be reused.

Attributes Update. In order to decrypt the text correctly, the attribute keystream_info, including the position information of the used bytes of the keystream, is attached to each insertion operation. The position information is expressed by the offset of the byte in the keystream related to the first character of the insertion. However, random insertions will change the correct value of keystream_info. For example: a text segment \(T = <c_{1},c_{2},...,c_{n}>\) is encrypted by the bytes from \(k^{th}\) to \({(k+n-1)}^{th}\) of one keystream, and the offset k is regarded as the value of attribute keystream_info A; then, a new text is inserted between \(c_{i}\) and \(c_{i+1}\) of T; finally, T is cut into two text segments \(T_{1} = <c_{1},c_{2},...,c_{i}>\) and \(T_{2} = <c_{i+1},c_{i+2},...,c_{n}>\) with the same value of A. In fact, the value of A of \(T_{2}\) should be revised to \(k+i\) when decrypting the full document. Fortunately, this attribute value is easily revised by the client in the pre-edit phase. Instead of maintaining the keystream_info attributes of all the old operations and revising them for each random insertion in the editing phase, it is more efficient for the client to calculate the correct attribute value of the latter text segment from the length of the preceding text segments with the same keystream_info, because all the texts and the attributes are downloaded from the server during the decryption process in the pre-edit phase.
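The client-side revision in the pre-edit phase can be sketched as a single pass over the downloaded segments. The dictionary fields and the function name are assumptions made for this example; the sketch also assumes that pieces split from one segment still appear in their original relative order in the document.

```python
def revise_offsets(segments):
    """Recompute keystream offsets for segments that were split by insertions.

    segments: list of dicts with 'key_id', 'offset' and 'length', in document order.
    Pieces of one original segment carry the same (key_id, offset); each later
    piece is shifted by the total length of the earlier pieces.
    """
    consumed = {}   # (key_id, offset) -> characters already seen with this attribute
    revised = []
    for segment in segments:
        info = (segment["key_id"], segment["offset"])
        already = consumed.get(info, 0)
        revised.append({**segment, "offset": segment["offset"] + already})
        consumed[info] = already + segment["length"]
    return revised


# T (k = 100, n = 10) was split after its 4th character by a segment under another key.
document = [
    {"key_id": "A", "offset": 100, "length": 4},   # T1
    {"key_id": "B", "offset": 0, "length": 3},     # inserted text
    {"key_id": "A", "offset": 100, "length": 6},   # T2, stored with the stale offset
]
fixed = revise_offsets(document)
```

Here \(T_2\) receives the offset \(k+i = 104\), while the stored attributes on the server remain unchanged.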

5.2 Server Improvement

Attributes Update. The correct value of attribute keystream_info can also be changed by random deletions. For example: a text segment \(T = <c_{1},c_{2},...,c_{n}>\) is encrypted by the bytes from \(k^{th}\) to \({(k+n-1)}^{th}\) of one keystream, and the offset k is regarded as the value of attribute keystream_info A; then, a substring \(<c_{i+1},c_{i+2},...,c_{j}> ( i>0, j<n)\) of T is deleted; finally, T is cut into two text segments \(T_{1} = <c_{1},c_{2},...,c_{i}>\) and \(T_{2} = <c_{j+1},c_{j+2},...,c_{n}>\) with the same value of A. In fact, the value of A of \(T_{2}\) should be updated to \(k+j\) when decrypting the full document. This revision is performed at the server side; it cannot be done at the client side.

As all the text segments with the related attributes are stored at the cloud, and the servers apply each operation in the latest version of the document, a small embedded code to update the value of keystream_info is executed at the cloud server, when the cloud server is processing the received operations. Instead of revising it at the client which does not maintain the attributes of deleted texts, it is more reasonable for the server to revise it and store the updated attributes with the text.
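The server-side attribute update for a deletion inside one segment can be sketched as below. The function and field names are hypothetical and do not correspond to Etherpad's internal API; the server only adjusts the offset attribute and never needs a key.

```python
def delete_range(segment, start, end):
    """Delete characters [start, end) of a segment and fix the keystream offsets.

    segment: dict with 'key_id', 'offset' (k) and 'length' (n).
    In the notation of the text, deleting c_{i+1}..c_j means start = i, end = j.
    Returns the surviving pieces in document order.
    """
    k = segment["offset"]
    n = segment["length"]
    pieces = []
    if start > 0:
        # T1 keeps the original offset k.
        pieces.append({**segment, "length": start})
    if end < n:
        # T2 starts at c_{j+1}, which was encrypted with keystream byte k + j.
        pieces.append({**segment, "offset": k + end, "length": n - end})
    return pieces


original = {"key_id": "A", "offset": 100, "length": 10}
remaining = delete_range(original, start=3, end=7)
```

With \(k = 100\), \(i = 3\) and \(j = 7\), the second piece is stored with the offset \(k+j = 107\), so a later decrypter can locate its keystream bytes without knowing how many characters were deleted.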

5.3 Character Set and Special Character

The client is implemented in JavaScript in browsers that use the UTF-16 character set, so the encrypted texts may contain illegal characters. In the UTF-16 character set, each character in BMP plane-0 (including ASCII characters, East Asian languages characters, etc.) [28] is represented as 2 bytes, and 0xDF80 to 0xDFFF in hexadecimal is reserved. Therefore, in the LightCore client, if the encrypted result is in the zone from 0xDF80 to 0xDFFF (i.e., an illegal character in UTF-16), it will be XORed with 0x0080 to make it a legal UTF-16 character. In the prototype, LightCore supports ASCII characters, which are in the zone from 0x0000 to 0x007F. At the same time, the above XORing may make the decrypted character an illegal ASCII character; for example, the input ‘a’ (0x0061 in hexadecimal) will result in 0x00E1, an illegal ASCII character. So, in this case, the decrypter will XOR it with 0x0080 again if it finds that the decrypted result is in the zone from 0x0080 to 0x00FF. We plan to support the characters of other languages in the future, and one more general technique is to map the encrypted result in the zone from 0xDF80 to 0xDFFF into a 4-byte legal UTF-16 character.
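The XOR adjustment described above can be sketched per 16-bit code unit. The zone boundaries are taken verbatim from the text; treating the keystream as 16-bit units and the function names are assumptions of this example, which only covers ASCII plaintext as in the prototype.

```python
RESERVED_LOW, RESERVED_HIGH = 0xDF80, 0xDFFF   # reserved zone as stated in the text
FLIP = 0x0080


def encrypt_unit(plain: int, key_unit: int) -> int:
    """Encrypt one ASCII code unit (0x0000..0x007F) with a 16-bit keystream unit."""
    cipher = plain ^ key_unit
    if RESERVED_LOW <= cipher <= RESERVED_HIGH:
        cipher ^= FLIP   # move the result out of the reserved zone
    return cipher


def decrypt_unit(cipher: int, key_unit: int) -> int:
    """Invert encrypt_unit; a result in 0x0080..0x00FF signals that FLIP was applied."""
    plain = cipher ^ key_unit
    if 0x0080 <= plain <= 0x00FF:
        plain ^= FLIP
    return plain


# The example from the text: 'a' (0x0061) whose ciphertext falls in the reserved zone.
key_unit = 0xDF80 ^ 0x0061
cipher = encrypt_unit(0x0061, key_unit)
intermediate = cipher ^ key_unit   # what the decrypter sees before the second XOR
recovered = decrypt_unit(cipher, key_unit)
```

The decrypter can tell the two cases apart because a correctly decrypted ASCII character never lies in 0x0080 to 0x00FF, so no extra flag has to be stored with the ciphertext.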

In our system, the newline character (0x000A in hexadecimal) is a special character that is not encrypted. As mentioned above, the cloud servers need the position information of user operations to finish processing. In Etherpad and LightCore, the position is represented as (a) the line number and (b) the index at that line. So, the unencrypted newline characters enable the servers to locate the correct positions of user operations. This method discloses some information to the curious servers, as well as other format attributes; see Sect. 6 for the detailed analysis.

6 Security Analysis

In LightCore, all user data including all operations and every version of the documents are processed in the cloud. Attackers from inside or outside might attempt to alter or delete the user data, or disrupt the cloud services. However, for the reputation and benefits of the cloud service provider, the honest-but-curious cloud servers are supposed to preserve integrity, availability and consistency for the data of users. The cloud service provider will deploy adequate protections to prevent such external attacks, including access control mechanisms to prevent malicious operations on a document by other unauthorized users.

Preserving the confidentiality of users’ documents is the main target of LightCore. Firstly, in our system, only the authorized users with the shared master key can read the texts of the documents. LightCore adopts stream cipher and the CTR mode of block cipher to encrypt data at the client side. In the editing phase, the input texts of each operation are encrypted before being sent to the cloud. Therefore, the input texts are transmitted in ciphertext and documents in the cloud are also stored in ciphertext. Secondly, the algorithms are assumed to be secure and the keys only appear on the clients. So, these keys could only be leaked by the collaborative users or the clients, which are also assumed to be trusted. Finally, data keys are generated in a random way by each user, and LightCore uses each byte of the keystreams generated by data keys only once. Any text is encrypted by the keystreams generated specially for it. So, the curious servers cannot infer the contents by analyzing the difference of two encrypted texts.

In order to maintain the functionalities of the cloud servers, we only encrypt the input texts of each operation but not the position of the operation. The position of each operation and the length of the operated text are disclosed to the cloud servers, which may leak a certain amount of indirect sensitive information (including the number of lines, the distribution of paragraphs and other structure information). We assume these data can only be accessed by the authorized clients and the cloud servers, and they are not disclosed to external attackers because the SSL protocol is adopted. In this case, the related data are limited to the cloud and the clients. Additionally, the attributes attached to the text segments, including font, color, author identity and keystream_info, might also be used to infer the underlying information of the documents. For example, a text segment with the “bold” attribute may disclose its importance; a text segment with the “list” attribute may also leak some related information. However, some of the attributes can be easily protected by encrypting them at the client in LightCore, because the cloud servers are not required to process all of them (for example, font, size, color, etc.). Therefore, encrypting these attributes will not impede the basic functionalities of the cloud servers. Protecting these attributes will be included in our future work. The attributes author and keystream_info, however, cannot be encrypted, because they are related to the basic functionalities of the cloud servers.

Another threat from the cloud is to infer sensitive data by collecting and analyzing data access patterns from careful observations on the inputs of clients. Even if all data are transmitted and stored in an encrypted format, traffic analysis techniques can reveal sensitive information about the documents. For example, analysis of the frequency of modifications on a certain position could reveal certain properties of the data; the access history to multiple documents could disclose the access habits of a user and the relationship of the documents; access to the same document, or even the same line, from multiple users could suggest a common interest. We do not resolve the attacks resulting from such traffic analysis and access pattern analysis. However, in a highly interactive collaborative editing system, modifications are submitted and sent about every 500 ms, which generates a large amount of information flow in the editing phase. Therefore, it is very costly for curious cloud servers to collect and analyze traffic information and access patterns, which do not directly leak sensitive information.

7 Performance Evaluation

The basic requirement of LightCore is that the highly interactive client can view the modifications of other clients in real time. During the editing process, each operation is processed by the sending client, the cloud server and the receiving clients. The whole process shall be very short and the latency of transmission shall be low. Therefore, the added cryptographic computation shall not noticeably affect the real-time behavior. Users also expect to join an editing session quickly, so the time of decrypting the document should be short when new clients join. In this section, we present the results of the experiments to show that LightCore achieves high performance, and we also suggest the suitable keystream policies for different use scenarios.

We installed the cloud server on an Ubuntu machine with a 3.4 GHz Intel(R) Core(TM) i7-2600 and 4 GB of RAM. We evaluated the performance of the crypto module on the Firefox browser, version 34.0.5. The algorithms of stream cipher or block cipher (CTR mode) are configurable in LightCore. In our experiments, we test the performance of the crypto module at the client that implements the stream cipher RC4 or the CTR mode of block cipher AES.

7.1 Real Time

We evaluate the performance at the client of both the original collaborative system without the crypto module and LightCore with the crypto module. At the client side, the input texts of each insertion are encrypted before being sent to the cloud servers. When receiving an operation, the client will first decrypt it, transform it based on the operations in the local queue and apply it to its local copy. In order to evaluate the time of these main procedures, we run an experiment in which 20 collaborators from different clients quickly input texts in the same document concurrently. The time of transforming an operation (called the queuing time), the time of applying an operation to the local copy (called the applying time) and the transmission time of each operation are given in Table 2. In fact, the main difference lies in the added encryption/decryption process, and the other processes are not affected. A decryption time of less than 500 ms has no influence on real-time behavior. In order to test the concurrent capability, in the experiment, we set a client C that is only responsible for receiving operations from the 20 clients. The total time from the start to applying the 20 operations to the local copy at the client C is also given in Table 2. We can see that the total time of LightCore, 1236 ms, is only 27 ms longer than that of the original system, a difference that is imperceptible to users.

7.2 Decryption Time of Pre-edit Phase

In LightCore, the cloud servers maintain the freshest well-organized document, by modifying the stored document into a joint version based on OT. When joining to edit, the clients download the freshest document, decrypt it, and then apply (or present) it on the editor. For resource-limited clients with the decryption function, a short time to join (i.e., the pre-edit phase) is expected. In this part, we evaluate the performance of the decryption functionality implemented by the CTR mode of block cipher (AES) and stream cipher (RC4). Unlike stateless block cipher, the performance of stateful stream cipher varies when decrypting documents generated from different insertions and deletions. For the resource-limited clients, the size of the buffer to cache bytes of keystreams is limited to less than 1 KB in LightCore. Without enough buffer to cache bytes of keystreams for later use, insertion operations in random positions require re-initialization and the generation of obsoleted bytes of keystreams. Deletion operations may also cause obsoleted bytes of keystreams.

For the two types of operations, we implement two stream cipher key update rules in LightCore, that is, the client updates the key of stream cipher if (a) the generated bytes of the keystream come to a predetermined length or (b) the user moves to another line previous to the current line to insert some texts. We conduct two experiments: one evaluates the performance when decrypting documents generated by random insertions, and the other measures the performance when decrypting documents generated by random deletions.

Experiment of Random Insertions. In this experiment, documents of 1 MB are first generated by inserting texts in random positions of the documents. We suppose that users generally edit the document within the field of view, so we limit the distance between the positions of two consecutive insertions to less than 50 lines. Although texts of small size may be inserted in random positions when users are modifying the document, we suppose that users input texts continuously after a certain position, which is in accordance with the habit of regular editing. In the experiment, 256 characters are continuously inserted after a certain position. We define insertions at the positions previous to the line of the current cursor as forward insertions. As forward insertions break the consistency of positions between texts and their used bytes of keystreams, different proportions of forward insertions may have different influence on the performance of the decryption function implemented by stream cipher. Therefore, we measure the decryption time of documents generated by random insertions with different proportions of forward insertions from 0 to 50 percent.

Firstly, the performance of decrypting a document with stream cipher without key update rules is given in Fig. 3(a). The results show that the decryption time increases with the proportion of forward insertions. When the proportion of forward insertions reaches 15 percent, the decryption time exceeds 8 s, which may be intolerable for users. We then evaluate the performance of LightCore implemented by stream cipher with different predetermined lengths of keystreams from 0.5 KB to 32 KB. The results in Fig. 3(b) show that the time of decrypting the documents with stream cipher is less than 500 ms. Although the decryption time of AES CTR stays at about 300 ms, the performance of stream cipher with the predetermined length of 16 KB or 32 KB is better than that of AES CTR. The main differences lie in the number of initializations and that of obsoleted bytes of keystreams, which are given in Table 3 of Appendix A.

Experiment of Random Deletions. In this experiment, we generate documents of 1 MB by sequentially appending 2 MB of text and subsequently deleting 1 MB of text in random positions. The documents are encrypted with AES CTR or with stream cipher of different predetermined lengths of keystreams from 0.5 KB to 32 KB. We suppose that the length of each deleted text may influence the decryption time of stream cipher. For example, a long text segment \(T=<c_{1},c_{2},...,c_{n}>\) is encrypted with the bytes in the positions from \(1^{st}\) to \(n^{th}\) of one keystream, and the predetermined length of the keystream is n. If each deleted text is longer than n, T may be deleted entirely and this keystream does not have to be generated when decrypting the document. If each deleted text is short, the character \(c_n\) may not be deleted. In order to decrypt \(c_n\), the obsoleted bytes from \(1^{st}\) to \((n-1)^{th}\) of this keystream must be generated. We test the decryption time of documents with different lengths of deleted text from 32 to 8192 characters. The results in Fig. 4 show that the decryption time of stream cipher RC4 decreases linearly with the length of each deleted text. Although the decryption time of AES CTR stays at about 300 ms, RC4 is more efficient when the predetermined length of keystreams is longer than 16 KB. When the deleted text is longer than 2048 characters, the value of the 8 KB curve is approximately equal to that of the 16 KB curve. When the deleted text reaches 4096 characters, the values of the 8 KB curve (219 ms) and the 16 KB curve (229 ms) are smaller than that of the 32 KB curve. In fact, adopting a predetermined length of keystreams longer than 32 KB does not improve the performance of stream cipher. The main difference lies in the number of initializations and that of obsoleted bytes of keystreams, which are given in Table 4 of Appendix A. If the predetermined length is larger than 32 KB, the additional obsoleted bytes of keystreams bring more overhead even though the number of initializations decreases.

Suggestions for Keystream Policies. The results of the experiments above illustrate that the efficiency of LightCore varies as the keystream policy changes. Therefore, users can choose different keystream policies based on their requirements in different use scenarios. If a stable decryption time is expected, adopting the CTR mode of block cipher may be more suitable. If a shorter decryption time is expected, especially for documents of large size, a faster stream cipher with suitable key update rules is suggested. If a large amount of text is input sequentially after the position of each forward insertion, stream cipher can perform efficiently by re-initializing the stream cipher with a new data key and setting a large value for the predetermined length. However, when a document is corrected by frequently inserting small texts (e.g., 2 to 10 characters each time), we suggest combining stream cipher with block cipher CTR mode in LightCore, that is, the clients (a) encrypt data with stream cipher when users are sequentially appending text at some positions, and (b) encrypt data with block cipher CTR mode when forward insertions happen. In this case, frequent initialization of the stream cipher is avoided and does not result in heavy overhead. Note that block cipher CTR mode and stream cipher can be used simultaneously in LightCore.

Efficient key update rules should balance the overhead of initialization and that of generating obsoleted bytes of keystreams. A small predetermined length of each keystream requires frequent initialization, and a larger one causes lots of obsoleted bytes of keystreams. When a document is not modified by frequently deleting, that is, the proportion of the total deleted text in the full document is small, the predetermined length of keystreams can be set at a bigger value. Otherwise, we should set an appropriate value for the predetermined length based on the overhead of initialization and keystream generation. For example, the value 16 KB or 32 KB of the predetermined length for RC4 can bring more efficient performance.

8 Conclusion

We propose LightCore, a collaborative editing cloud solution for sensitive data against honest-but-curious servers. LightCore provides real-time online editing functions for a group of concurrent users, as existing systems do (e.g., Google Docs, Office Online and Cloud9). We adopt stream cipher or the CTR mode of block cipher to encrypt (and decrypt) the contents of the document within clients, while only the authorized users share the keys. Therefore, the servers cannot read the contents, but the byte-by-byte encryption feature enables the cloud servers to process user operations in the same way as existing collaborative editing cloud systems. In order to optimize the decryption time in the pre-edit phase under certain use scenarios, we analyze different keystream policies, including the method to generate keystreams and the key update rules. Experiments on the prototype system show that LightCore provides efficient online collaborative editing services for resource-limited clients.

We plan to extend LightCore in the following aspects. Firstly, in the current design and implementation, only the texts of the document are protected, so the servers may infer a limited amount of information about the document from the formats. We will analyze the possibility of encrypting more attributes (e.g., font, color and list) while the servers’ processing is not impeded or disabled. Secondly, for a given document, the client could dynamically switch among different keystream policies in an intelligent way, according to the editing operations that have happened and a prediction of future ones. Finally, the characters of more languages will be supported in LightCore.

Notes

1. Other block cipher modes of operation, such as OFB and CFB, also generate the keystream in bytes, but are less efficient.

2. Other typical attributes include font, color, size, etc.

Acknowledgement

This work was partially supported by National 973 Program under award No. 2014CB340603, National 863 Program under award No. 2013AA01A214, and Strategy Pilot Project of Chinese Academy of Sciences under award No. XDA06010702.

A Appendix

Table 3 shows the size of the obsoleted keystream bytes that must be generated and the number of initializations when decrypting a 1 MB document produced by random insertions. The first row of Table 3 gives the rate of forward insertions (insertions of text at a position before the line of the current cursor), from 0 to 0.5 (50 percent). The first column gives the predetermined length of keystreams. The column titled “Obsol” gives the size of the obsoleted keystream bytes in KB, and the column titled “Init” gives the number of initializations. For a given predetermined length, both the number of initializations and the size of the obsoleted bytes increase with the rate of forward insertions. The results show that many initializations and a large number of obsoleted keystream bytes result if the key used to initialize the stream cipher is never updated during the whole encryption process (one seed). When the two principles of the stream cipher key update rules are adopted in LightCore, a longer predetermined length of keystreams may cause fewer initializations and more obsoleted keystream bytes.

Table 4 shows the size of the obsoleted keystream bytes that must be generated and the number of initializations when decrypting a 1 MB document produced by sequentially appending text up to 2 MB and then deleting text at random positions down to 1 MB. The first row of Table 4 gives the length of each deleted text. The first column gives the predetermined length of keystreams. The column titled “Obsol” gives the size of the obsoleted keystream bytes in KB, and the column titled “Init” gives the number of initializations. The column titled “random” means that the length of each deleted text is randomly determined. For a given predetermined length, both the number of initializations and the size of the obsoleted bytes decrease as the length of each deleted text grows. With a smaller predetermined length of keystreams (e.g., 0.5 KB or 1 KB), initialization may bring more overhead than the obsoleted keystream bytes. The results show that a larger predetermined length of keystreams in the stream cipher key update rules causes fewer initializations and more obsoleted keystream bytes.
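To make the two quantities reported in Tables 3 and 4 concrete, the following is a minimal sketch of how initializations and obsoleted keystream bytes could be counted when a document is decrypted in document order with a stateful stream cipher. It is a simplified cost model, not the LightCore implementation: the `Segment` record, its fields and the function `decryption_cost` are hypothetical names, and the model assumes that the cipher must be re-initialized whenever the key changes or an earlier keystream position is needed.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Segment:
    """One encrypted text segment (hypothetical record, for illustration only)."""
    key_id: int   # identifies the key (seed) that initialized the stream cipher
    offset: int   # index of the first keystream byte used by this segment
    length: int   # number of keystream bytes used by this segment


def decryption_cost(segments: Iterable[Segment]) -> Tuple[int, int]:
    """Return (initializations, obsoleted_bytes) for decrypting in document order.

    Assumed model: a stateful stream cipher can only move forward, so reaching
    an earlier offset, or switching keys, requires a fresh initialization.
    Keystream bytes that are generated but not used are counted as obsoleted.
    """
    inits = 0
    obsoleted = 0
    current_key: Optional[int] = None
    position = 0  # next keystream byte the cipher would output
    for seg in segments:
        if seg.key_id != current_key or seg.offset < position:
            inits += 1
            current_key = seg.key_id
            position = 0
        obsoleted += seg.offset - position   # bytes skipped to reach the segment
        position = seg.offset + seg.length   # bytes consumed by the segment
    return inits, obsoleted


# Text appended first (keystream bytes 0..19), then a forward insertion placed
# before it in the document but encrypted later (keystream bytes 20..24).
one_seed = [Segment(0, 20, 5), Segment(0, 0, 20)]
print(decryption_cost(one_seed))     # (2, 20)

# The same edit when the forward insertion starts a fresh key at offset 0.
key_updated = [Segment(1, 0, 5), Segment(0, 0, 20)]
print(decryption_cost(key_updated))  # (2, 0)
```

In this toy model a forward insertion under a single seed costs both an extra initialization and the skipped keystream bytes, while updating the key removes the skipped bytes but not the initialization. A deletion leaves a gap in the keystream that has to be skipped, which corresponds to the obsoleted bytes counted in Table 4.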

Copyright information

© 2015 Springer International Publishing Switzerland

Cite this paper

Jiang, W., Lin, J., Wang, Z., Li, H., Wang, L. (2015). LightCore: Lightweight Collaborative Editing Cloud Services for Sensitive Data. In: Malkin, T., Kolesnikov, V., Lewko, A., Polychronakis, M. (eds) Applied Cryptography and Network Security. ACNS 2015. Lecture Notes in Computer Science, vol 9092. Springer, Cham. https://doi.org/10.1007/978-3-319-28166-7_11

DOI: https://doi.org/10.1007/978-3-319-28166-7_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-28165-0

Online ISBN: 978-3-319-28166-7

eBook Packages: Computer Science (R0)