Abstract

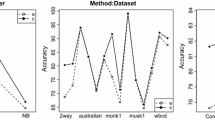

Feature selection is a pre-processing step in classification that selects a small set of important features to improve classification performance and efficiency. Mutual information is popular in feature selection because it can detect non-linear relationships between features. However, existing mutual information approaches consider only two-way interactions between features. In addition, most methods calculate mutual information with a counting approach, which may lead to inaccurate results. This paper proposes a filter feature selection algorithm based on particle swarm optimization (PSO), named PSOMIE, which employs a novel fitness function that uses nearest neighbor mutual information estimation (NNE) to measure the quality of a feature set. PSOMIE is compared with using all features and with two traditional feature selection approaches. The experimental results show that the mutual information estimation successfully guides PSO to search for a small number of features while maintaining or improving classification performance relative to using all features and to the traditional feature selection methods. In addition, PSOMIE shows strong consistency between training and test results, which may help to avoid overfitting.
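To make the idea of a nearest-neighbor fitness function concrete, the following is a minimal sketch and not the paper's actual PSOMIE fitness. It assumes a class-conditional k-nearest-neighbor estimator in the spirit of Kraskov et al., with the Chebyshev distance and `k = 3` chosen purely for illustration. The names `knn_mutual_information`, `fitness`, and the toy data are hypothetical. A binary mask stands in for a decoded PSO particle position, and the whole selected subset is scored jointly against the class label rather than feature by feature.

```python
import random

EULER_GAMMA = 0.5772156649015329


def digamma_int(n):
    """Digamma at a positive integer: psi(n) = -gamma + sum_{j=1}^{n-1} 1/j."""
    return -EULER_GAMMA + sum(1.0 / j for j in range(1, n))


def chebyshev(a, b):
    """Maximum-norm distance between two equal-length vectors."""
    return max(abs(p - q) for p, q in zip(a, b))


def knn_mutual_information(rows, labels, k=3):
    """Estimate I(features; class) from k-nearest-neighbor distances.

    Assumption: every class has at least two samples.
    """
    n = len(rows)
    class_sizes = {c: labels.count(c) for c in set(labels)}
    total = 0.0
    for i in range(n):
        n_c = class_sizes[labels[i]]
        if n_c < 2:
            raise ValueError("each class needs at least two samples")
        k_i = min(k, n_c - 1)
        dists = [(chebyshev(rows[i], rows[j]), labels[j])
                 for j in range(n) if j != i]
        # Distance to the k-th nearest neighbor within the same class.
        radius = sorted(d for d, c in dists if c == labels[i])[k_i - 1]
        # Number of points of any class that fall within that radius.
        m_i = sum(1 for d, _ in dists if d <= radius)
        total += digamma_int(k_i) - digamma_int(n_c) - digamma_int(m_i)
    # Clip at zero: the raw estimate can be slightly negative.
    return max(0.0, digamma_int(n) + total / n)


def fitness(mask, X, y, k=3):
    """Score a feature subset (binary mask) by its estimated MI with y."""
    selected = [j for j, bit in enumerate(mask) if bit]
    if not selected:
        return 0.0  # an empty subset carries no information
    rows = [[row[j] for j in selected] for row in X]
    return knn_mutual_information(rows, y, k)


# Toy data (hypothetical): feature 0 separates the classes, feature 1 is noise.
rng = random.Random(0)
y = [i % 2 for i in range(40)]
X = [[10 * label + rng.random(), rng.random()] for label in y]

score_informative = fitness([1, 0], X, y)
score_noise = fitness([0, 1], X, y)
print(score_informative, score_noise)
```

In a PSO loop, each particle's position would be decoded into such a mask and `fitness` maximized. How PSOMIE encodes particles and whether its fitness includes further terms is not stated in the abstract, so those parts are left out here.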

References

Guyon, I., Elisseeff, A.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003)

Liu, H., Motoda, H., Setiono, R., Zhao, Z.: Feature selection: an ever evolving frontier in data mining. FSDM 10, 4–13 (2010)

Whitney, A.W.: A direct method of nonparametric measurement selection. IEEE Trans. Comput. 100(9), 1100–1103 (1971)

Marill, T., Green, D.M.: On the effectiveness of receptors in recognition systems. IEEE Trans. Inf. Theory 9(1), 11–17 (1963)

Nag, K., Pal, N.R.: A multiobjective genetic programming-based ensemble for simultaneous feature selection and classification. IEEE Trans. Cybern. 46(2), 499–510 (2015)

Lin, F., Liang, D., Yeh, C.C., Huang, J.C.: Novel feature selection methods to financial distress prediction. Expert Syst. Appl. 41(5), 2472–2483 (2014)

Chuang, L.Y., Chang, H.W., Tu, C.J., Yang, C.H.: Improved binary PSO for feature selection using gene expression data. Comput. Biol. Chem. 32(1), 29–38 (2008)

Hassan, R., Cohanim, B., De Weck, O., Venter, G.: A comparison of particle swarm optimization and the genetic algorithm. In: Proceedings of the 1st AIAA Multidisciplinary Design Optimization Specialist Conference, pp. 1–13 (2005)

Dash, M., Liu, H.: Feature selection for classification. Intell. Data Anal. 1(3), 131–156 (1997)

Kohavi, R., John, G.H.: Wrappers for feature subset selection. Artif. Intell. 97(1), 273–324 (1997)

Duda, R.O., Hart, P.E., Stork, D.G.: Pattern Classification. Wiley, New York (2012)

Dash, M., Liu, H., Motoda, H.: Consistency based feature selection. In: Terano, T., Liu, H., Chen, A.L.P. (eds.) PAKDD 2000. LNCS (LNAI), vol. 1805, pp. 98–109. Springer, Heidelberg (2000)

Hall, M.: Correlation-based feature selection for discrete and numeric class machinelearning. In: Proceedings of 7th International Conference on Machine Learning, Stanford University (2000)

Kononenko, I.: On biases in estimating multi-valued attributes. In: IJCAI. vol. 95, pp. 1034–1040. Citeseer (1995)

Walters-Williams, J., Li, Y.: Estimation of mutual information: a survey. In: Wen, P., Li, Y., Polkowski, L., Yao, Y., Tsumoto, S., Wang, G. (eds.) RSKT 2009. LNCS, vol. 5589, pp. 389–396. Springer, Heidelberg (2009)

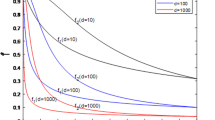

Kraskov, A., Stögbauer, H., Grassberger, P.: Estimating mutual information. Phys. Rev. E 69(6), 066138 (2004)

Kennedy, J., Eberhart, R., et al.: Particle swarm optimization. In: Proceedings of IEEE International Conference on Neural Networks, vol. 4, pp. 1942–1948. Perth, Australia (1995)

Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106(4), 620 (1957)

Sturges, H.A.: The choice of a class interval. J. Am. Stat. Assoc. 21(153), 65–66 (1926)

Parzen, E.: On estimation of a probability density function and mode. Ann. Math. Stat. 33(3), 1065–1076 (1962)

Doquire, G., Verleysen, M.: A performance evaluation of mutual information estimators for multivariate feature selection. In: Carmona, P.L., Salvado Sánchez, J., Fred, A.L.N. (eds.) ICPRAM 2012. AISC, vol. 204, pp. 51–63. Springer, Heidelberg (2013)

Stearns, S.D.: On selecting features for pattern classifiers. In: Proceedings of the 3rd International Conference on Pattern Recognition (ICPR 1976), pp. 71–75. Coronado, CA (1976)

Zhu, Z., Ong, Y.S., Dash, M.: Wrapper-filter feature selection algorithm using a memetic framework. IEEE Trans. Syst. Man Cybern. B Cybern. 37(1), 70–76 (2007)

Neshatian, K., Zhang, M.: Genetic programming for feature subset ranking in binary classification problems. In: Vanneschi, L., Gustafson, S., Moraglio, A., Falco, I., Ebner, M. (eds.) EuroGP 2009. LNCS, vol. 5481, pp. 121–132. Springer, Heidelberg (2009)

Hunt, R., Neshatian, K., Zhang, M.: A genetic programming approach to hyper-heuristic feature selection. In: Bui, L.T., Ong, Y.S., Hoai, N.X., Ishibuchi, H., Suganthan, P.N. (eds.) SEAL 2012. LNCS, vol. 7673, pp. 320–330. Springer, Heidelberg (2012)

Sousa, P., Cortez, P., Vaz, R., Rocha, M., Rio, M.: Email spam detection: a symbiotic feature selection approach fostered by evolutionary computation. Int. J. Inf. Technol. Decis. Making 12(04), 863–884 (2013)

Bhowan, U., McCloskey, D.: Genetic programming for feature selection and question-answer ranking in IBM watson. In: Machado, P., Heywood, M.I., McDermott, J., Castelli, M., García-Sánchez, P., Burelli, P., Risi, S., Sim, K. (eds.) EuroGP 2015. LNCS, vol. 9025, pp. 153–166. Springer, Heidelberg (2015)

Xue, B., Zhang, M., Browne, W.N.: Particle swarm optimisation for feature selection in classification: novel initialisation and updating mechanisms. Appl. Soft. Comput. 18, 261–276 (2014)

Cervante, L., Xue, B., Zhang, M., Shang, L.: Binary particle swarm optimisation for feature selection: a filter based approach. In: 2012 IEEE Congress on Evolutionary Computation (CEC), pp. 1–8. IEEE (2012)

Butler-Yeoman, T., Xue, B., Zhang, M.: Particle swarm optimisation for feature selection: a hybrid filter-wrapper approach. In: 2015 IEEE Congress on Evolutionary Computation (CEC), pp. 2428–2435. IEEE (2015)

Xue, B., Zhang, M., Browne, W., Yao, X.: A survey on evolutionary computation approaches to feature selection. IEEE Trans. Evol. Comput. published online on 30 November 2015. doi:10.1109/TEVC.2015.2504420

Asuncion, A., Newman, D.: UCI machine learning repository (2007)

Van Den Bergh, F.: An analysis of particle swarm optimizers. PhD thesis, University of Pretoria (2006)

Zhai, Y., Ong, Y.S., Tsang, I.W.: The emerging big dimensionality. IEEE Comput. Intell. Mag. 9(3), 14–26 (2014)

Eberhart, R.C., Shi, Y.: Comparing inertia weights and constriction factors in particle swarm optimization. In: Proceedings of the 2000 Congress on Evolutionary Computation, vol. 1, pp. 84–88. IEEE (2000)

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Nguyen, H.B., Xue, B., Andreae, P. (2016). Mutual Information Estimation for Filter Based Feature Selection Using Particle Swarm Optimization. In: Squillero, G., Burelli, P. (eds) Applications of Evolutionary Computation. EvoApplications 2016. Lecture Notes in Computer Science, vol 9597. Springer, Cham. https://doi.org/10.1007/978-3-319-31204-0_46

DOI: https://doi.org/10.1007/978-3-319-31204-0_46

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-31203-3

Online ISBN: 978-3-319-31204-0

eBook Packages: Computer Science (R0)