Abstract

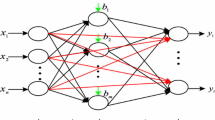

Owing to their simplicity and universal approximation capability, single-layer feedforward networks (SLFNs) are widely used in classification and regression problems. This paper presents a new constructive algorithm, OLS-PSO, based on the Orthogonal Least Squares (OLS) method and the Particle Swarm Optimization (PSO) algorithm. Instead of evaluating the orthogonal components of each neuron as the conventional OLS method does, a new recursive formulation is derived. Based on this new evaluation of each neuron's contribution, the PSO algorithm is then used to search for the optimal parameters of the new neuron in a continuous space. The proposed algorithm is tested on several practical regression problems and compared with other constructive algorithms. The results show that the proposed OLS-PSO algorithm can achieve a compact SLFN with good generalization ability.
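The abstract only outlines the method, so the following is a minimal sketch of a generic constructive OLS + PSO loop, not the authors' algorithm. Each candidate neuron's output vector is orthogonalized against the neurons already chosen (giving q), and its contribution is scored by the standard OLS error reduction (q·r)²/(q·q), where r is the current residual; PSO then searches the neuron's continuous weights and bias for the largest reduction. Several choices here are assumptions not taken from the paper: tanh hidden neurons, a constant output-bias regressor, modified Gram-Schmidt in place of the paper's recursive formulation, an inertia-weight global-best PSO with hypothetical settings (20 particles, 50 iterations, inertia 0.7, c1 = c2 = 1.5, search box [-5, 5]), and a fixed neuron budget as the stopping rule. All function names are illustrative.

```python
import math
import random


def hidden_output(params, X):
    """Outputs of one tanh hidden neuron on all samples; params = input weights + [bias]."""
    *w, b = params
    return [math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b) for x in X]


def orthogonalize(h, basis):
    """Modified Gram-Schmidt: strip from h its components along the (mutually orthogonal) basis."""
    q = list(h)
    for p in basis:
        coef = sum(a * c for a, c in zip(q, p)) / sum(c * c for c in p)
        q = [a - coef * c for a, c in zip(q, p)]
    return q


def contribution(params, X, residual, basis):
    """OLS error reduction (q.r)^2 / (q.q) from adding the candidate neuron, plus its q."""
    q = orthogonalize(hidden_output(params, X), basis)
    qq = sum(v * v for v in q)
    if qq < 1e-10:  # candidate is numerically dependent on the neurons already chosen
        return 0.0, q
    qr = sum(a * r for a, r in zip(q, residual))
    return qr * qr / qq, q


def pso_maximize(score, dim, rng, n_particles=20, iters=50,
                 inertia=0.7, c1=1.5, c2=1.5, bound=5.0):
    """Global-best PSO with inertia weight (hypothetical settings); positions clamped to [-bound, bound]."""
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [score(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = max(-bound, min(bound, pos[i][d] + vel[i][d]))
            val = score(pos[i])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val > gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def build_slfn(X, y, max_neurons=5, seed=0):
    """Grow an SLFN one neuron at a time; return neuron parameters and training SSE history."""
    rng = random.Random(seed)
    n, dim = len(X), len(X[0]) + 1          # dim = input weights + bias
    basis = [[1.0] * n]                      # constant regressor acts as the output bias
    mean_y = sum(y) / n
    residual = [v - mean_y for v in y]       # y minus its projection on the constant regressor
    neurons, sse = [], [sum(r * r for r in residual)]
    for _ in range(max_neurons):
        best, _ = pso_maximize(lambda p: contribution(p, X, residual, basis)[0], dim, rng)
        gain, q = contribution(best, X, residual, basis)
        if gain <= 0.0:                      # no candidate reduces the error any further
            break
        coef = sum(a * r for a, r in zip(q, residual)) / sum(v * v for v in q)
        residual = [r - coef * a for r, a in zip(residual, q)]
        basis.append(q)
        neurons.append(best)
        sse.append(sum(r * r for r in residual))
    return neurons, sse


if __name__ == "__main__":
    X = [[i / 20.0] for i in range(-20, 21)]   # 41 points on [-1, 1]
    y = [math.sin(3.0 * x[0]) for x in X]
    neurons, sse = build_slfn(X, y, max_neurons=4)
    print(len(neurons), [round(s, 4) for s in sse])
```

Because each accepted neuron removes the non-negative amount (q·r)²/(q·q) from the squared residual, the training SSE history is non-increasing. Recovering explicit output weights would additionally require back-substitution through the Gram-Schmidt coefficients, which this sketch omits.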

This work was partially supported by the National Science Centre, Cracow, Poland under Grant No. 2013/11/B/ST6/01337.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Wu, X., Rozycki, P., Wilamowski, B.M. (2016). Single Layer Feedforward Networks Construction Based on Orthogonal Least Square and Particle Swarm Optimization. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L., Zurada, J. (eds) Artificial Intelligence and Soft Computing. ICAISC 2016. Lecture Notes in Computer Science, vol 9692. Springer, Cham. https://doi.org/10.1007/978-3-319-39378-0_15

DOI: https://doi.org/10.1007/978-3-319-39378-0_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39377-3

Online ISBN: 978-3-319-39378-0

eBook Packages: Computer Science, Computer Science (R0)