Abstract

Evaluating the quality of academic content on social media is a critical research topic for the further development of scholarly collaboration on the social web. This pilot study used question/answer pairs from the Library and Information Science (LIS) domain on ResearchGate to examine how scholars assess the quality of academic answers on the social web. The study aims to: (1) identify the aspects scholars use to assess academic answer quality and distinguish the objective aspects from the subjective ones; and (2) further verify the existence of subjective aspects in academic answer quality judgments by measuring the agreement between different evaluators. By synthesizing the evaluation criteria for academic content quality reported in related work, the authors identified nine aspects of quality evaluation and mapped the participants’ explanations of their quality judgments onto this framework. We found that aspects related to the content of the academic text and to the users’ beliefs and preferences were the two most commonly used, which indicates that quality judgments are influenced not only by the text itself but also by the evaluator’s beliefs and preferences. We also found that agreement between different evaluators’ judgments is very low compared with the agreement levels reported for non-academic text.

1 Introduction

As Web 2.0 technology develops, user-generated content (UGC) on social networking sites has gradually become a main source of Internet information. How to quickly identify high-quality UGC that satisfies information consumers’ needs has therefore become a critical research topic. Social Q&A platforms are popular social networking sites that enable users to ask questions and receive many answers. Over the past decade, these platforms have grown steadily in popularity, and an increasing number of individuals use them to fulfil their information needs. The answers, however, come from many different users and vary widely in quality, and judging their quality depends heavily on the requirements of the information consumers who make these judgments. Ferschke [1] clearly described “high quality information must be fit for use by information consumers in a particular context, meet a set of predefined specifications or requirements, and meet or exceed user expectations. Thus, high quality information provides a particular high value to the end user.”

Meanwhile, academic social Q&A platforms, such as ResearchGate, are changing traditional channels of academic exchange and provide a new, informal way for researchers to interact and communicate with one another [2]. On social Q&A platforms, anyone can provide academic resources without peer review. As a result, these platforms hold a mass of resources whose quality ranges from high to low. This makes it hard for scholars to find high-quality resources, which may reduce their willingness to join social Q&A platforms to acquire and share academic information [4]. Information quality on academic social networking sites is therefore another issue that needs to be addressed. We argue that evaluating answer quality on academic social Q&A platforms is more critical than, and different from, evaluating answer quality on generic social Q&A platforms [3]. First, academic questions and answers are more specialized and require considerable domain knowledge to understand. Moreover, there may be no single fixed high-quality answer, especially for discussion-seeking questions. Second, the quality of academic answers may have new facets that require novel evaluation criteria. Third, most information consumers on academic social Q&A platforms are scholars with varying levels of expertise, unlike the information consumers who use generic Q&A sites [3].

In this study, we chose ResearchGate’s Q&A platform as our academic social media platform. ResearchGate is one of the best-known academic social networking sites (ASNSs) and supports various scholarly activities, including asking and answering questions. We used question/answer pairs from the Library and Information Science (LIS) domain to examine how scholars assess the quality of academic answers on the social web. This study had two main motivations. The first was to examine the aspects evaluators use to assess academic answer quality and then, based on the definitions of the information quality evaluation aspects from previous studies, to identify which aspects are objective and which are subjective. The second was to measure the agreement between different evaluators when judging academic answer quality, in order to further verify the existence of subjective aspects in quality assessment. Moreover, by comparing the agreement level for academic content with the agreement levels reported for evaluations of other kinds of content, this study can gauge the reliability of evaluators’ judgments of academic content quality. The two research questions are:

-

What aspects do evaluators use to assess the quality of academic answers? Which aspects are objective, with judgments based on the information itself, and which are subjective, with context-sensitive judgments?

-

To what extent do evaluators agree when judging the quality of academic answers?

2 Related Works

Academic social media has changed the way scholars obtain academic resources [5]. There is a rapidly growing body of work on academic social networking sites (ASNSs), covering scholarly information exchange [6], the trustworthiness of scholarly information on ASNSs [5, 7], motivations for joining ASNSs [8], and scholarship assessment through ASNSs [9].

Although little existing work has focused on the quality of academic answers, many studies have examined answer quality on generic social Q&A platforms. Some prior research identified answer quality criteria, such as content, cognitive, utility, source, user relationship, and socioemotional criteria, in order to evaluate answer quality automatically [10–12]. Some examined the relationship between identified answer quality features and peer-judged quality [13–15]. Others compared answer quality across different Q&A platforms [16, 17].

For academic content, judging the quality of research articles was the earliest research topic. Early work held that “high quality journals are more likely to publish high quality research papers” [18], so it focused on identifying high-quality journals, for example by analyzing journals’ citations, impact factors, and reputation [19, 20]. However, other researchers argued that judging an article’s quality with journal-level evaluation methods is biased [21]. Subsequent studies therefore relied directly on papers’ external features, such as the authors’ reputation and the papers’ citations [22, 23]. Later work examined papers’ content and judgment context to explore paper quality. Calvert and Zengzhi present the criteria most accepted by journal editors for evaluating research articles, including new information or data, acceptable research design, level of scholarship, advancement of knowledge, theoretical soundness, and appropriate methodology and analysis [24]. Clyde (2004) examined how evaluators’ specialist knowledge influences their quality judgments of research publications [43].

To date, there has been little work on the quality of academic content on social media. Li et al. studied the effect of web-captured features and human-coded features on peer-judged academic answer quality on the ResearchGate Q&A platform [3]. Several previous works have examined the relevance and credibility of academic information on social media [27–33], and some have argued that “the relevance and credibility of information are aspects of the concept of information quality” [25, 26]. Studies of relevance judgments for academic content focused on identifying the criteria used to evaluate the relevance of academic resources on the web [27–30]. For example, Park interviewed 11 graduate students who evaluated bibliographic citations for their master’s thesis research proposals and identified three major categories of factors affecting relevance assessments: internal context, external context, and problem context [28]. The trustworthiness of academic information on social media is another related topic; these studies report which criteria influence users’ judgments of the trustworthiness of academic resources [25, 31–33]. For instance, Watson examined the relevance and reliability criteria that 37 students applied to information for their research assignments or projects, and classified the identified criteria into two major categories: pre-access criteria and post-access criteria [25].

In summary, there is no clear framework for evaluating academic answer quality on ASNSs. This paper therefore reviews the answer quality assessment criteria in existing work and examines how users assess academic answer quality.

3 Research Design

3.1 Study Platform: ResearchGate Q&A

ResearchGate (RG for short) is one of the best-known ASNSs for scholars; it had more than 5 million users by the end of 2014. Its mission is to connect scholars and make it easy for them to share and access scientific outputs, knowledge, and expertise. On RG, scholars can share their publications; connect with other scholars; view, download, and cite other scholars’ publications; and ask academic questions and receive answers.

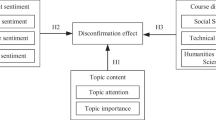

In this paper, we used RG’s Q&A platform to investigate academic answer quality assessment. As Fig. 1 shown, scholar posts a question, and other researchers can view or follow this answer, provide answers to the question, or use “up vote” or “down vote” to rate the answers according to their criteria.

A question/answering interface on RG (https://www.researchgate.net/post/How_can_I_decide_number_of_neuron_in_hidden_layer_in_ANN_for_probable_best_performance_in_classification_problem)

3.2 Dataset: Question/Answer Sets

In this study, we chose questions in the “Library Information Services” category on RG Q&A, because the authors are LIS researchers who know the domain. The same question/answer pairs have also been used as a dataset in other studies [3, 6]. The dataset contains 38 questions with 413 corresponding answers. Following the classification of Choi, Kitzie, and Shah [35], we focused on discussion-seeking questions, because they are more complex than information-seeking questions and may require more quality assessment criteria. We therefore narrowed the dataset down to 17 discussion-seeking questions with 188 answers.

We further cleaned the dataset by removing answers that do not provide information answering the question. For example, some answers only express the asker’s gratitude to an answerer; in others, the answerer states that they have the same question, asks another related question, or answers a different question put forward by other answerers. After removing these kinds of answers, we had 15 questions with 157 answers.

3.3 Research Method

Data Collection.

We recruited 15 LIS scholars with adequate domain knowledge to understand and assess the content of the answers. These participants are labeled E1–E15 in this paper. To obtain data for calculating judgment agreement, we randomly assigned the 157 question/answer pairs (QAPs) from the 15 questions to five groups, which yielded three groups of 31 QAPs and two groups of 32 QAPs. Each group was rated by three participants, so the quality of every QAP was judged by three participants. During the experiment, participants rated answer quality on an 11-point Likert scale (0 to 10), with 0 being the lowest quality and 10 the highest. After finishing their judgments, participants were asked to explain the criteria they had used to assess answer quality.
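The random split described above can be sketched in Python as follows. This is a minimal illustration, not the authors’ procedure: the helper `assign_qaps`, the placeholder IDs `QAP001` to `QAP157`, the fixed seed, and the pairing of consecutive evaluators with groups are all hypothetical assumptions. The paper states only that QAPs were randomly assigned into groups of 31, 31, 31, 32, and 32, each judged by three participants.

```python
import random

def assign_qaps(qap_ids, group_sizes, evaluators, raters_per_group, seed=None):
    """Randomly split QAPs into groups; give each group its own raters.

    Hypothetical helper: the paper does not publish its assignment procedure.
    """
    if sum(group_sizes) != len(qap_ids):
        raise ValueError("group sizes must add up to the number of QAPs")
    if len(evaluators) != raters_per_group * len(group_sizes):
        raise ValueError("each group needs its own set of raters")
    rng = random.Random(seed)
    shuffled = list(qap_ids)
    rng.shuffle(shuffled)  # random order, so group membership is random
    groups, start = [], 0
    for i, size in enumerate(group_sizes):
        # Hypothetical pairing: consecutive evaluators share one group.
        raters = evaluators[i * raters_per_group:(i + 1) * raters_per_group]
        groups.append({"qaps": shuffled[start:start + size], "raters": raters})
        start += size
    return groups

# Placeholder IDs for the 157 QAPs and the 15 evaluators E1-E15.
qaps = [f"QAP{i:03d}" for i in range(1, 158)]
evaluators = [f"E{i}" for i in range(1, 16)]
groups = assign_qaps(qaps, [31, 31, 31, 32, 32], evaluators, raters_per_group=3, seed=2016)
for g in groups:
    print(len(g["qaps"]), g["raters"])
```

Because each QAP lands in exactly one group and each group has three distinct raters, every QAP receives exactly three judgments, which is the structure Fleiss’ kappa expects.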

Data Analysis.

Drawing on the related work presented in Sect. 2 [11–41], we summarized the criteria for assessing academic answer quality into the following nine groups:

-

Criteria related to the content of academic text (C1): this group comprises objective criteria that examine characteristics of the text content. This group contains 23 criteria: recency [24, 27–32, 40], information type [25, 27, 28, 30, 32], theoretical soundness [24], appropriate methodology [24], appropriate analysis [24], readability [11, 14, 28–30, 32, 40, 41], balanced and objective point of view [25, 27, 30–32], the views of other scholars [32], scholarliness [25, 27, 31, 32, 41], scope [25, 28, 29, 31], depth [11, 14, 17, 25, 27–29, 40, 41], references [27, 30–32, 41], objective accuracy [2, 14, 17, 25, 27, 29, 40, 41], appropriate quantity [27, 28], examples [27, 30], discipline [30], good logic [32, 41], inclusion of contact information [32], non-repetitiveness [11, 28], originality [11], consistency [40], and writing style [15, 25, 27, 41].

-

Criteria related to the sources of text (C2): this group comprises criteria relating to the source of the text rather than to its content. It contains the following four criteria: clear information about who is posting the information and his/her goals [32], authorship [11, 14, 15, 24, 25, 27, 28, 30, 32, 33, 41], source status [25, 28, 29, 31–33], and source type, such as a paper, a report, a website, a forum, or PowerPoint slides [28, 30].

-

Criteria related to the users’ beliefs and preferences (C3): in quality evaluation, different users perceive text quality differently [34]. Some criteria are subjective and are judged differently by individual users. One possible reason is that different users have different beliefs and preferences. Several criteria related to users’ beliefs and preferences were identified: topics satisfying the information needs [11, 27, 28, 30], subjective accuracy/validity/reasonableness/believability [14, 16, 29, 40, 41], interest/affectiveness [27–30, 41], and utility [11, 29, 41].

-

Criteria related to the users’ previous experience and background (C4): another reason for differing quality judgments is users’ previous experience and background. In other words, users’ domain knowledge influences the evaluation of some criteria, namely understandability [27, 29, 30, 40, 41], known source [29, 30, 32], language [30], content novelty [11, 29, 30, 40, 41], value of a citation [28], and source novelty [29].

-

Criteria related to the user’s situation (C5): different research situations may also affect users’ quality judgments. This group contains the following five criteria: time constraints [29, 30], relationship with author [29], information consumers’ purpose [28], stage of research [28, 30], and personal availability [28, 29].

-

Criteria related to the text as a physical entity (C6): this group includes objective criteria describing external characteristics of the text: obtainability [28–30], cost [16, 25, 29, 32], length [14, 15, 30, 41], and quickness [15, 41].

-

Criteria related to other information and sources (C7): this group comprises criteria that rely on other information or sources to confirm the quality of the text. The three identified criteria are consensus within the field [29, 32], external verification/tangibility/corroboration [17, 25, 29, 32, 41], and being cited by other authors in other documents [32].

-

Criteria related to the texts’ layouts and structure (C8): criteria in this group concern how well the text is formatted and organized. We identified four criteria: having lists/diagrams/statistics/pictures [27, 32], fewer advertisements [32], working links [25, 32], and structural clues (topic sentences, first paragraph, and headings) [25].

-

Criteria related to the social environment (C9): this group covers the social and emotional value expressed in the text, including users’ endorsement [14], politeness [11], socioemotional value [40, 41], review [15], answerer’s attitude/effort/experience [41], and humor [41].

We then classified the participants’ responses into the nine groups of criteria above using directed content analysis [36]. The authors discussed and confirmed the classification results until they reached agreement.
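Once responses are coded, they can be tallied into per-group participant counts of the kind reported in Table 1. The sketch below assumes a hypothetical input format (a mapping from participant ID to the group codes the coders assigned) and uses invented toy data; the coding itself was done manually by the authors, and the helper `participants_per_group` is illustrative only.

```python
from collections import Counter

# The nine criteria groups summarized in Sect. 3.3.
CRITERIA_GROUPS = {
    "C1": "content of academic text",
    "C2": "sources of text",
    "C3": "users' beliefs and preferences",
    "C4": "users' previous experience and background",
    "C5": "user's situation",
    "C6": "text as a physical entity",
    "C7": "other information and sources",
    "C8": "texts' layouts and structure",
    "C9": "social environment",
}

def participants_per_group(coded_responses):
    """Count how many distinct participants were coded into each group.

    coded_responses maps a participant ID to the list of group codes
    assigned to that participant's free-text explanation (assumed format).
    """
    counts = Counter()
    for participant, codes in coded_responses.items():
        unknown = set(codes) - set(CRITERIA_GROUPS)
        if unknown:
            raise ValueError(f"{participant}: unknown codes {sorted(unknown)}")
        counts.update(set(codes))  # a participant counts once per group
    return {group: counts.get(group, 0) for group in CRITERIA_GROUPS}

# Hypothetical coder output for three participants (not the study's data).
example = {"P1": ["C1", "C3", "C1"], "P2": ["C1"], "P3": ["C6", "C9"]}
print(participants_per_group(example))
```

Deduplicating codes per participant matters here: a participant who names two C1 criteria still counts once toward C1, matching the “n of 15 participants” style of reporting.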

We calculated the inter-rater agreement among the three sets of assessments of the QAPs using Fleiss’ kappa [39]. Fleiss’ kappa extends Cohen’s kappa [38] to more than two raters: it is a statistical measure of the reliability of agreement among raters who assign categorical ratings, here quality ratings of the answers [42]. Fleiss’ kappa values below 0 represent “poor agreement”, 0.01 to 0.20 “slight agreement”, 0.21 to 0.40 “fair agreement”, 0.41 to 0.60 “moderate agreement”, 0.61 to 0.80 “substantial agreement”, and 0.81 to 1.00 “almost perfect agreement” [37].
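As a worked illustration of the statistic, the following sketch implements the standard Fleiss’ kappa formula [42] in plain Python: the per-item share of agreeing rater pairs, its mean (observed agreement), the chance agreement computed from the overall category proportions, and kappa = (observed − chance) / (1 − chance). The function name and toy ratings are hypothetical, not the study’s data or code. Treating the 0–10 scale as 11 nominal categories is an assumption here; under unweighted kappa, ratings of 7 and 8 then count as complete disagreement, which may depress agreement on a fine-grained ordinal scale.

```python
from collections import Counter

def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for items that are each rated by the same number of raters.

    ratings: one list of category labels per item, e.g. [[7, 8, 7], ...]
    categories: every possible category, e.g. range(11) for a 0-10 scale
    """
    categories = list(categories)
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    if any(x not in categories for item in ratings for x in item):
        raise ValueError("rating outside the category set")
    counts = [Counter(item) for item in ratings]  # n_ij per item
    # P_i: share of agreeing rater pairs on item i
    p_items = [
        sum(c[j] * (c[j] - 1) for j in categories) / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_obs = sum(p_items) / n_items
    # p_j: share of all ratings in category j; chance agreement = sum of p_j^2
    total = n_items * n_raters
    p_exp = sum((sum(c[j] for c in counts) / total) ** 2 for j in categories)
    if p_exp == 1:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_obs - p_exp) / (1 - p_exp)

# Hypothetical 0-10 ratings by three evaluators on four QAPs (not study data).
example = [[7, 7, 8], [3, 3, 3], [9, 6, 7], [5, 5, 2]]
print(round(fleiss_kappa(example, range(11)), 3))
```

For a production analysis, an established implementation (for example the one in statsmodels) would be preferable to this hand-rolled version.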

4 Results Analysis

4.1 Analysis of the Evaluation Criteria

The reasons the 15 participants gave for their answer quality judgments were mapped to the nine criteria groups. As Table 1 shows, criteria related to the user’s situation (C5) and to other information and sources (C7) were not used by any participant. One possible explanation is that the participants rated the answers at our request rather than while genuinely seeking such information, so they could not clearly imagine a research situation or relate an answer’s quality to other information or sources.

Table 1 also shows that criteria related to the content of academic text (C1) were the most commonly used to evaluate quality: 14 of the 15 participants mentioned this group. Among these 14 participants, six used the objective accuracy criterion (E4, E6, E9, E11, E12 and E13); for example, E12 mentioned that he needs objective evidence to support his quality evaluation. Completeness was also used by six of the 14 participants (E2, E3, E7, E9, E11 and E12); for example, E7 said that an answer should provide enough arguments. Three of the 14 participants used the logic criterion (E5, E7 and E14); for example, E7 said that an answer should express its ideas logically. Readability and references were each used by two participants (E7 and E11 for readability; E3 and E9 for references), and theoretical basis (E2) and examples (E3) were each used by one participant.

The second most commonly selected group relates to the users’ beliefs and preferences (C3), used by 10 of the 15 participants. Seven of these 10 participants used the relevance criterion (E3, E5, E6, E7, E12, E13 and E14), meaning that an answer should provide information relevant to the question and meet the user’s information need; for example, E14 said that an answer should focus on the problem. E2 and E4 mentioned reasonability, and E11 mentioned utility.

Criteria related to the text as a physical entity (C6) were used by three participants (E5, E6 and E8), who mentioned accessibility, length, and time constraints. Authorship, a criterion related to the sources of text (C2), was mentioned by two participants (E1 and E6). Two participants (E2 and E12) mentioned criteria related to the users’ previous experience and background (C4), namely creativity and understandability. E4 and E8 said they value the socioemotional aspects of an answer; for example, an answer should be expressed in a friendly, honest, and serious manner. Criteria related to the texts’ layouts and structure (C8) were mentioned by only one participant (E15).

Based on Table 1, we consider the criteria associated with the user, namely users’ beliefs and preferences, previous experience and background, and situation, to be subjective quality evaluation criteria: users with different personalities may perceive quality differently. The other criteria are objective: their judgment does not depend on the individual evaluator and is based on the content of the information being evaluated.
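To make the objective/subjective split concrete, the sketch below encodes which of the nine groups are treated as subjective (C3, C4, and C5, the user-related groups) and takes the union of participants who used them. The participant IDs are those reported in Sect. 4.1 for C3 (relevance, reasonability, utility) and C4 (creativity, understandability); no participant used C5. The variable names are illustrative only.

```python
# Groups treated as subjective in Sect. 4.1: the user-related groups.
GROUP_IS_SUBJECTIVE = {
    "C1": False, "C2": False, "C3": True, "C4": True, "C5": True,
    "C6": False, "C7": False, "C8": False, "C9": False,
}

# Participants reported in Sect. 4.1 for each subjective group.
PARTICIPANTS_BY_GROUP = {
    "C3": {"E3", "E5", "E6", "E7", "E12", "E13", "E14",  # relevance
           "E2", "E4",                                    # reasonability
           "E11"},                                        # utility
    "C4": {"E2", "E12"},  # creativity, understandability
    "C5": set(),          # not mentioned by any participant
}
ALL_PARTICIPANTS = {f"E{i}" for i in range(1, 16)}

subjective_groups = [g for g, flag in GROUP_IS_SUBJECTIVE.items() if flag]
used_subjective = set().union(*(PARTICIPANTS_BY_GROUP[g] for g in subjective_groups))
others = sorted(ALL_PARTICIPANTS - used_subjective, key=lambda e: int(e[1:]))
print(f"{len(used_subjective)} of {len(ALL_PARTICIPANTS)} used a subjective group")
print("no subjective group coded:", others)
```

The union yields 10 participants, matching the “10 out of 15” figure used in Sect. 4.2, because both C4 participants (E2 and E12) also used C3 criteria.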

4.2 Agreements on Quality Judgments

As shown in Table 2, according to Landis and Koch’s interpretation [37], agreement among the three participants who rated each QAP was low: the Fleiss’ kappa values for all five groups of the dataset were well below 0.2, i.e., at most slight agreement. This suggests that academic answer quality is a highly subjective concept. As Sect. 4.1 shows, different participants used different criteria to judge quality, and 10 of the 15 participants used criteria related to their own preferences, background, and situations, which likely explains why agreement on academic answer quality remains so low. This result is broadly consistent with a study of quality judgments for Wikipedia articles, which reported agreement values between 0.06 and 0.16 [34], and it suggests that judging the quality of academic text is probably more difficult than judging that of Wikipedia articles.
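The Landis and Koch bands listed in Sect. 3.3 can be expressed as a small lookup function; applying it to the Wikipedia agreement range reported in [34] places both ends in the slight band. The function name is hypothetical, and the handling of values between the published band limits (for example 0.00 or 0.205) is an assumption: here each band runs up to and including its upper limit.

```python
def landis_koch_label(kappa):
    """Map a kappa value to the Landis and Koch agreement bands (assumed edges)."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:  # assumption: 0.00 counts as slight
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"

# Agreement range reported for Wikipedia article quality judgments [34].
for value in (0.06, 0.16):
    print(value, landis_koch_label(value))
```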

5 Discussion and Conclusions

In this study, we used LIS-domain question/answer sets from ResearchGate to identify the criteria evaluators use to judge academic answer quality, and we mapped the participants’ responses onto our quality judgment framework. We found that criteria related to the content of the academic text and to the users’ beliefs and preferences were the two most commonly used groups. Based on definitions from previous work, we also distinguished subjective criteria, which depend on the individual user, from objective criteria, which relate only to the content of the text. This investigation indicates that quality judgments are influenced not only by the text itself but also by users’ beliefs and preferences, so it is hard to achieve high agreement on text quality across different users’ judgments. This is especially true for academic text, which is more complicated than generic text. It suggests that academic content quality cannot be evaluated simply from a few users’ judgments, because such judgments do not reach an acceptable level of agreement.

The major contribution of this study is showing that the evaluation of academic answer quality involves both objective and subjective criteria. Objective criteria include readability, depth, and recency, and automatic methods can be used to evaluate quality on these criteria. Subjective criteria, by contrast, should be judged according to individual users’ backgrounds and requirements. For example, the more expertise a user has in a particular domain, the more likely that user is to prefer specialized content; conversely, a user with less domain knowledge is more likely to prefer easily readable domain-related text.
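As one illustration of an automatic method for an objective criterion, the sketch below computes the Flesch Reading Ease score, a standard readability formula (206.835 − 1.015 × words per sentence − 84.6 × syllables per word; higher scores mean easier text). This formula was not used in the study; it is swapped in here only as an example, and the vowel-group syllable counter and regex-based sentence splitting are rough approximations assumed for brevity.

```python
import re

def count_syllables(word):
    """Rough English syllable count: vowel groups, minus a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_reading_ease(text):
    """Flesch Reading Ease with approximate sentence and syllable counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))

# Hypothetical answer snippets, not taken from the dataset.
simple = "The answer is clear. It cites two papers."
complex_ = ("Methodological heterogeneity substantially complicates "
            "comparative evaluation of institutional repositories.")
print(round(flesch_reading_ease(simple), 1), round(flesch_reading_ease(complex_), 1))
```

A score like this captures only surface readability; whether a given reader finds an answer understandable remains a subjective (C4) judgment.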

This is a pilot study of academic answer quality evaluation. A limitation of our study is that we examined only one kind of academic text: ResearchGate answers in the LIS domain. We plan to extend our study to other academic sources in different domains. More specifically, we hope to determine which criteria within the nine criteria groups we identified are most important to scholars when judging academic content quality. This would help establish a general quality evaluation framework for academic information on social media that combines subjective and objective criteria.

References

Ferschke, O.: The quality of content in open online collaboration platforms. Dissertation (2014)

Thelwall, M., Kousha, K.: Academia.edu: social network or academic network? J. Assoc. Inf. Sci. Technol. 65(4), 721–731 (2014)

Li, L., He, D., Jeng, W., Goodwin, S., Zhang, C.: Answer quality characteristics and prediction on an academic Q&A site: a case study on ResearchGate. In: Proceedings of the 24th International Conference on World Wide Web Companion, pp. 1453–1458. International World Wide Web Conferences Steering Committee, May 2015

Cheng, R., Vassileva, J.: Design and evaluation of an adaptive incentive mechanism for sustained educational online communities. User Model. User-Adap. Interact. 16(3–4), 321–348 (2006)

Tenopir, C., Levine, K., Allard, S., Christian, L., Volentine, R., Boehm, R., Watkinson, A.: Trustworthiness and authority of scholarly information in a digital age: results of an international questionnaire. J. Assoc. Inf. Sci. Technol. (2015)

Jeng, W., DesAutels, S., He, D., Li, L.: Information exchange on an academic social networking site: a multi-discipline comparison on ResearchGate Q&A (2015). arXiv preprint arXiv:1511.03597

Watkinson, A., Nicholas, D., Thornley, C., Herman, E., Jamali, H.R., Volentine, R., Tenopir, C.: Changes in the digital scholarly environment and issues of trust: an exploratory, qualitative analysis. Inf. Process. Manag. 45, 375–381 (2015)

Jeng, W., He, D., Jiang, J.: User participation in an academic social networking service: a survey of open group users on Mendeley. J. Assoc. Inf. Sci. Technol. 66(5), 890–904 (2015)

Thelwall, M., Kousha, K.: ResearchGate: disseminating, communicating, and measuring scholarship? J. Assoc. Inf. Sci. Technol. 66(5), 876–889 (2015)

Agichtein, E., Castillo, C., Donato, D., Gionis, A., Mishne, G.: Finding high-quality content in social media. In: Proceedings of the 2008 International Conference on Web Search and Data Mining, pp. 183–194. ACM, February 2008

Shah, C., Pomerantz, J.: Evaluating and predicting answer quality in community QA. In: Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 411–418. ACM, July 2010

Liu, Y., Bian, J., Agichtein, E.: Predicting information seeker satisfaction in community question answering. In: Proceedings of the 31st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 483–490. ACM, July 2008

Blooma, M.J., Goh, D.H.L., Chua, A.Y.K.: Predictors of high-quality answers. Online Inf. Rev. 36(3), 383–400 (2012)

John, B.M., Chua, A.Y.K., Goh, D.H.L.: What makes a high-quality user-generated answer? Internet Comput. IEEE 15(1), 66–71 (2011)

Fu, H., Wu, S., Oh, S.: Evaluating answer quality across knowledge domains: using textual and non-textual features in social Q&A. In: Proceedings of the 78th ASIS&T Annual Meeting: Information Science with Impact: Research in and for the Community, p. 88. American Society for Information Science, November 2015

Harper, F.M., Raban, D., Rafaeli, S., Konstan, J.A.: Predictors of answer quality in online Q&A sites. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 865–874, April 2008

Fichman, P.: A comparative assessment of answer quality on four question answering sites. J. Inf. Sci. 37(5), 476–486 (2011)

Lee, K.P., Schotland, M., Bacchetti, P., Bero, L.A.: Association of journal quality indicators with methodological quality of clinical research articles. J. Am. Med. Assoc. 287(21), 2805–2808 (2002)

Blake, V.L.P.: The perceived prestige of professional journals, 1995: a replication of the Kohl-Davis study. Educ. Inf. 14, 157–179 (1996)

Opthof, T.: Sense and nonsense about the impact factor. Cardiovasc. Res. 33, 1–7 (1997)

Seglen, P.O.: Why the impact factor of journals should not be used for evaluating research. Br. Med. J. 314, 498–502 (1997)

Ugolini, D., Parodi, S., Santi, L.: Analysis of publication quality in a cancer research institute. Scientometrics 38(2), 265–274 (1997)

Mukherjee, B.: Evaluating e-contents beyond impact factor-a pilot study selected open access journals in library and information science. J. Electron. Publishing 10(2) (2007)

Calvert, P.J., Zengzhi, S.: Quality versus quantity: contradictions in LIS journal publishing in China. Libr. Manag. 22(4/5), 205–211 (2001)

Watson, C.: An exploratory study of secondary students’ judgments of the relevance and reliability of information. J. Assoc. Inf. Sci. Technol. 65(7), 1385–1408 (2014)

Rieh, S.Y., Danielson, D.R.: Credibility: a multidisciplinary framework. Annu. Rev. Inf. Sci. Technol. 41(1), 307–364 (2007)

Cool, C., Belkin, N., Frieder, O., Kantor, P.: Characteristics of text affecting relevance judgments. In: National Online Meeting. Learned Information (EUROPE) LTD, vol. 14, p. 77, August 1993

Park, T.K.: The nature of relevance in information retrieval: an empirical study. Libr. Q. 63, 318–351 (1993)

Barry, C.L.: User-defined relevance criteria: an exploratory study. JASIS 45(3), 149–159 (1994)

Vakkari, P., Hakala, N.: Changes in relevance criteria and problem stages in task performance. J. Documentation 56(5), 540–562 (2000)

Currie, L., Devlin, F., Emde, J., Graves, K.: Undergraduate search strategies and evaluation criteria: searching for credible sources. New Libr. World 111(3/4), 113–124 (2010)

Liu, Z.: Perceptions of credibility of scholarly information on the web. Inf. Process. Manag. 40(6), 1027–1038 (2004)

Rieh, S.Y.: Judgment of information quality and cognitive authority in the Web. J. Am. Soc. Inf. Sci. Technol. 53(2), 145–161 (2002)

Arazy, O., Kopak, R.: On the measurability of information quality. J. Am. Soc. Inf. Sci. Technol. 62(1), 89–99 (2011)

Choi, E., Kitzie, V., Shah, C.: Developing a typology of online Q&A models and recommending the right model for each question type. Proc. Am. Soc. Inf. Sci. Technol. 49(1), 1–4 (2012)

Krippendorff, K.: Content Analysis: An Introduction to Its Methodology. Sage, Thousand Oaks (2012)

Landis, J.R., Koch, G.G.: The measurement of observer agreement for categorical data. Biometrics 33, 159–174 (1977)

Cohen, J.: A coefficient of agreement for nominal scales. Educ. Psychol. Measur. 20, 37–46 (1960)

Fleiss, J.L., Cohen, J.: The equivalence of weighted Kappa and the intraclass correlation coefficient as measures of reliability. Educ. Psychol. Measur. 33, 613–619 (1973)

Chua, A.Y., Banerjee, S.: So fast so good: an analysis of answer quality and answer speed in community question-answering sites. J. Am. Soc. Inf. Sci. Technol. 64(10), 2058–2068 (2013)

Kim, S., Oh, S.: Users’ relevance criteria for evaluating answers in a social Q&A site. J. Am. Soc. Inf. Sci. Technol. 60(4), 716–727 (2009)

Fleiss, J.L.: Measuring nominal scale agreement among many raters. Psychol. Bull. 76(5), 378 (1971)

Clyde, L.A.: Evaluating the quality of research publications: a pilot study of school librarianship. J. Am. Soc. Inf. Sci. Technol. 55(13), 1119–1130 (2004)

Acknowledgments

This work is supported by the Major Projects of National Social Science Fund (No. 13&ZD174), the National Social Science Fund Project (No. 14BTQ033).

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Li, L., He, D., Zhang, C. (2016). Evaluating Academic Answer Quality: A Pilot Study on ResearchGate Q&A. In: Nah, FH., Tan, CH. (eds) HCI in Business, Government, and Organizations: eCommerce and Innovation. HCIBGO 2016. Lecture Notes in Computer Science(), vol 9751. Springer, Cham. https://doi.org/10.1007/978-3-319-39396-4_6

DOI: https://doi.org/10.1007/978-3-319-39396-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39395-7

Online ISBN: 978-3-319-39396-4

eBook Packages: Computer Science, Computer Science (R0)