Abstract

Memories of experience are influenced by a peak-end effect [13]. Memories are modified to emphasize the final portions of an experience, and the peak positive, or negative, portion of that experience. We examine peak-end effects on judged Technical Quality (TQ) of online video. In two studies, sequences of different types of video disruption were varied so as to manipulate the peak-end effect of the experiences. The first experiment demonstrated an end effect, plus a possible peak effect involving negative, but not positive, experience. The second study manipulated payment conditions so that some sessions were structured as requiring payment to watch the video. The second study also distinguished between a peak effect and a possible sequence effect. Evidence was again found for an end effect, with a secondary effect of sequence, but no evidence was found for a peak effect independent of sequencing.

1 Introduction

Memories of experience are disproportionately influenced by the end of the experience (the end effect), and by the most intense (positive or negative) portion of the experience (the peak effect), a finding first demonstrated by Fredrickson and Kahneman [1] and confirmed in a number of subsequent studies [2]. Since measures of well-being, such as user experience and satisfaction, are based on memories of experience, rather than on experience per se, design to improve satisfaction needs to consider how to improve peak and end experiences.

Quality of Experience (QoE) has become a key concern and competitive differentiating feature in the telecommunications industry [3]. In this paper we assess whether or not the peak-end effect applies to cumulative QoE after viewing sequences of Over-The-Top (OTT) videos. If the peak-end effect applies for services such as online video, then the service provider can better estimate customer experience based on peak-end effects in the Technical Quality (TQ) of viewed video.

In the following section we briefly review past work on QoE and its measurement. We discuss how QoE is related to frustration and satisfaction, and how it accumulates over time to form memories of experience, and attitudes towards the application or service being experienced. We then report on two studies that tested peak-end effects with online video. The first study is briefly summarized here and is reported more fully elsewhere [4], while the second study is reported here for the first time. Both studies used ethics protocols that were approved by the University of Toronto IRB.

2 Background

Quality of Experience (QoE) for the customer is a key differentiator of business success between service providers [5]. Overall QoE of video streaming is influenced by criteria such as video quality, audio quality, speed of service access, and frequency of service interruption. These elements of TQ are particularly important, and may be the only aspects of QoE that are under the control of service providers [6].

The Mean Opinion Score (MOS) [7] is commonly used to measure perceived video TQ. MOS provides a rating of subjective service quality, typically using the following scale anchors: 1 (bad); 2 (poor); 3 (fair); 4 (good); 5 (excellent). Other methodologies have been proposed for measuring TQ, including Session MOS [8], physiological measures such as skin conductance [9], behavior-based measures such as cancellation rates [10], and user acceptability of service quality [11].
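As a minimal illustration of how a MOS is obtained from the five-point scale above, the following Python sketch averages a set of ratings. The function name `mean_opinion_score` and the sample ratings are hypothetical and are not taken from the studies reported here.

```python
from statistics import mean

# Scale anchors quoted in the text: 1 (bad) through 5 (excellent).
MOS_ANCHORS = {1: "bad", 2: "poor", 3: "fair", 4: "good", 5: "excellent"}


def mean_opinion_score(ratings):
    """Return the arithmetic mean of ratings given on the 1-5 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if r not in MOS_ANCHORS:
            raise ValueError(f"rating {r!r} is not on the 1-5 scale")
    return mean(ratings)


# Hypothetical ratings from five viewers of the same clip.
example_ratings = [4, 5, 3, 4, 4]
print(mean_opinion_score(example_ratings))
```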

A considerable amount of research has shown a divergence between actual experiences and memories of those experiences [1, 12–14]. Although the memory of emotional experience is not objectively accurate, it is a powerful predictor of behavior [13].

Chignell et al. [15] carried out a subjective QoE study where videos with different types of impairments and disruptions were compared under different assumed payment conditions. As expected, the free service was most preferred, but no significant interaction was found between payment type and disruption type. Thus, the relative ordering, in terms of TQ, of different types of disruption such as freezings or failure to play all the way through, did not change between the free and paid condition.

The first study summarized below examined the applicability of the peak-end effect to TQ ratings of online video. The second study sought to replicate the peak-end effect found in the first study and to determine whether or not the peak-end effect is influenced by the type of payment (free vs. paid).

3 Study 1

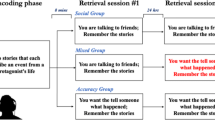

Twenty-four participants (10 female, 14 male) each saw three blocks of eight videos (24 videos in total). Videos were modified to have four different levels of disruption. Some of the videos played without any impairment or disruption (I0), some videos had either one (I1) or three (I3) impairments (incidents of freezing) but still played all the way through to the end, and some played only part way through before stopping and failing to complete (NR1). Videos that had no more than one instance of freezing (I0 and I1) were labeled as “good”, while the I3 and NR1 videos were labeled as “bad”. After each video was shown, a TQ rating was made using the following question and response options. “Your evaluation of the technical quality in the video is: Excellent (5), Good (4), Fair (3), Poor (2), Bad (1).”

Videos consisted of short film trailers and popular YouTube videos. After each block of eight videos participants were asked two further questions. The first question was “How would you rate your level of satisfaction with the viewing experience provided by the brand?” (Response options were 0-Completely Satisfied, 1-Mostly Satisfied, 2-Somewhat Satisfied, 3-Neither Satisfied nor Dissatisfied, 4-Somewhat Dissatisfied, 5-Mostly Dissatisfied, 6-Completely Dissatisfied). The second question was “How would you rate your level of frustration with the viewing experience provided by the brand?” (Response options were on an 11-point scale ranging between 0-Not at all Frustrated and 10-Extremely Frustrated). After viewing the three sequences of four videos within the first half of each block, participants were also asked: “How would you rate your overall experience after viewing the last four videos?” (Response options for this question were: 5-Excellent, 4-Good, 3-Fair, 2-Poor, 1-Bad, 0-Terrible).

Each group of four videos consisted of one of six sequences of “good” (1) and “bad” (2) videos. Each participant saw each of the sequences once, and the ordering of the sequences was counterbalanced between participants. As can be seen in Fig. 1, there seems to be some evidence for an end effect, with the two sequences ending in good videos (2111 and 2211) having a higher overall rating than the sequences ending with two bad videos (1122) or one bad video (1112). In terms of the peak effect, the sequence with two bad videos in the middle of the sequence has relatively low quality of experience, even though the sequence ended with a good video. However, the sequence with two good videos in the middle did not have relatively higher quality of experience. In summary, there is evidence for an end effect on judged QoE involving both a bad ending and a good ending to the sequence. However, for the peak effect, the evidence in Fig. 1 suggests that it may only apply with respect to bad videos.

Figure 2 shows mean frustration and satisfaction ratings with respect to sequences of four videos. As expected with the end effect, the sequence with three good videos and one bad video had more associated frustration, and lower satisfaction when the bad video was at the end of the sequence than it did when the bad video was at the beginning of the sequence. Similarly, for sequences for two bad, and two good videos, frustration was lower, and satisfaction was higher when the two good videos were at the end of the sequence.

Regression analysis with frustration as the criterion was carried out, with the list of predictors including: the number of bad videos in the block; the maximum number of back-to-back bad videos in the block; the number of the first two videos (in the block) that were bad; and whether or not the last video (or two last videos) was/were bad. The analysis suggested a significant peak effect (r = 0.372, p < .05) with the two predictors in the derived model being the last video, and the number of bad videos in the second half of the block (the beta coefficients were 0.303 and 0.22, respectively). Additionally, ratings of satisfaction were significantly higher (t [61.7] = 2.68, p < 0.01), with a mean increase of 0.84 on the 7-point satisfaction rating scale, when the last video in the block (of eight videos) was good rather than bad.
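The sequence predictors listed above can be derived mechanically from the good/bad coding of a block. The following Python sketch shows one way to do so, assuming a block is represented as a string of the codes 1 (good) and 2 (bad); the function name, the dictionary keys, and the example block are hypothetical and do not reproduce the analysis scripts used in the study.

```python
from itertools import groupby

GOOD, BAD = "1", "2"  # coding used for the Study 1 sequences


def block_features(block):
    """Compute sequence predictors for a block coded as a string of 1s and 2s."""
    if not block or set(block) - {GOOD, BAD}:
        raise ValueError("block must be a non-empty string of '1' and '2'")
    # Lengths of every run of consecutive bad videos.
    bad_runs = [len(list(run)) for code, run in groupby(block) if code == BAD]
    half = len(block) // 2
    return {
        "n_bad": block.count(BAD),
        "max_bad_run": max(bad_runs, default=0),
        "n_bad_first_two": block[:2].count(BAD),
        "last_bad": block[-1] == BAD,
        "last_two_bad": block[-2:] == BAD * 2,
        "n_bad_second_half": block[half:].count(BAD),
    }


# Hypothetical eight-video block formed from sequences 2111 and 1122.
print(block_features("21111122"))
```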

4 Study 2

Study 2 considered two issues that had not been addressed in Study 1. First, Study 1 did not distinguish between peaks (i.e., videos with exceptionally high or low TQ) and sequences, i.e., sequences of two or more videos that were relatively “bad”, or “good”. Second, Study 1 did not consider the issue of payment. Would peak-end effects also be found, and would an effect of payment type interact with the peak-end effect?

Study 2 had two classes of disruption (impairments, and non-retainability failures where the video failed to play through to the end) and nine types of disruption in total (see Table 1, where the first row represents undisrupted video, the next four rows are impairments and the last four rows are failures). The nine disruption types were randomly assigned to each video. In addition, where the disruptions occurred and how long the freezing impairments lasted were randomized within the constraints shown in Table 2.

The study was broken down into 8 blocks of videos, with each block lasting approximately five minutes. There were two experimental groups – one that could terminate videos and one group that could not terminate the videos. Each video was approximately 1–1.5 min long, and once the participant had watched 4.5 min of video within a block, the video they were watching at that time would be the last video they would watch for that block. For users who could terminate, another video would come up if they decided to stop watching a video, and they would keep watching videos until they reached the 4.5 min mark. This method ensured that the total viewing time for each block would be approximately the same for all participants.
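The stopping rule described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the experiment software: the function name and the example watch times are hypothetical, and each entry is assumed to be the time actually spent on a video (shorter than the clip length when a participant in the terminating group stops it early).

```python
BLOCK_LIMIT_MIN = 4.5  # viewing-time threshold stated in the text


def videos_shown_in_block(watch_times_min, limit=BLOCK_LIMIT_MIN):
    """Return (number of videos shown, total minutes watched) for one block.

    watch_times_min lists, in order, how long each queued video is watched.
    """
    elapsed = 0.0
    for count, watched in enumerate(watch_times_min, start=1):
        elapsed += watched
        if elapsed >= limit:
            # The video in progress when the limit is reached is the last one.
            return count, elapsed
    # Queue exhausted before the limit was reached.
    return len(watch_times_min), elapsed


# Participant who cannot terminate: every clip plays for its full length.
print(videos_shown_in_block([1.5, 1.0, 1.25, 1.5]))
# Participant who terminates two clips early and therefore sees more videos.
print(videos_shown_in_block([0.5, 1.5, 0.25, 1.0, 1.5, 1.0]))
```

In both hypothetical cases the block ends shortly after the 4.5 min mark, which is how the rule keeps total viewing time per block roughly constant across the two groups.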

The users had 14 categories of videos to choose from (e.g., sports, nature, entertainment, etc.) and chose 8 categories of interest (one category per block). Four of the blocks were “free” and the other four were “paid”. The payment type was manipulated using e-tokens that represented the amount of money participants would receive at the end of the study. At the beginning of the study, participants were told they had 30 tokens that would reflect their payment for the study and that each token was equal to one dollar. They were also told that some blocks would require payment of 2.5 tokens (but were not told how many paid blocks there were), which meant that money would be deducted from their eventual compensation. According to the design of the study, each participant would pay 2.5 tokens for each of the four paid blocks, resulting in a loss of 10 tokens (i.e., $10). The participants therefore ended up with 20 tokens at the end of the study, resulting in a payment of $20. After the experiment the participants were told that everyone received $20 and that the tokens were just used to give the illusion that the participants had to pay, or give up money, for the paid videos.
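The token arithmetic can be checked with a few lines of Python. The variable names are hypothetical; the quantities are those stated in the paragraph above.

```python
START_TOKENS = 30          # tokens each participant was told they held
COST_PER_PAID_BLOCK = 2.5  # tokens deducted for each paid block
PAID_BLOCKS = 4            # number of paid blocks in the design
DOLLARS_PER_TOKEN = 1      # each token was equal to one dollar

tokens_spent = COST_PER_PAID_BLOCK * PAID_BLOCKS
tokens_left = START_TOKENS - tokens_spent
payment_dollars = tokens_left * DOLLARS_PER_TOKEN
print(tokens_spent, tokens_left, payment_dollars)
```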

The sequences of video disruptions varied randomly within blocks. Some blocks tended to have more failures at the beginning or end, and some blocks included the most severe failure (R3), allowing an assessment of the peak-end effect to be made by comparing blocks with appropriately contrasting sequences of disruption types.

Each video clip contained audio, and the content was not graphic in nature (i.e. no horror, gore, violence, nudity or emotionally disturbing content). Videos consisted of short film trailers and a selection of popular YouTube videos. Disruptions to the video included freezing during play back and videos that did not play all the way to the end (sometimes with periods of freezing prior to the failure to complete).

Participants provided ratings of TQ, Quality of Experience (QoE), and Acceptability after each video. At the end of each block, participants also provided ratings of overall TQ (Block TQ), and Overall Experience (Block OE), with respect to the block of videos just viewed. Response options were Yes or No for acceptability, and for all other ratings the following six point scale of response options was used: 6-Excellent, 5-Good, 4-Fair, 3-Poor, 2-Bad, 1-Terrible. After viewing all 8 blocks of videos, participants also filled in a short post-questionnaire that asked them to rate their overall experience of viewing videos in the experiment.

4.1 Results

The TQ ratings provided by participants confirmed our hypotheses that I0 and I1 videos would be rated most favourably, non-retainability failures would be rated least favourably, and the I2, I3, and I4 impairments would have ratings between the two extremes (see Fig. 3). Because of this trend, we created a new factor called Expected Technical Quality (Expected TQ) that classified the disrupted videos into three groups: Good (I0, I1), Medium (I2, I3, I4), and Poor (R0, R1, R2, R3).
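The Expected TQ grouping amounts to a lookup from disruption type to level. A minimal Python sketch follows; the dictionary and function names are hypothetical, while the groupings are the ones given above and the numeric codes (1, 2, 3) are those used later in this section for the sequence features.

```python
# Grouping of the nine disruption types into Expected TQ levels.
EXPECTED_TQ = {
    "Good": ("I0", "I1"),
    "Medium": ("I2", "I3", "I4"),
    "Poor": ("R0", "R1", "R2", "R3"),
}

# Invert the grouping so that each disruption type maps to its level.
LEVEL_OF = {d: level for level, types in EXPECTED_TQ.items() for d in types}

# Numeric coding of the levels (1 = good, 2 = medium, 3 = poor).
LEVEL_CODE = {"Good": 1, "Medium": 2, "Poor": 3}


def expected_tq(disruption_type):
    """Return the Expected TQ level for a disruption type such as 'I3'."""
    try:
        return LEVEL_OF[disruption_type]
    except KeyError:
        raise ValueError(f"unknown disruption type: {disruption_type!r}")


# Hypothetical block of five videos and its numeric coding.
block = ["I0", "R3", "I2", "I1", "R1"]
print([LEVEL_CODE[expected_tq(d)] for d in block])
```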

Repeated measures ANOVA was carried out with payment type (Pay Type) and Expected TQ as the experimental factors, and TQ as the dependent variable. Mauchly’s test was used to test for sphericity, and degrees of freedom were adjusted using the Greenhouse-Geisser criterion where necessary (as described in [16]). There was a significant main effect of Expected TQ (F [2, 22] = 106.8, p < .001), but the main effect of Pay Type, and the interaction of Pay Type with Expected TQ were non-significant (F < 1 in both cases). As can be seen in Fig. 4, the mean TQ across the three levels of Expected TQ was almost identical for the two Pay Types.

We developed a GoodBad measure to assess sensitivity to differences in degree of impairment (number of freezings) of the videos. Equation 1 below shows the formula for the GoodBad measure, which reflects the mean of four difference scores between rated TQ for videos with few if any impairments (I0, I1, I2), and rated TQ for videos with a higher number of impairments (I3, and I4).

We screened out three participants who had low sensitivity to differences in number of impairments, as indicated by their GoodBad scores. We then carried out further analyses on the remaining 11 participants. First we created a set of features to capture different sequencing of good and bad videos within blocks. Disruption types were classified as “good”, “medium”, or “poor” as explained above (beginning of Sect. 4.1) and these types were coded respectively as 1, 2, and 3. Table 3 shows the coding scheme then used for the First2 and Last2 features. For instance, if the first two videos were both good the value of First2 would be 1, and similarly for the last two videos and Last2. As another example of the coding (fourth row of the table), if the first video in the pair was good and the second video in the pair was poor, then the corresponding score for First2 or Last2 would be 4.

Table 4 provides an overview of the peak and sequence features. We then carried out two multiple regression analyses to determine which block features influenced Block TQ, and Block OE, respectively. The Pearson correlation between the Block TQ and Block OE was .587. Other correlations between the two outcome measures and the predictors are shown in Table 5. All of the correlations between the features used as predictors (below) were below .4 except for the correlation between First and First2 (r = .709), and the correlation between Last and Last2 (r = .695).

The predictors in the multiple regression analysis were First, First2, Last, Last2, positive.peak, negative.peak, and longest.bad.sequence. As recommended by Field et al. [16] for an exploratory analysis, backward stepwise removal of the predictors was used. For Block TQ, the backward stepwise analysis removed all the predictors except Last2. The correlation of Last2 with Block TQ was .538.
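Some of these block features can be computed directly from the 1/2/3 coding of a block, as in the following Python sketch. It rests on assumptions that the text does not settle: First and Last are taken to be the coded level of the first and last video, and a “bad” video is taken to be one coded 3 (poor), which can be changed through the hypothetical `bad_codes` parameter. The peak features and the First2/Last2 pair codes are left out because their definitions are given only in the tables.

```python
def longest_run(codes, targets):
    """Length of the longest run of consecutive codes that are in targets."""
    best = current = 0
    for c in codes:
        current = current + 1 if c in targets else 0
        best = max(best, current)
    return best


def block_sequence_features(codes, bad_codes=(3,)):
    """Return First, Last and longest bad sequence for one coded block.

    codes is the ordered list of Expected TQ codes (1 good, 2 medium, 3 poor).
    bad_codes is an assumption about which codes count as "bad".
    """
    if not codes:
        raise ValueError("a block must contain at least one video")
    return {
        "First": codes[0],
        "Last": codes[-1],
        "longest_bad_sequence": longest_run(codes, bad_codes),
    }


# Hypothetical block: good, poor, poor, medium, poor.
print(block_sequence_features([1, 3, 3, 2, 3]))
# Same block when medium videos are also counted as bad.
print(block_sequence_features([1, 3, 3, 2, 3], bad_codes=(2, 3)))
```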

For Block OE, Last and longest.bad.sequence were the two predictors in the selected model (adjusted R-squared as reported by IBM SPSS was .253). Since one of the Last (Last or Last2) predictors was part of the chosen model for both the outcomes, and Last2 was almost as highly correlated with Block OE, and more highly correlated with Block TQ than Last, we selected Last2 as a predictor for the next analysis along with Longest Bad Sequence, which had the next highest correlation with the two outcomes. Two forced entry regression analyses were carried out. In the first analysis (with Block OE as the dependent measure), adjusted R-squared for the model was .197, and both predictors were significant (p < .05, Table 6).

For the model predicting Block TQ, the adjusted R-squared was .282, and only Last2 was a significant predictor (Table 7).

Figure 5 shows Last2 and Longest Bad Sequence (the number of “bad” videos in the longest sequence) plotted against Block OE. The “f” and “p” labels stand for free or paid service in the block. There was a strong end effect with Block OE scores tending to increase as the Last2 score increased. Block OE scores were ranked from best to worst, so a higher score reflected a more negative opinion. Thus, as the Last2 score increased (i.e., the end of the block had worse TQ), Block OE became less favourable. There was also a sequencing effect where longer bad sequences were associated with lower Block OE. In contrast, there was no tendency for these effects to differ between the “free” and “paid” blocks, which were inter-mixed throughout the scatterplot.

Figure 6 shows Last2 plotted against Block TQ. The “f” and “p” labels in the figure again indicate whether the block corresponding to that data point was represented as a free or paid service. It can be seen that there is a strong end effect with Block TQ tending to increase as the Last2 score increases. Block TQ satisfaction was rated using a Likert scale from Strongly Agree to Strongly Disagree (coded from 1 to 5), meaning that higher scores reflected less satisfaction with the TQ of the block. Figure 6 shows that blocks with “bad” ends are associated with less satisfaction. Again, there is no tendency for this effect to differ between the “free” and “paid” blocks, which are inter-mixed throughout the scatterplot.

5 Conclusions

Payment effects were not found in Study 2, nor did payment type interact significantly with the end effect that was observed. One possible explanation for this counterintuitive result is that people are less susceptible to payment effects when judging TQ if they actually watch the video rather than imagine the experience. An alternative possibility is that the experimental manipulation of payment type was unsuccessful, either because the participants did not find the amounts of money involved large enough to worry about, or because they did not believe that they were actually having to pay, since the payment concerned money they had not yet received. Further research is needed to pin down the impact of payment type, not only on judgments of technical quality, but also on peak-end effects.

Both studies discussed here found a reasonably strong end effect. Study 1 also found a negative “sequence effect” involving pairs of bad videos in the middle of block sequences. Study 2 found an effect of the longest bad sequence in the block on Block OE, but longest bad sequence did not have a significant effect on rated block TQ. In summary, the studies reported here succeeded in showing that the sequence of disruption types within a block of viewed videos does in fact have an impact on Block TQ, and on Block OE. This suggests that peak-end effects will influence customer experience for online video and that estimation of QoE should take these effects into account.

References

1. Fredrickson, B.L., Kahneman, D.: Duration neglect in retrospective evaluations of affective episodes. J. Pers. Soc. Psychol. 65(1), 45–55 (1993)

2. Kahneman, D.: Thinking, Fast and Slow. Random House, New York (2011)

3. Moeller, S., Raake, A.: Quality of Experience: Advanced Concepts, Applications and Methods. Springer, Cham (2014)

4. Chignell, M., Zucherman, L., Kaya, D., Jiang, J.: Peak-end effects in video quality of experience. In: IEEE Digital Media Industry and Academic Forum, Santorini, Greece (2016, to appear)

5. Bharadwaj, S.G., Varadarajan, R., Fahy, J.: Sustainable competitive advantage in service industries: a conceptual model and research propositions. J. Mark. 57(4), 83–99 (1993)

6. Li, W., Spachos, P., Chignell, M., Leon-Garcia, A., Zucherman, L., Jiang, J.: Impact of technical and content quality of overall experience of OTT Video. In: 2016 IEEE Consumer Communications and Networking Conference (CCNC) (in press)

7. ITU-T: Subjective Video Quality Assessment Methods for Multimedia Applications. Recommendation P.910, Telecommunication Standardization Sector of ITU (2008)

8. Leon-Garcia, A., Zucherman, L.: Generalizing MOS to assess technical quality for end-to-end telecom session. In: Globecom Workshops (GC Wkshps), pp. 681–687. IEEE, New York (2014)

9. Laghari, K., Gupta, R., Arndt, S., Antons, J.-N., Schleicher, R., Möller, S., Falk, T.H.: Neurophysiological experimental facility for quality of experience (QoE) assessment. In: Proceedings of the First IFIP/IEEE International Workshop on Quality of Experience Centric Management, pp. 1300–1305. IEEE, New York (2013)

10. Khirman, S., Henriksen, P.: Relationship between quality of service and quality of experience for public internet service. In: Proceedings of Passive and Active Measurement (PAM 2002), Fort Collins, Colorado (2002)

11. Spachos, P., Li, W., Chignell, M., Leon-Garcia, A., Zucherman, L., Jiang, J.: Acceptability and quality of experience in over the top video. In: 2015 IEEE International Conference on Communication Workshop (ICCW), pp. 1693–1698. IEEE, New York (2015)

12. Lottridge, D., Chignell, M., Jovicic, A.: Affective interaction understanding, evaluating, and designing for human emotion. Rev. Hum. Fact. Ergon. 7(1), 197–217 (2011)

13. Kahneman, D.: New challenges to the rationality assumption. In: Kahneman, D., Tversky, A. (eds.) Choices, Values, and Frames, pp. 758–774. Cambridge University Press, Cambridge (2000)

14. Baumgartner, H., Sujan, M., Padgett, D.: Patterns of affective reactions to advertisements: the integration of moment-to-moment responses into overall judgments. J. Mark. Res. 34, 219–232 (1997)

15. Chignell, M., Kealey, R., DeGuzman, C., Zucherman, L., Jiang, J.: Using visualizations to measure SQE: individual differences, and pricing and disruption type effects. Working paper, Interactive Media Lab, University of Toronto (2016)

16. Field, A., Miles, J., Field, Z.: Discovering Statistics Using R. Sage, Thousand Oaks (2012)

Acknowledgment

The authors would like to thank Kanmanus Ongvisatepaiboon for his assistance in developing the software used in the experiment. This research was funded by a grant from TELUS Communications Company, and by an NSERC Collaborative Research Development Grant, both to the first author.

© 2016 Springer International Publishing Switzerland

Cite this paper

Chignell, M., de Guzman, C., Zucherman, L., Jiang, J., Chan, J., Charoenkitkarn, N. (2016). Improving Sense of Well-Being by Managing Memories of Experience. In: Yamamoto, S. (eds) Human Interface and the Management of Information: Applications and Services. HIMI 2016. Lecture Notes in Computer Science(), vol 9735. Springer, Cham. https://doi.org/10.1007/978-3-319-40397-7_43

DOI: https://doi.org/10.1007/978-3-319-40397-7_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-40396-0

Online ISBN: 978-3-319-40397-7

eBook Packages: Computer Science, Computer Science (R0)