Abstract

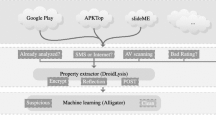

Antivirus (AV) engines generally lack consensus in their decisions on a given sample, which challenges the building of authoritative ground-truth datasets. Instead, researchers and practitioners may rely on unvalidated approaches to build their ground truth, e.g., by considering decisions from a selected set of antivirus vendors or by setting a threshold number of positive detections before classifying a sample. Both approaches are biased: they implicitly either rank AV products or assume that all AV decisions carry equal weight. In this paper, we extensively investigate the lack of agreement among AV engines. To that end, we propose a set of metrics that quantitatively describe the different dimensions of this lack of consensus. We show how our metrics can bring important insights by using the detection results of 66 AV products on 2 million Android apps as a case study. Our analysis focuses not only on the binary decisions of AVs but also on the notoriously hard problem of the labels that AVs associate with suspicious files, and it highlights biases hidden in the collection of a malware ground truth, a foundation stone of any malware detection approach.
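The two ground-truth heuristics mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the engine identifiers (`AV1` to `AV4`), and the "any trusted engine flags it" rule for the selected-vendor case are assumptions made for illustration only.

```python
from typing import Dict, Iterable


def label_by_threshold(detections: Dict[str, bool], threshold: int) -> bool:
    """Treat a sample as malware when at least `threshold` engines flag it.

    Every engine counts equally here, which is the implicit
    equal-weight assumption the abstract points out.
    """
    return sum(detections.values()) >= threshold


def label_by_selected_avs(detections: Dict[str, bool], trusted: Iterable[str]) -> bool:
    """Treat a sample as malware when any engine in `trusted` flags it.

    Choosing `trusted` implicitly ranks AV products. The "any" rule is
    an assumption of this sketch; other aggregation rules are possible.
    """
    return any(detections.get(av, False) for av in trusted)


# Hypothetical detection results for one sample (engine ID -> flagged?).
sample = {"AV1": True, "AV2": False, "AV3": False, "AV4": True}

by_threshold_2 = label_by_threshold(sample, threshold=2)
by_threshold_3 = label_by_threshold(sample, threshold=3)
by_trusted_subset = label_by_selected_avs(sample, trusted=["AV2", "AV3"])
```

With the same detection results, the three calls disagree on whether the sample belongs in the malware set, which is the kind of bias in ground-truth construction that the abstract describes.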

Notes

- 1.

We make available a full open source implementation under the name STASE at https://github.com/freaxmind/STASE.

- 2.

- 3.

Since the goal of this study is not to evaluate the individual performance of antivirus engines, their names have been omitted and replaced by a unique number (ID).

- 4.

We could not obtain the results for 54 151 (2.56 %) applications because of a file size limit imposed by VirusTotal.

- 5.

AVs considered in [8]: AntiVir, AVG, BitDefender, ClamAV, ESET, F-Secure, Kaspersky, McAfee, Panda, Sophos.

- 6.

If one set of AVs yields a percentage x above 50 %, then the corresponding value for the other set is (100 − x) %, which is below 50 %.

References

Symantec: ISTR 20 - Internet Security Threat Report, April 2015. http://know.symantec.com/LP=1123

Bailey, M., Oberheide, J., Andersen, J., Mao, Z.M., Jahanian, F., Nazario, J.: Automated classification and analysis of internet malware. In: Kruegel, C., Lippmann, R., Clark, A. (eds.) RAID 2007. LNCS, vol. 4637, pp. 178–197. Springer, Heidelberg (2007)

Canto, J., Sistemas, H., Dacier, M., Kirda, E., Leita, C.: Large scale malware collection: lessons learned. In: 27th International Symposium on Reliable Distributed Systems, vol. 52(1), pp. 35–44 (2008)

Maggi, F., Bellini, A., Salvaneschi, G., Zanero, S.: Finding non-trivial malware naming inconsistencies. In: Jajodia, S., Mazumdar, C. (eds.) ICISS 2011. LNCS, vol. 7093, pp. 144–159. Springer, Heidelberg (2011)

Mohaisen, A., Alrawi, O.: AV-Meter: an evaluation of antivirus scans and labels. In: Dietrich, S. (ed.) DIMVA 2014. LNCS, vol. 8550, pp. 112–131. Springer, Heidelberg (2014)

Perdisci, R., U, M.: Vamo: towards a fully automated malware clustering validity analysis. In: Annual Computer Security Applications Conference, pp. 329–338 (2012)

VirusTotal: VirusTotal about page. https://www.virustotal.com/en/about/

Arp, D., Spreitzenbarth, M., Malte, H., Gascon, H., Rieck, K.: Drebin: effective and explainable detection of android malware in your pocket. In: Symposium on Network and Distributed System Security (NDSS), pp. 23–26 (2014)

Yang, C., Xu, Z., Gu, G., Yegneswaran, V., Porras, P.: DroidMiner: automated mining and characterization of fine-grained malicious behaviors in android applications. In: Kutyłowski, M., Vaidya, J. (eds.) ESORICS 2014, Part I. LNCS, vol. 8712, pp. 163–182. Springer, Heidelberg (2014)

Kantchelian, A., Tschantz, M.C., Afroz, S., Miller, B., Shankar, V., Bachwani, R., Joseph, A.D., Tygar, J.D.: Better malware ground truth: techniques for weighting anti-virus vendor labels. In: AISec 2015, pp. 45–56. ACM (2015)

Rossow, C., Dietrich, C.J., Grier, C., Kreibich, C., Paxson, V., Pohlmann, N., Bos, H., Van Steen, M.: Prudent practices for designing malware experiments: Status quo and outlook. In: Proceedings of S&P, pp. 65–79 (2012)

Allix, K., Jérome, Q., Bissyandé, T.F., Klein, J., State, R., Le Traon, Y.: A forensic analysis of android malware-how is malware written and how it could be detected? In: COMPSAC 2014, pp. 384–393. IEEE (2014)

Bureau, P.M., Harley, D.: A dose by any other name. In: Virus Bulletin Conference, VB, vol. 8, pp. 224–231 (2008)

Li, P., Liu, L., Gao, D., Reiter, M.K.: On challenges in evaluating malware clustering. In: Jha, S., Sommer, R., Kreibich, C. (eds.) RAID 2010. LNCS, vol. 6307, pp. 238–255. Springer, Heidelberg (2010)

Bayer, U., Comparetti, P.M., Hlauschek, C., Kruegel, C., Kirda, E.: Scalable, behavior-based malware clustering. In: Proceedings of the 16th Annual Network and Distributed System Security Symposium (NDSS 2009) (1) (2009)

Gashi, I., Sobesto, B., Mason, S., Stankovic, V., Cukier, M.: A study of the relationship between antivirus regressions and label changes. In: ISSRE, November 2013

GData: Mobile malware report (Q3 2015). https://secure.gd/dl-en-mmwr201503

Enck, W., Ongtang, M., McDaniel, P.: On lightweight mobile phone application certification. In: Proceedings of the 16th ACM Conference on Computer and Communications Security - CCS 2009, pp. 235–245 (2009)

Enck, W., Octeau, D., McDaniel, P., Chaudhuri, S.: A study of android application security. In: Proceedings of the 20th USENIX Security, vol. 21 (2011)

Felt, A.P., Chin, E., Hanna, S., Song, D., Wagner, D.: Android permissions demystified. In: Proceedings of the 18th ACM Conference on Computer and Communications Security, CCS 2011, pp. 627–638. ACM, New York (2011)

Yan, L., Yin, H.: Droidscope: seamlessly reconstructing the os and dalvik semantic views for dynamic android malware analysis. In: Proceedings of the 21st USENIX Security Symposium, vol. 29 (2012)

Zhou, Y., Wang, Z., Zhou, W., Jiang, X.: Hey, you, get off of my market: detecting malicious apps in official and alternative android markets. In: Proceedings of the 19th Annual Network and Distributed System Security Symposium (2), pp. 5–8 (2012)

Barrera, D., Kayacik, H.G., van Oorschot, P.C., Somayaji, A.: A methodology for empirical analysis of permission-based security models and its application to android. In: Proceedings of the 17th ACM CCS (1), pp. 73–84 (2010)

Peng, H., Gates, C., Sarma, B., Li, N., Qi, Y., Potharaju, R., Nita-Rotaru, C., Molloy, I.: Using probabilistic generative models for ranking risks of android apps. In: Proceedings of the 2012 ACM CCS, pp. 241–252. ACM (2012)

Sommer, R., Paxson, V.: Outside the closed world: on using machine learning for network intrusion detection. In: Proceedings of the 2010 IEEE S&P, pp. 305–316 (2010)

Allix, K., Bissyandé, T.F., Jérome, Q., Klein, J., State, R., Le Traon, Y.: Empirical assessment of machine learning-based malware detectors for android. Empirical Softw. Eng. 21, 183–211 (2014)

Allix, K., Bissyandé, T.F., Klein, J., Le Traon, Y.: Are your training datasets yet relevant? In: Piessens, F., Caballero, J., Bielova, N. (eds.) ESSoS 2015. LNCS, vol. 8978, pp. 51–67. Springer, Heidelberg (2015)

Allix, K., Bissyandé, T.F., Klein, J., Le Traon, Y.: Androzoo: collecting millions of android apps for the research community. In: MSR 2016 (2016)

Zhou, Y., Jiang, X.: Dissecting android malware: characterization and evolution. In: Proceedings of the 2012 IEEE S&P, pp. 95–109. IEEE Computer Society (2012)

Hurier, M.: Definition of ouroboros. https://github.com/freaxmind/ouroboros

Pfitzner, D., Leibbrandt, R., Powers, D.: Characterization and evaluation of similarity measures for pairs of clusterings. Knowl. Inf. Syst. 19(3), 361–394 (2009)

Cohen, W.W., Ravikumar, P., Fienberg, S.E.: A comparison of string metrics for matching names and records. In: KDD Workshop on Data Cleaning and Object Consolidation, vol. 3 (2003)

Harley, D.: The game of the name malware naming, shape shifters and sympathetic magic. In: CEET 3rd International Conference on Cybercrime Forensics Education & Training, San Diego, CA (2009)

Wang, T., Meng, S., Gao, W., Hu, X.: Rebuilding the tower of babel: towards cross-system malware information sharing. In: Proceedings of the 23rd ACM CIKM, pp. 1239–1248 (2014)

Acknowledgment

This work was supported by the Fonds National de la Recherche (FNR), Luxembourg, under the project AndroMap C13/IS/5921289.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Hurier, M., Allix, K., Bissyandé, T.F., Klein, J., Le Traon, Y. (2016). On the Lack of Consensus in Anti-Virus Decisions: Metrics and Insights on Building Ground Truths of Android Malware. In: Caballero, J., Zurutuza, U., Rodríguez, R. (eds) Detection of Intrusions and Malware, and Vulnerability Assessment. DIMVA 2016. Lecture Notes in Computer Science, vol 9721. Springer, Cham. https://doi.org/10.1007/978-3-319-40667-1_8

DOI: https://doi.org/10.1007/978-3-319-40667-1_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-40666-4

Online ISBN: 978-3-319-40667-1

eBook Packages: Computer Science, Computer Science (R0)