Abstract

Big data is characterized by the 4Vs (volume, velocity, variety, and variability). In this paper we focus on velocity, which in practice usually comes together with volume. This means that a crucial problem of contemporary data analytics is how to discover useful knowledge from fast-incoming data. The paper presents an online data stream classification method, which adapts classification with context to recognize incoming examples and additionally takes into consideration memory and processing time limitations. The proposed method was evaluated on a real medical diagnosis task. The preliminary results of the experiments encourage us to continue work on the proposed approach.


1 Introduction

The analysis of huge volumes of fast-arriving data has recently been the focus of intense research, because such methods can build a competitive advantage for a given company. One useful approach is data stream classification, which is employed to solve problems such as discovering changes in client preferences, spam filtering, fraud detection, and medical diagnosis, to enumerate only a few. Basically, there are two classifier design approaches:

- “build and use”, which first trains a model and then uses the trained classifier to make decisions, and

- “build, use, and improve”, which first builds a model quickly, then uses the trained classifier to make decisions while continuously tuning the model parameters.

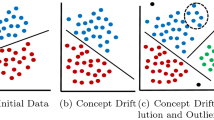

The first approach is the traditional one and may be used only under the assumptions that the data used for training and the data being recognized come from the same distribution, and that the number of training examples is large enough for the model to be trained well (i.e., it is not undertrained). Of course, such assumptions can be accepted for many practical tasks; nevertheless, many contemporary problems do not allow us to accept them, and we should take into consideration that the statistical dependencies describing the classification task, such as the prior probabilities and the conditional probability density functions, could change. Additionally, we should respect the fact that data may arrive very fast, which makes it impossible for a human expert to label every arriving example manually; instead, each object has to be labeled by a classifier. The first problem is called concept drift [1], and efficient methods able to deal with it are still the focus of intense research, because this phenomenon may cause a significant deterioration in the accuracy of the deployed classifier. Basically, the following approaches may be considered to deal with the above problem:

- detecting the drift and retraining the classifier,

- rebuilding the model frequently, and

- adapting the classification model to changes.

In this work, we focus on data streams without drift, or where the changes are very slow, smooth, and not significant. The proposed method follows the “build, use, and improve” model, i.e., it can be used to classify incoming examples even if the classifier’s model still requires training. Thus, we concentrate on the family of online learning algorithms, which continuously update the classifier parameters while processing the incoming data. Not all types of classifiers can act as online learners. Basically, they have to meet the following requirements [2]: (i) each object is processed only once in the course of training; (ii) the memory and processing time are limited; and (iii) the training process can be paused at any time, and the accuracy of the classifier at that point should not be lower than that of a classifier trained in batch mode on the data collected up to that time.

In this research we adapt online learning to data stream classification, but the proposed model also takes into consideration additional information about incoming objects. We assume that decisions are not independent, i.e., each decision may depend on the previous classification. This situation is typical for medical diagnosis, especially for chronic diseases, such as hypertension diagnosis and therapy planning or, as considered in this work, human acid-base state recognition. In such diagnosis tasks, not only the recent observations of the patient are taken into consideration when a decision is made; a doctor should also consider the patient’s history, such as previously ordered therapies and diagnoses.

There are several works related to recognition with context. The first one was published by Raviv in 1967 [3], who applied the Markov chain model to character recognition. “Classification with context” has been promoted especially by Toussaint [4] and Haralick [5]. It has been widely applied in several domains, such as image processing or medical diagnosis [6]. The main contribution of this work is a novel data stream classifier with context, which makes a decision on the basis of recent observations of the incoming object and additionally takes into consideration the previous classifications. In line with the restrictions of data stream analytics tools, it uses limited memory and computational time.

2 Probabilistic Model of On-line Data Stream Classification

As we mentioned before, in many pattern classification tasks there are dependencies among the patterns to be classified, e.g., in character recognition (especially if we have prior knowledge that the incoming characters form words of a given language, so that the probability of a character’s appearance depends on the previously recognized characters), image classification, and chronic disease diagnosis (where historical data plays a crucial role in high-quality medical assessment), to name only a few.

The formalization of this classification task requires that successively classified objects are related to one another, and it could also take into consideration external factors that change the character of these relationships. Let us illustrate this task with an example from medical diagnosis. The aim is to classify the successive states of a patient. Each diagnosis could be the basis for a certain therapeutic procedure. Thus, we obtain a closed-loop system, in which the object under observation could simultaneously be subjected to control (treatment) that depends on the classification.

In this research we do not take the control into consideration, although it could be recognized as a cause of concept drift. Unlike the traditional concept drift model, where drift appears randomly, in this case the drift has a deterministic nature. In the future, we are going to extend the model by applying methods related to so-called recurrent concept drift [7].

Let us present the mathematical model of the online classifier with context [4]. Online classification consists of classifying a sequence of observations of the incoming objects. Let \(x_n \in \mathcal {X}\subseteq \mathbb {R}^d\) denote the feature vector characterizing the \(n\)th object and \(j_n \in \mathcal {M}=\{1,...,M\}\) be its label. The probabilistic approach implies the assumption that \(x_n\) and \(j_n\) are the observed values of a pair of random variables \(\mathbf {X}_n\) and \(\mathbf {J}_n\). Let us model the interdependence between successive classifications as a first-order time-homogeneous Markov chain. Its probabilistic characteristics, i.e., the transition probabilities and the initial probabilities, are given by the following formulas. The transition probability describes the probability that a given object belongs to class \(j_n\) if the previous object belonged to class \(j_{n-1}\):

\[ p_{j_n, j_{n-1}} = P(\mathbf{J}_n = j_n \mid \mathbf{J}_{n-1} = j_{n-1}), \quad j_n, j_{n-1} \in \mathcal{M}; \]

the transition probabilities form the transition matrix

\[ \mathbf{P} = \left[ p_{i,k} \right]_{M \times M}, \quad \sum_{i=1}^{M} p_{i,k} = 1 \ \text{for every } k \in \mathcal{M}, \]

and the initial probabilities

\[ p_{j_1} = P(\mathbf{J}_1 = j_1), \quad j_1 \in \mathcal{M}, \]

form the vector of initial probabilities

\[ \mathbf{p} = [p_1, p_2, \ldots, p_M]^{T}. \]
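Section 3 states that the transition and initial probabilities are updated continuously, but the estimator itself is not spelled out. The following is a minimal Python sketch, assuming relative-frequency counts with add-one smoothing (our assumption, so that no probability is zero before data arrives). Entry `[i][k]` of the estimated matrix corresponds to the probability that class \(i+1\) follows class \(k+1\), so each column sums to one. The class and method names are hypothetical.

```python
from typing import List


class MarkovChainEstimator:
    """Relative-frequency estimates of the initial vector and the transition
    matrix of a first-order homogeneous Markov chain over classes 1..M.

    Assumptions (not taken from the paper): every count starts at `prior`
    (add-one smoothing by default), and classes are stored 0-based.
    """

    def __init__(self, n_classes: int, prior: float = 1.0) -> None:
        self.m = n_classes
        self.initial_counts = [prior] * n_classes
        # counts[i][k] counts moves from class k+1 to class i+1, so that
        # transition()[i][k] estimates P(J_n = i+1 | J_{n-1} = k+1).
        self.counts = [[prior] * n_classes for _ in range(n_classes)]

    def observe_start(self, j1: int) -> None:
        """Online update with the label of the first object of a sequence."""
        self.initial_counts[j1 - 1] += 1

    def observe_transition(self, prev: int, curr: int) -> None:
        """Online update with one newly labelled (previous, current) pair."""
        self.counts[curr - 1][prev - 1] += 1

    def observe_sequence(self, labels: List[int]) -> None:
        """Convenience update with a whole labelled sequence (e.g. one patient)."""
        if not labels:
            return
        self.observe_start(labels[0])
        for prev, curr in zip(labels, labels[1:]):
            self.observe_transition(prev, curr)

    def initial(self) -> List[float]:
        total = sum(self.initial_counts)
        return [c / total for c in self.initial_counts]

    def transition(self) -> List[List[float]]:
        # Normalise every column k: the probabilities of all classes that can
        # follow class k+1 must sum to one.
        col_sums = [sum(row[k] for row in self.counts) for k in range(self.m)]
        return [[row[k] / col_sums[k] for k in range(self.m)] for row in self.counts]


if __name__ == "__main__":
    est = MarkovChainEstimator(n_classes=2)
    est.observe_sequence([1, 1, 1, 2])
    print(est.transition())  # [[0.6, 0.5], [0.4, 0.5]]
```

Because each newly labelled pair only increments one counter, the update costs constant time and memory per object, which fits the online requirements listed in the Introduction.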

We also assume that the probability distributions of the random variables \(\mathbf {X}_n\) and \(\mathbf {J}_n\) exist and that they are characterized by the conditional probability density functions (CPDFs)

\[ f(x_n \mid j_n), \quad j_n \in \mathcal{M}. \]

Additionally, the probability density functions \(f(\overline{x}_n|\overline{j}_n)\) exist and the observed stream (sequence of observations) is conditionally independent given the class labels, i.e.,

\[ f(\overline{x}_n \mid \overline{j}_n) = \prod_{k=1}^{n} f(x_k \mid j_k), \]

where \(\overline{x}_n=(x_1,x_2,...,x_n)\) and \(\overline{j}_n=(j_1,j_2,...,j_n)\).

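The optimal classifier \(\varPsi ^*\) makes a decision using the following formula (see Note 1):

\[ \varPsi^*(\overline{x}_n) = i_n \quad \text{if} \quad p(i_n \mid \overline{x}_n) = \max_{k \in \mathcal{M}} p(k \mid \overline{x}_n), \]

where the posterior probability

\[ p(j_n \mid \overline{x}_n) = P(\mathbf{J}_n = j_n \mid \mathbf{X}_1 = x_1, \ldots, \mathbf{X}_n = x_n) \]

could be calculated recursively

\[ p(j_n \mid \overline{x}_n) = \frac{f(x_n \mid j_n) \sum_{j_{n-1}=1}^{M} p_{j_n, j_{n-1}} \, p(j_{n-1} \mid \overline{x}_{n-1})}{\sum_{j'_n=1}^{M} f(x_n \mid j'_n) \sum_{j_{n-1}=1}^{M} p_{j'_n, j_{n-1}} \, p(j_{n-1} \mid \overline{x}_{n-1})}, \]

with the initial condition

\[ p(j_1 \mid \overline{x}_1) = \frac{p_{j_1} f(x_1 \mid j_1)}{\sum_{j=1}^{M} p_{j} \, f(x_1 \mid j)}. \]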
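The recursive posterior computation is a predict-and-correct step with the same structure as the forward pass of a hidden Markov model: the previous posterior is propagated through the transition probabilities and then weighted by the class-conditional density of the new observation \(x_n\), after which the result is normalised; the first step uses the initial probabilities instead of a previous posterior. The Python sketch below is illustrative only. It assumes the density values \(f(x_n|j)\) are already available (e.g., from a non-parametric estimator), and the function names are hypothetical.

```python
from typing import List


def initial_posterior(p0: List[float], f1: List[float]) -> List[float]:
    """p(j | x_1) = p_j f(x_1|j) / sum_i p_i f(x_1|i), for j = 1..M."""
    joint = [pj * fj for pj, fj in zip(p0, f1)]
    z = sum(joint)
    return [v / z for v in joint]


def update_posterior(prev_post: List[float], P: List[List[float]],
                     fn: List[float]) -> List[float]:
    """One recursion step.

    prev_post[k] = p(k+1 | x_1..x_{n-1}),
    P[i][k]      = P(J_n = i+1 | J_{n-1} = k+1)  (columns sum to one),
    fn[i]        = f(x_n | i+1).
    Returns p(j | x_1..x_n) for j = 1..M.
    """
    m = len(prev_post)
    # Prediction: probability of each class at step n given x_1..x_{n-1}.
    predicted = [sum(P[i][k] * prev_post[k] for k in range(m)) for i in range(m)]
    # Correction: weight by the likelihood of x_n and normalise.
    unnorm = [fn[i] * predicted[i] for i in range(m)]
    z = sum(unnorm)
    return [v / z for v in unnorm]


def decide(post: List[float]) -> int:
    """Decision under 0-1 loss: the (1-based) class with the largest posterior."""
    return max(range(len(post)), key=post.__getitem__) + 1


if __name__ == "__main__":
    P = [[0.75, 0.25],
         [0.25, 0.75]]                                   # columns sum to one
    post = initial_posterior([0.5, 0.5], [0.25, 0.75])   # -> [0.25, 0.75]
    post = update_posterior(post, P, [0.5, 0.5])         # -> [0.375, 0.625]
    print(post, decide(post))                            # [0.375, 0.625] 2
```

Only the previous posterior vector (\(M\) numbers) has to be kept between objects, so the context is carried along without storing the history of observations.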

3 Proposed Algorithm

Note that the posterior probabilities used as support functions can be calculated recursively, but this calculation requires knowledge of the CPDFs. We propose a hybrid approach based mostly on non-parametric estimation, which fits the chosen “build, use, and improve” model; however, non-parametric estimation requires newly arriving examples to be stored, which may violate the memory limit. Because only limited memory is at our disposal, we store only a fixed number of training examples for CPDF estimation, while the probability characteristics (transition and initial probabilities) are updated continuously. Thus, the memory limitation is respected. To keep the classification model up to date, we add new incoming examples to the training set only if their labels are available. This is consistent with the assumption that an expert is not always available. In the future we could control which objects should be labeled, e.g., using the active learning paradigm [9], as proposed in previous work by one of the authors [10], where the decision about labeling an example is made on the basis of its distance from the classifier’s decision boundary. When a new example is stored, the oldest example in the training set is removed to keep the training set size fixed. This procedure allows us to update the training set continuously and to keep in memory the examples that are most relevant to the recent concept. The detailed description of the method is presented in Algorithm 1. To calculate the support functions we use a procedure inspired by the \(k_n\)-Nearest Neighbor CPDF estimator [11], whose pseudocode is presented in Algorithm 2. This method is not particularly computationally efficient, but for a fixed training set size its processing time is predictable, which fulfills the processing time limitation.
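Algorithms 1 and 2 are not reproduced here. The Python sketch below only illustrates the two ingredients described in the paragraph: a fixed-size training set that drops its oldest example whenever a new labelled one is added, and a nearest-neighbour density estimate per class. It uses the textbook kNN density form \(f(x|j) \approx k_j / (N_j V_d(r_j))\) with a fixed \(k\); this is our assumption, and the \(k_n\)-NN variant of [11] may choose the number of neighbours differently. All names are hypothetical.

```python
import math
from collections import deque
from typing import List


class SlidingKnnCpdf:
    """Sketch: fixed-size FIFO training set plus a kNN estimate of f(x | j).

    For class j:  f(x | j) ~= k_j / (N_j * V_d(r_j)), where N_j is the number
    of stored class-j examples, k_j = min(k, N_j), r_j is the distance from x
    to its k_j-th nearest stored class-j example, and V_d(r) is the volume
    of a d-dimensional ball of radius r.
    """

    def __init__(self, n_classes: int, capacity: int, k: int) -> None:
        self.m = n_classes
        self.k = k
        # With maxlen set, appending to a full deque discards the oldest item,
        # which keeps the training set at a fixed size.
        self.buffer = deque(maxlen=capacity)

    def add(self, x: List[float], label: int) -> None:
        """Store a labelled example; unlabelled objects are simply not added."""
        self.buffer.append((tuple(x), label))

    @staticmethod
    def ball_volume(d: int, r: float) -> float:
        return math.pi ** (d / 2) * r ** d / math.gamma(d / 2 + 1)

    def cpdf(self, x: List[float], eps: float = 1e-12) -> List[float]:
        """Return the estimates [f(x|1), ..., f(x|M)]."""
        xt = tuple(x)
        d = len(xt)
        estimates = []
        for j in range(1, self.m + 1):
            dists = sorted(math.dist(xt, xi) for xi, lab in self.buffer if lab == j)
            kj = min(self.k, len(dists))
            if kj == 0:
                estimates.append(0.0)  # no stored example of class j yet
                continue
            r = max(dists[kj - 1], eps)  # guard against a zero-radius ball
            estimates.append(kj / (len(dists) * self.ball_volume(d, r)))
        return estimates


if __name__ == "__main__":
    est = SlidingKnnCpdf(n_classes=2, capacity=3, k=1)
    for x, y in [([0.0], 1), ([1.0], 1), ([10.0], 2), ([11.0], 2)]:
        est.add(x, y)          # the first example is forgotten
    print(est.cpdf([1.5]))     # approx [1.0, 0.0294]
```

Because the buffer never exceeds `capacity` examples, each call to `cpdf` examines a bounded number of stored points, which is what makes the per-object processing time predictable.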

4 Experiment

In order to study the performance of the proposed algorithm and evaluate its usefulness for computer-aided medical diagnosis, we carried out computer experiments on a real dataset containing observations of patients suffering from acid-base disorders. The human acid-base state (ABS) diagnosis task is related to disorders in the production and elimination of \(H^+\) and \(CO_2\) by the organism. During the course of treatment, recognition of the ABS is very important because the pH of physiological fluids has to be kept stable. ABS disorders have a dynamic character, and the current patient’s state depends on the symptoms, the previously applied treatment, and the previous classification. As a pattern recognition task, ABS diagnosis involves five classes: respiratory acidosis, metabolic acidosis, respiratory alkalosis, metabolic alkalosis, and the normal state. The features contain the values of the gasometric blood examination, i.e., \(pCO_2\), the concentration of \(HCO_3^-\), and pH, as well as the applied treatment (respiratory treatment, pharmacological treatment, or no treatment). The dataset consists of observation sequences of 78 patients (each sequence includes from 7 to 25 successive records). The whole dataset includes 1170 observations, which came from the Medical Academy of Wroclaw. The results of the experimental investigation are presented in Fig. 1.

The accuracy was evaluated not on a separate validation set but using the so-called “test-and-train” scheme [12]. First, a fixed number of examples is used to build the initial prototype of the classifier. Then each incoming object \(n\) is used to improve the model, which is evaluated on the next incoming example \(n+1\).
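A minimal Python sketch of the test-and-train evaluation loop described above. `prequential_accuracy`, `predict`, and `learn` are hypothetical names; the toy model in the usage example (repeat the previously seen label) is only for illustration and is not the paper’s classifier.

```python
from typing import Any, Callable, Iterable, Tuple


def prequential_accuracy(stream: Iterable[Tuple[Any, Any]], n_warmup: int,
                         predict: Callable[[Any], Any],
                         learn: Callable[[Any, Any], None]) -> float:
    """Accuracy under a test-and-train protocol.

    The first n_warmup labelled examples only build the initial model; every
    later example is first classified by the current model and only then used
    to update it, so no example is tested after it has been learnt.
    """
    correct = total = 0
    for i, (x, y) in enumerate(stream):
        if i >= n_warmup:
            correct += int(predict(x) == y)
            total += 1
        learn(x, y)
    return correct / total if total else float("nan")


if __name__ == "__main__":
    state = {"last": None}  # toy model: predict the previously seen label
    acc = prequential_accuracy(
        [(0, 1), (0, 1), (0, 2), (0, 2), (0, 2)], n_warmup=1,
        predict=lambda x: state["last"],
        learn=lambda x, y: state.update(last=y))
    print(acc)  # 0.75
```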

As we can see, the methods that take context into consideration outperform the classifier that recognizes objects independently. We can also observe that the accuracy of the methods exhibits asymptotic behavior. The accuracy of the proposed method is quite similar to that of the methods previously developed and applied to ABS diagnosis [13], but we have to underline that the proposed approach requires less memory, because only part of the data is stored. Of course, we realize that the scope of the experiments was limited, but the preliminary results encourage us to continue work on the proposed method in the future.

5 Final Remarks

A novel online classifier with context was presented in this work. Its performance seems very promising; therefore, we would like to continue work on the presented method. In the future, we would like to extend the method so that it takes into consideration changes in the model parameters caused by the applied control. We are going to extend the model by applying methods related to so-called recurrent concept drift. Additionally, we consider retraining the classifier in batch mode, i.e., improving the model not object by object but on the basis of collected data chunks, which could decrease the computational load of the algorithm. Finally, we are going to apply the classifier ensemble approach to the proposed method, e.g., by training a new individual classifier on each incoming data chunk, or by updating only the models of selected individuals in the classifier pool.

Notes

1. For the so-called 0–1 loss function, which returns 0 for a correct decision and 1 otherwise [8].

References

[1] Widmer, G., Kubat, M.: Learning in the presence of concept drift and hidden contexts. Mach. Learn. 23(1), 69–101 (1996)

[2] Domingos, P., Hulten, G.: A general framework for mining massive data streams. J. Comput. Graph. Stat. 12, 945–949 (2003)

[3] Raviv, J.: Decision making in Markov chains applied to the problem of pattern recognition. IEEE Trans. Inf. Theor. 13(4), 536–551 (1967)

[4] Toussaint, G.T.: The use of context in pattern recognition. Pattern Recogn. 10(3), 189–204 (1978)

[5] Haralick, R.M.: Decision making in context. IEEE Trans. Pattern Anal. Mach. Intell. PAMI 5(4), 417–428 (1983)

[6] Żołnierek, A.: Pattern recognition algorithms for controlled Markov chains and their application to medical diagnosis. Pattern Recogn. Lett. 1(5), 299–303 (1983)

[7] Ramamurthy, S., Bhatnagar, R.: Tracking recurrent concept drift in streaming data using ensemble classifiers. In: Proceedings of the Sixth International Conference on Machine Learning and Applications, ICMLA 2007, Computer Society, pp. 404–409. IEEE, Washington, DC (2007)

[8] Duda, R.O., Hart, P.E., Stork, D.G.: Pattern Classification, 2nd edn. Wiley, New York (2001)

[9] Settles, B.: Active learning. Synth. Lect. Artif. Intell. Mach. Learn. 6(1), 1–114 (2012)

[10] Kurlej, B., Wozniak, M.: Active learning approach to concept drift problem. Log. J. IGPL 20(3), 550–559 (2012)

[11] Goldstein, M.: \(k_n\)-nearest neighbor classification. IEEE Trans. Inf. Theor. 18(5), 627–630 (1972)

[12] Bifet, A., Holmes, G., Pfahringer, B., Read, J., Kranen, P., Kremer, H., Jansen, T., Seidl, T.: MOA: a real-time analytics open source framework. In: Gunopulos, D., Hofmann, T., Malerba, D., Vazirgiannis, M. (eds.) ECML PKDD 2011, Part III. LNCS, vol. 6913, pp. 617–620. Springer, Heidelberg (2011)

[13] Wozniak, M.: Proposition of common classifier construction for pattern recognition with context task. Knowl.-Based Syst. 19(8), 617–624 (2006)

Acknowledgements

This work was supported by EC under FP7, Coordination and Support Action, Grant Agreement Number 316097, ENGINE - European Research Centre of Network Intelligence for Innovation Enhancement (http://engine.pwr.wroc.pl/). This work was also supported by the Polish National Science Center under the grant no. DEC-2013/09/B/ST6/02264. All computer experiments were carried out using computer equipment sponsored by ENGINE project.


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Woźniak, M., Cyganek, B. (2016). A First Attempt on Online Data Stream Classifier Using Context. In: Tan, Y., Shi, Y. (eds) Data Mining and Big Data. DMBD 2016. Lecture Notes in Computer Science, vol 9714. Springer, Cham. https://doi.org/10.1007/978-3-319-40973-3_50


DOI: https://doi.org/10.1007/978-3-319-40973-3_50


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-40972-6

Online ISBN: 978-3-319-40973-3

eBook Packages: Computer Science, Computer Science (R0)